⬤ NVIDIA has introduced Nemotron 3 Nano, an AI model built for large-scale agentic reasoning. The model uses a 30-billion-parameter Mixture-of-Experts design but activates only about 3 billion parameters per forward pass, roughly one tenth of the total. This sparse architecture lets the model process context windows of up to 1 million tokens while keeping the compute cost per token low.

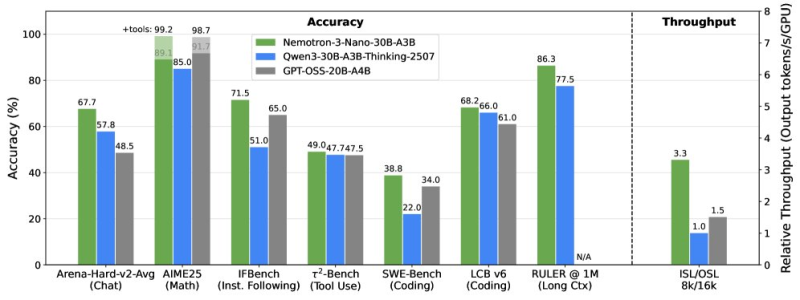

⬤ Performance testing shows Nemotron 3 Nano outpacing comparable open models such as Qwen-30B-A3B-Thinking and GPT-OSS-20B-A4B across several benchmarks. These cover conversational tasks (Arena-Hard), mathematical reasoning (AIME25), instruction following (IFBench), and coding challenges (SWE-Bench). Its long-context performance is a particular strength: the model maintains strong accuracy even when processing 1-million-token windows.

⬤ The speed advantage comes from activating only a fraction of the available parameters on each forward pass. Per-token compute therefore scales with the roughly 3 billion active parameters rather than the full 30 billion, which reduces latency and speeds up output generation. This makes Nemotron 3 Nano particularly well suited to real-time applications that need both scale and responsiveness.
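
To make the idea of sparse activation concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a generic toy Mixture-of-Experts layer, not NVIDIA's implementation: the expert count, `TOP_K`, the vector size, and the function names (`moe_forward`, `top_k_indices`) are all assumptions chosen for illustration. The only figure taken from the article is the ratio of 3 billion active to 30 billion total parameters.

```python
import math
import random


def top_k_indices(scores, k):
    """Return the indices of the k largest scores."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def moe_forward(x, router, experts, k):
    """Send one token vector through only the k highest-scoring experts."""
    scores = matvec(router, x)            # one routing score per expert
    chosen = top_k_indices(scores, k)     # experts that will actually run
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        # Each toy expert is a single square matrix standing in for a feed-forward block.
        y = matvec(experts[idx], x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Hypothetical sizes, picked so that 1 of 10 experts runs per token.
random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 10, 1
router = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [
    [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(DIM)]
    for _ in range(NUM_EXPERTS)
]
x = [random.gauss(0, 1) for _ in range(DIM)]

output, chosen = moe_forward(x, router, experts, TOP_K)

# Fraction of expert weights touched for this token in the toy layer.
active_fraction = TOP_K / NUM_EXPERTS
# Ratio quoted in the article: 3 billion active of 30 billion total.
article_fraction = 3e9 / 30e9
```

In this toy setup only one expert's weights are multiplied per token, so the expert compute is about a tenth of what a dense layer of the same total size would need. A real model also has shared components such as attention and embeddings that run for every token, so the sketch illustrates the routing principle rather than the model's actual parameter accounting.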

⬤ Nemotron 3 Nano strengthens NVIDIA's position in the competitive AI market. Its combination of high-throughput reasoning and scalability makes it suitable for enterprise AI deployments and real-time data processing. As organizations demand more efficient large-scale AI solutions, NVIDIA's architectural innovations help the company maintain its leadership in both semiconductors and AI.

Eseandre Mordi
