⬤ Kimi is getting a fresh look from the AI community after claims emerged that the company might be seriously undervalued. The buzz started when observers pointed out that Kimi's team has shipped solid releases like K2 while running on a valuation estimated at four to five times less than competing AI labs. The timing isn't random—new benchmark charts just dropped showing how Kimi stacks up against the big names in foundation models.

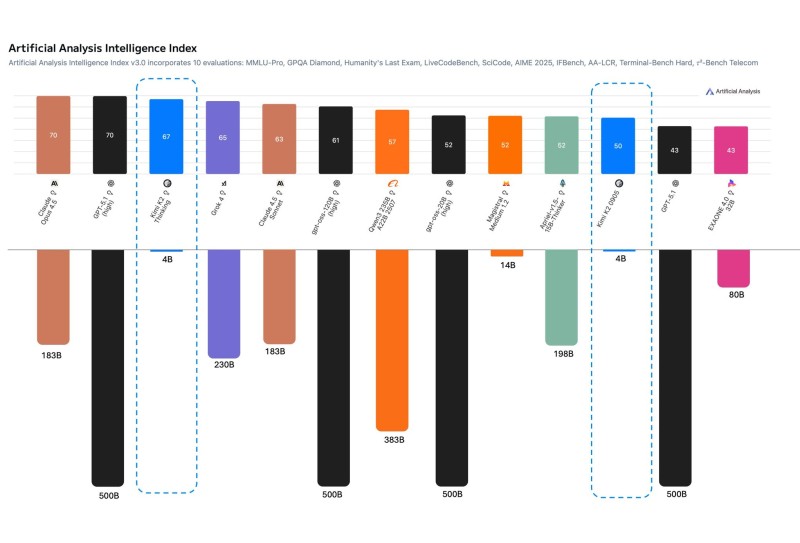

⬤ The performance data comes from the Artificial Analysis Intelligence Index and puts Kimi right alongside heavyweights like GPT-5, Claude 3.5, Grok-4, and Moonshot. Kimi K2 Think a landed near the middle of the pack, matching or beating several competitors that have way more funding behind them. Another version, Kimi L2 200B, also made the cut and held its own next to much larger frontier models. It's a pretty clear signal that Kimi is punching above its weight class given the resource gap.

⬤ This renewed interest comes at a time when benchmark scores are playing a bigger role in how people judge both capability and investment potential across the AI sector. The chart pulls from multiple tests—MMLU-Pro, GPQA Diamond, SciCode, LiveCodeBench, and others—giving a broader picture of what these models can actually do beyond just parameter counts. With systems ranging from 14B to 500B parameters in the comparison, Kimi's positioning shows the company is staying competitive against players with significantly deeper pockets.

⬤ The conversation around Kimi's valuation taps into wider debates about efficiency, capital deployment, and where the competitive edge really lies in the global AI race. As benchmark-driven assessments carry more weight in funding decisions, companies that deliver strong performance on leaner budgets could start attracting more attention. Kimi's presence in these rankings alongside much bigger models points to shifting dynamics in how the market evaluates value, capability, and future upside in the AI space.

Usman Salis

Usman Salis

Usman Salis

Usman Salis