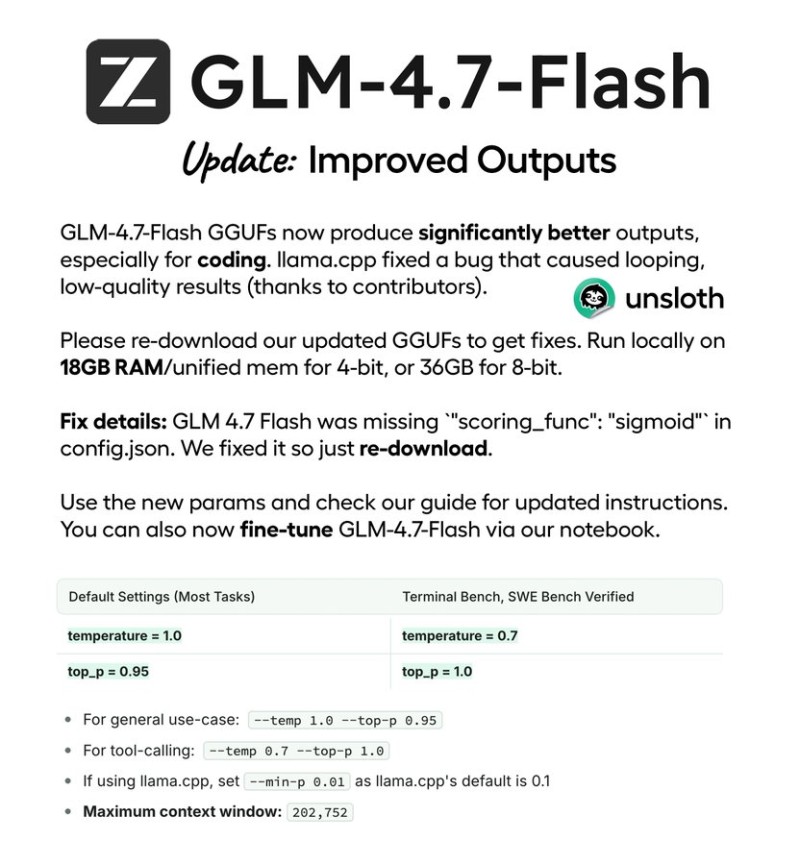

⬤ GLM-4.7-Flash just received a game-changing update that fixes a critical bug affecting local deployments. The team behind UnslothAI announced that they've reconverted the GGUF files after recent llama.cpp patches resolved an annoying looping issue that was causing degraded responses. If you've been running this model locally, you'll want to grab the updated files and tweak your inference settings to see the improvements.

⬤ Here's the exciting part: you can now run GLM-4.7-Flash in 4-bit quantization on systems with just 18GB of RAM or unified memory. The fix addressed a missing scoring configuration that was messing with output stability. Since the patch, users are reporting much more consistent results, especially when working on coding tasks where reliability really matters.

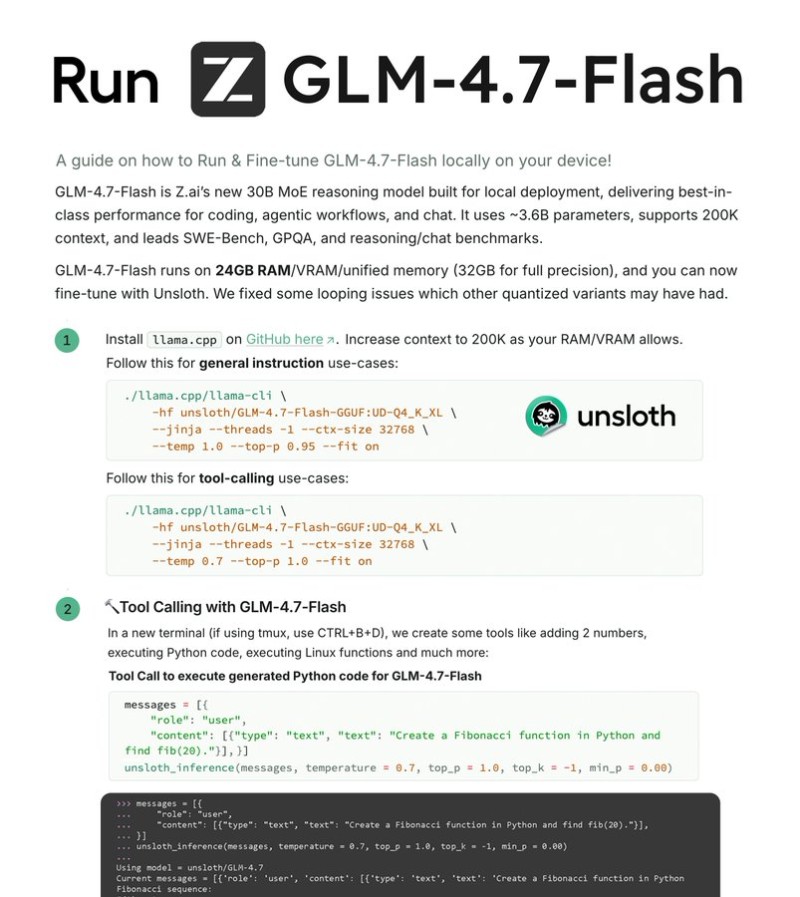

⬤ For context, GLM-4.7-Flash is a 30B mixture-of-experts reasoning model built specifically for local deployment. It handles up to 200K tokens in context and has shown solid performance on tough benchmarks like SWE-Bench and GPQA. The updated documentation now includes clearer runtime instructions for llama.cpp, with specific parameter recommendations for general use and tool-calling workflows, plus guidance if you want to fine-tune it using Unsloth's tools.

⬤ This update matters because it's part of a bigger movement toward practical, on-device AI. When models become easier to run locally with better quality and lower hardware requirements, developers get more flexibility and control over their AI workflows without relying on cloud services. Updates like this show that high-performance local inference is becoming a real alternative to centralized solutions.

Usman Salis

Usman Salis

Usman Salis

Usman Salis