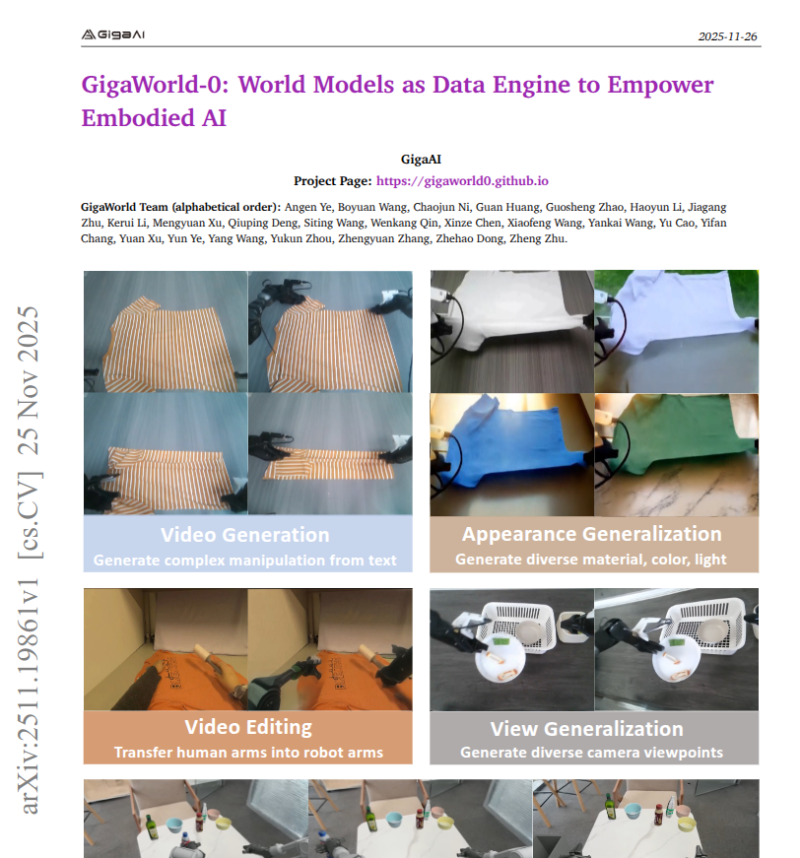

⬤ GigaAI just released GigaWorld-0, a world-model framework built to generate synthetic robot experience at scale for embodied AI systems. The idea is straightforward: robots that take camera input and basic language commands learn what to do by training entirely on synthetic data. The system demonstrates video generation, appearance changes, editing, and viewpoint adjustments, the kind of controls you'd expect from a solid simulation engine.

⬤ Here's the thing: robots trained purely on GigaWorld-0 synthetic data pulled off real household tasks like folding laundry and handling dishes without any real-world practice sessions. The system starts with a video model that generates or edits robot task footage, varying textures, lighting, and camera angles and producing multiple possible outcomes from the same starting point. Additional tools make simulation videos look more lifelike, transform human demo footage into robot-arm movements, and switch viewpoints while keeping the motion consistent.

⬤ GigaWorld-0 packs a second component that builds full 3D environments from scratch. This part reconstructs entire rooms, objects, and robot bodies with geometry that stays stable across different camera views and physical movement. These 3D scenes get converted into videos and run through the system's variation tools, creating a highly diverse training pipeline. The pitch: training on this mixed synthetic dataset beats relying on limited real-world samples alone.
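To get a feel for why stacking variation tools on top of a few scenes adds up so quickly, here is a minimal sketch in Python. It is not GigaWorld-0's API: the `Clip` class, the `expand_variations` function, and every scene, texture, lighting, and camera name below are hypothetical placeholders. It only illustrates the combinatorics of crossing each scene with every appearance and viewpoint setting.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Clip:
    """One synthetic training clip, described by its settings (hypothetical schema)."""
    scene: str
    texture: str
    lighting: str
    camera: str


def expand_variations(scenes, textures, lightings, cameras):
    """Return one Clip for every combination of scene, texture, lighting, and camera."""
    return [
        Clip(scene, texture, lighting, camera)
        for scene, texture, lighting, camera in product(
            scenes, textures, lightings, cameras
        )
    ]


# Placeholder values chosen for illustration only.
clips = expand_variations(
    scenes=["kitchen", "laundry_room"],
    textures=["wood", "marble"],
    lightings=["day", "night"],
    cameras=["wrist", "overhead", "front"],
)
print(len(clips))  # 2 * 2 * 2 * 3 = 24
```

Two scenes turn into 24 distinct clip configurations here, and each extra option on any axis multiplies the total again. A real pipeline would render or generate video for each configuration instead of just listing it.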

⬤ The GigaWorld-0 launch shows the momentum behind synthetic-data training setups that expand robotic learning capacity. It reflects what's happening across embodied-AI research right now: simulation-first methods are becoming central to speeding up progress, breaking free from data collection bottlenecks, and getting robotics closer to dependable real-world performance.

Peter Smith
