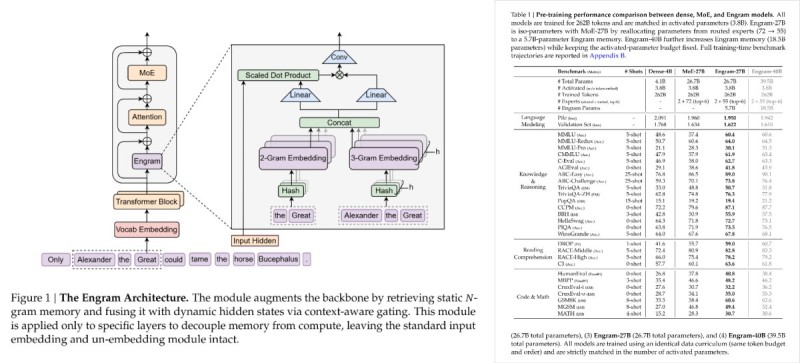

⬤ DeepSeek has published new research on Engram, a memory-focused module that changes how large language models store and retrieve information. Rather than simply adding parameters, Engram is built as a conditional, scalable memory system. The paper's architecture diagram shows Engram sitting alongside standard transformer blocks: it looks up static n-gram memory and mixes it with the model's dynamic hidden states through context-aware gating. The original input embedding and output layers stay intact.
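To make the "static memory plus context-aware gate" idea concrete, here is a minimal sketch in plain Python. It is not DeepSeek's implementation: the rolling hash in `ngram_slot`, the scalar sigmoid gate, the residual-style addition in `gated_fuse`, and all sizes are assumptions chosen for illustration.

```python
import math
import random


def ngram_slot(tokens, pos, n, table_size):
    """Map the n-gram ending at `pos` to a table row (hypothetical hashing scheme)."""
    h = 0
    for t in tokens[max(0, pos - n + 1): pos + 1]:
        h = (h * 1000003 + t) % table_size
    return h


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fuse(hidden, memory, gate_weights):
    """Blend a static memory vector into a hidden state.

    The gate is computed from the hidden state (the context), so the same
    memory row can contribute more or less depending on where it appears.
    Returns the fused vector and the gate value.
    """
    score = sum(w * h for w, h in zip(gate_weights, hidden))
    g = sigmoid(score)
    return [h + g * m for h, m in zip(hidden, memory)], g


random.seed(0)
DIM, TABLE_SIZE, N = 4, 64, 2  # toy sizes, assumed for the demo

# Static memory: one vector per hashed n-gram slot.
table = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(TABLE_SIZE)]
gate_weights = [random.uniform(-1, 1) for _ in range(DIM)]

tokens = [5, 17, 42, 17, 42]
# Stand-in for the dynamic hidden states a transformer layer would produce.
hidden_states = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in tokens]

fused, gates = [], []
for pos, hidden in enumerate(hidden_states):
    row = table[ngram_slot(tokens, pos, N, TABLE_SIZE)]
    out, g = gated_fuse(hidden, row, gate_weights)
    fused.append(out)
    gates.append(g)
```

In this toy run the bigram `(17, 42)` occurs at positions 2 and 4, so both positions read the same memory row, but the gate differs because the hidden states differ. That is the sense in which the lookup is static while the mixing is context-dependent.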

⬤ In the diagram, Engram runs parallel to the attention mechanism, fetching compressed n-gram representations and blending them with model activations only at specific layers. This lets memory capacity grow without a matching rise in compute cost, which addresses efficiency problems that affect both dense models and Mixture-of-Experts approaches. The key difference from MoE routing: Engram doesn't add active parameters during inference, so performance costs stay predictable.
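The claim that memory can grow without extra compute follows from how a lookup table behaves: each token reads a fixed number of rows no matter how many rows exist. The sketch below illustrates that property under assumed details; `CountingTable`, the choice of 2-gram and 3-gram orders, and the on-demand row generation are hypothetical and are not taken from the paper.

```python
class CountingTable:
    """A stand-in memory table that counts how many rows are read."""

    def __init__(self, size, dim):
        self.size = size
        self.dim = dim
        self.reads = 0

    def lookup(self, key):
        self.reads += 1
        idx = key % self.size
        # Rows are generated on demand so the demo never allocates a huge table.
        return [((idx * 31 + j) % 7) / 7.0 for j in range(self.dim)]


def reads_for_sequence(table, tokens, orders=(2, 3)):
    """Look up one row per n-gram order per token and return the total reads."""
    for pos in range(len(tokens)):
        for n in orders:
            key = 0
            for t in tokens[max(0, pos - n + 1): pos + 1]:
                key = key * 1000003 + t
            table.lookup(key)
    return table.reads


tokens = [3, 14, 15, 92, 65, 35]
small = CountingTable(size=1_000, dim=8)
large = CountingTable(size=1_000_000_000, dim=8)

small_reads = reads_for_sequence(small, tokens)
large_reads = reads_for_sequence(large, tokens)
print(small_reads, large_reads)
```

Both tables report 12 reads for the six tokens (two n-gram orders each), even though the second table is a million times larger. Per-token work depends on the number of lookups, not on the table's capacity.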

⬤ Benchmark data in the paper compares dense models, MoE variants, and Engram-based architectures across language modeling, reasoning, reading comprehension, and coding tasks. With identical training budgets and token counts, Engram-equipped models hold their own and pull ahead on several benchmarks, showing lower validation loss and stronger downstream performance. The takeaway? Memory augmentation might deliver better gains than just adding more parameters.

⬤ The AI research community is paying attention. Industry watchers increasingly see Engram as a potential foundation for DeepSeek's upcoming models. DeepSeek hasn't confirmed any product timelines, but the research makes its strategy clear: the company is betting on modular memory systems that scale efficiently. It's part of a broader shift in advanced AI design toward separating memory from compute, and Engram looks like a solid step in that direction for next-generation architectures.

Alex Dudov
