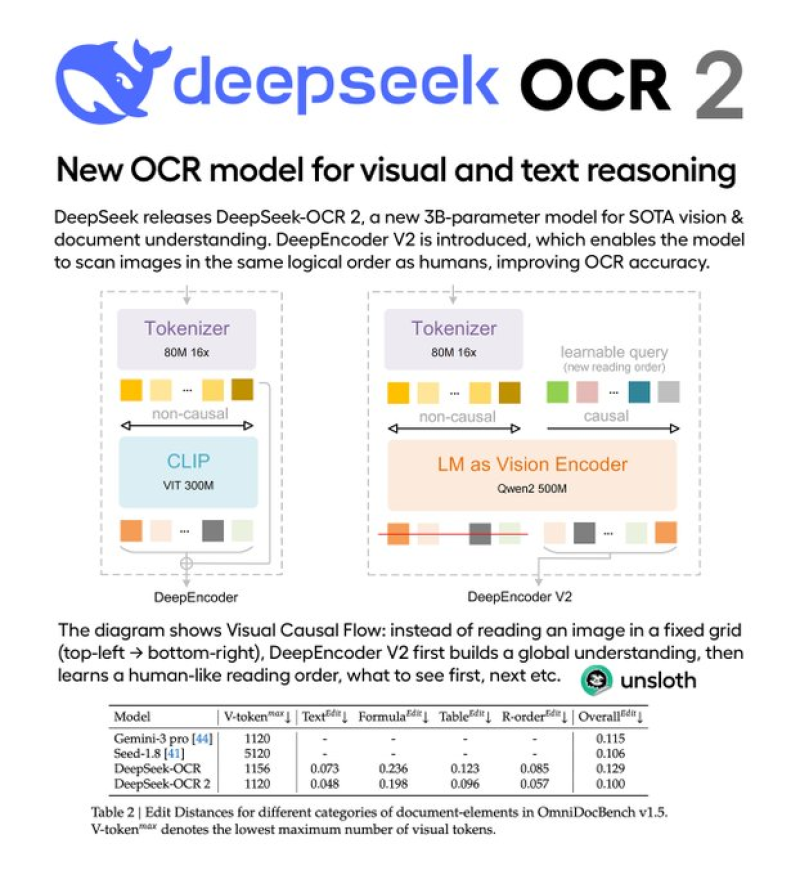

⬤ DeepSeek AI has rolled out DeepSeek-OCR 2, a 3 billion parameter visual-language model built to handle advanced optical character recognition and document interpretation. The model brings markdown-friendly document conversion, layout-free text extraction, and dynamic resolution support to the table, making it particularly useful for complex, mixed-layout scenarios where traditional OCR systems struggle.

⬤ The real game-changer here is DeepEncoder V2, which ditches the old fixed grid scanning approach. Instead of plowing through images in a rigid pattern, it uses causal visual flow to build a global understanding first, then figures out the reading order.

⬤ This mimics how humans actually read documents, focusing on structure and context rather than just left-to-right processing. The system packs up to 1,120 visual tokens and uses a language-model-as-vision-encoder design based on Qwen2.5 components.

⬤ Performance metrics from OmniDocBench v1.5 show DeepSeek-OCR 2 delivering lower edit distances across text blocks, formulas, tables, and reading order compared to existing solutions. The model runs on PyTorch, uses FlashAttention 2 for faster inference, and handles multilingual image-to-text workloads. Released under Apache 2.0 license, it's ready for deployment through Hugging Face Transformers.

⬤ As demand for reliable document digitization grows across enterprise and research settings, DeepSeek-OCR 2's improvements in reading order recognition and layout-independent extraction could shake up data processing, automation, and knowledge retrieval applications. The open-source release adds competitive pressure in the OCR and document AI space, potentially reshaping how organizations tackle document intelligence solutions.

Usman Salis

Usman Salis

Usman Salis

Usman Salis