● Anthropic just rolled out sandboxing for the Claude Code CLI, and it's a big deal for anyone worried about AI agent security. The feature was announced by Cat and Thariq, who both stressed that it makes coding with AI safer and less annoying.

● Here's the gist: the sandbox puts a boundary between your actual system and what Claude Code can touch. You approve specific directories and network connections, and everything else gets blocked. Cat mentioned that internal testing showed an 84% reduction in permission prompts, so you only approve the things that actually matter instead of clicking "yes" a hundred times.

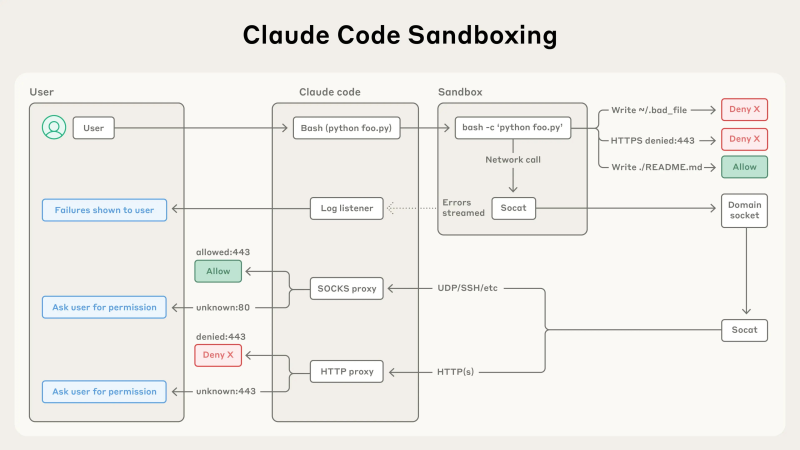

● The risks it tackles are real: unauthorized file access, unexpected network calls, and data leaks. The architecture only allows safe operations, such as writing a README or calling approved HTTPS endpoints. Anything else is denied automatically, and you see exactly what was blocked.

● For companies, this could mean fewer security incidents and lower compliance costs. Instead of bolting on third-party security tools, the controls are built into Claude Code itself. That's useful for enterprises adopting AI at scale without wanting to blow their security budget.

● The bigger picture is that AI governance is heating up globally, and Anthropic is positioning itself ahead of the curve with secure-by-design thinking. Turning it on is simple: type `/sandbox` and set your rules for files and hosts. As Thariq put it, you get to "define exactly which directories and network hosts your agent can access."
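● To make the deny-by-default idea concrete, here's a rough Python sketch of how an allowlist of directories and network hosts can gate an agent's actions. It's a toy model built on assumptions: the `SandboxPolicy` class, its fields, and its reason strings are hypothetical and are not how Claude Code actually enforces its sandbox or stores `/sandbox` settings.

```python
import posixpath
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from urllib.parse import urlparse


@dataclass
class SandboxPolicy:
    """Hypothetical deny-by-default policy: only listed directories and hosts are allowed."""
    writable_dirs: list[str] = field(default_factory=list)
    allowed_hosts: set[str] = field(default_factory=set)

    def check_write(self, path: str) -> tuple[bool, str]:
        # Collapse ".." segments so "project/../.ssh" cannot escape the allowlist.
        normalized = PurePosixPath(posixpath.normpath(path))
        if not normalized.is_absolute():
            return False, f"denied write: {path} is not an absolute path"
        for allowed in self.writable_dirs:
            # Lexical, part-by-part check: "/a/project2" is NOT inside "/a/project".
            if normalized.is_relative_to(allowed):
                return True, f"allowed write: {normalized}"
        return False, f"denied write: {normalized} is outside approved directories"

    def check_connect(self, url: str) -> tuple[bool, str]:
        # Only HTTPS to explicitly approved hosts; everything else is blocked.
        parsed = urlparse(url)
        if parsed.scheme != "https":
            return False, f"denied connect: {url} is not HTTPS"
        if parsed.hostname not in self.allowed_hosts:
            return False, f"denied connect: host {parsed.hostname} is not approved"
        return True, f"allowed connect: {parsed.hostname}"


policy = SandboxPolicy(
    writable_dirs=["/home/me/project"],  # hypothetical project directory
    allowed_hosts={"api.github.com"},    # hypothetical approved host
)
print(policy.check_write("/home/me/project/README.md"))
# (True, 'allowed write: /home/me/project/README.md')
print(policy.check_write("/home/me/project/../.ssh/id_rsa"))
# (False, 'denied write: /home/me/.ssh/id_rsa is outside approved directories')
print(policy.check_connect("https://evil.example.com/upload"))
# (False, 'denied connect: host evil.example.com is not approved')
```

The key design choice is the default: anything not explicitly listed is denied, and every decision carries a reason string, which mirrors the "you see exactly what was blocked" behavior the announcement describes.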

Usman Salis