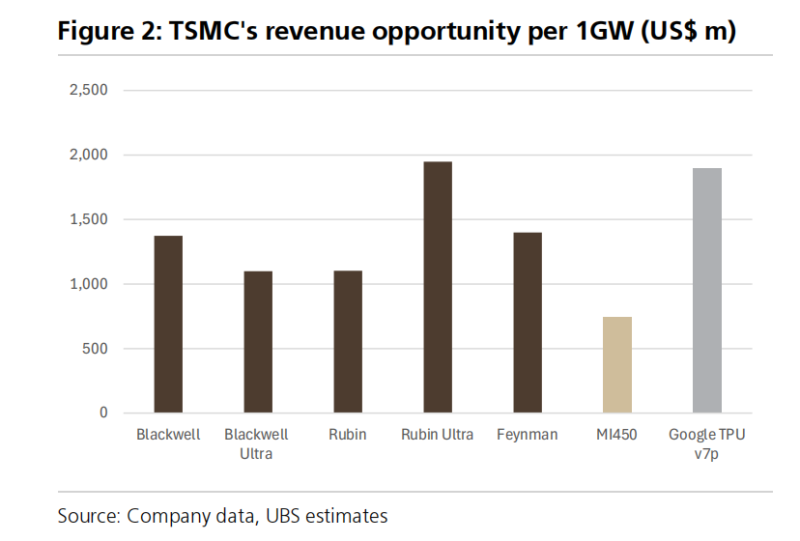

⬤ TSMC (TSM) is set to become one of the biggest winners in the global AI server infrastructure boom. UBS estimates that every 1GW server project now generates $1–2 billion in revenue for the foundry, thanks to the increasing silicon intensity of next-gen AI platforms. With OpenAI and its cloud partners planning a combined 26GW of compute deployments across NVIDIA, AMD, and Google systems, UBS calculates TSMC's revenue potential from these projects at $34.4 billion—a significant jump from earlier projections.

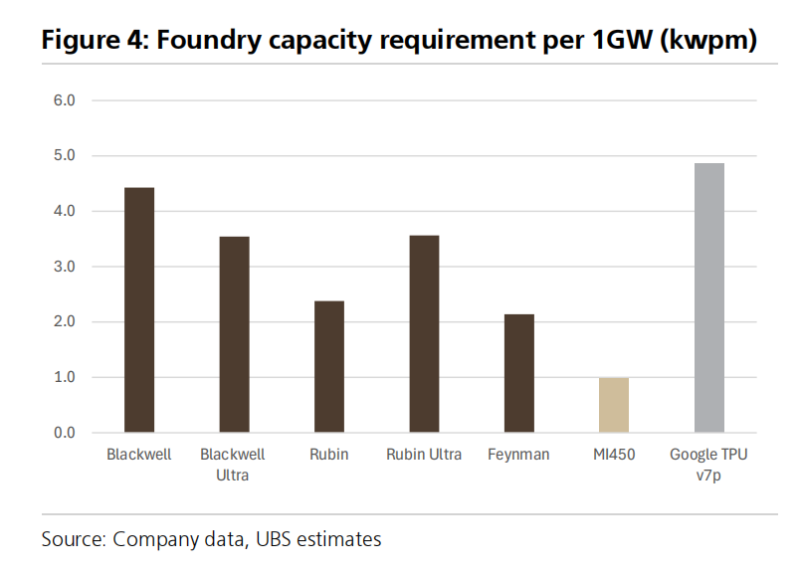

⬤ The numbers break down like this: TSMC could pull in $11.0 billion from NVIDIA's share of the build-out, $4.5 billion from AMD, and $18.9 billion from Google's AI infrastructure plans. Platform differences matter here. Blackwell Ultra and Rubin architectures generate around $1.1 billion per GW, while the shift to Rubin Ultra and Feynman lifts that figure to $1.4–1.9 billion per GW. Google's TPU v7p platform sits at the top end, delivering roughly $2.0 billion per GW. Each 1GW project alone represents about 1.0–1.5% of TSMC's projected 2025 sales.
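For readers who want to check the arithmetic, the short Python sketch below adds up the three customer figures quoted above and works out a blended revenue per GW across the 26GW plan. It uses only numbers from the UBS estimates. The variable names are illustrative, and the blended average is a simple division rather than a figure UBS published.

```python
# UBS revenue estimates for TSMC by customer, in billions of US dollars
# (figures as quoted in the article).
revenue_by_customer = {"NVIDIA": 11.0, "AMD": 4.5, "Google": 18.9}

# Combined planned compute deployment, in gigawatts (as quoted in the article).
total_gw = 26

# Sum of the three customer estimates.
total_revenue = sum(revenue_by_customer.values())

# Blended revenue per GW: a simple average across all platforms,
# not a number taken from the UBS note.
avg_per_gw = total_revenue / total_gw

# Each customer's share of the combined estimate.
shares = {name: rev / total_revenue for name, rev in revenue_by_customer.items()}

print(f"Total revenue potential: ${total_revenue:.1f}B")
print(f"Blended revenue per GW: ${avg_per_gw:.2f}B")
for name, share in shares.items():
    print(f"{name}: {share:.1%} of the total")
```

The customer figures add up to the $34.4 billion headline, and the blended average falls inside the $1–2 billion per GW range that UBS cites.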

⬤ UBS points out that the move toward more advanced architectures will keep driving TSMC's revenue concentration higher. The greater wafer requirements of the new platforms, especially Rubin Ultra and Google TPU v7p, underscore TSMC's edge in advanced nodes. As hyperscale customers race to build multi-gigawatt clusters and AI models grow larger and more compute-hungry, TSMC's share of the silicon content in each deployment is positioned to climb.

⬤ These projections put TSMC right at the heart of the next wave of AI infrastructure spending, creating a strong multi-year earnings catalyst. UBS forecasts up to $14.6 billion in operating profit from the announced projects. TSMC's role in powering large-scale AI deployments is becoming increasingly critical to global semiconductor dynamics, and it is shaping expectations for capital investment, capacity expansion, and long-term industry leadership.

Peter Smith
