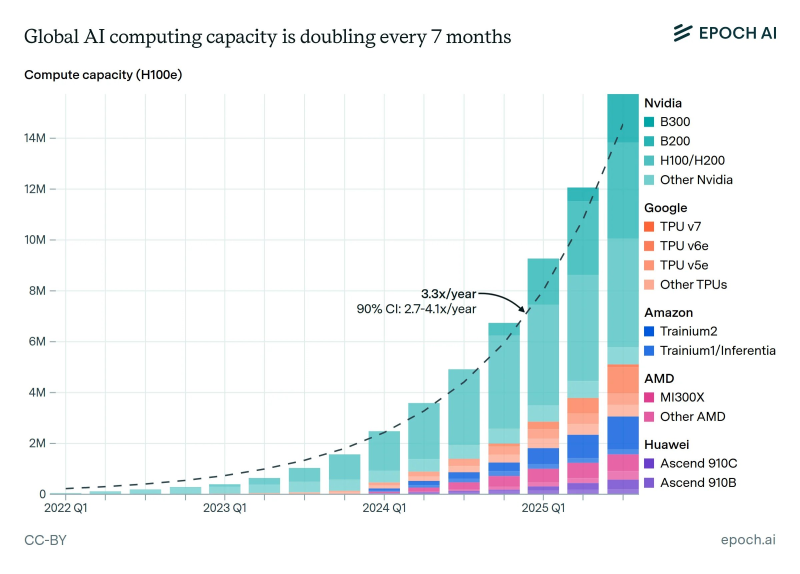

⬤ Global AI computing capacity is growing far faster than traditional technology trends. Worldwide AI compute now doubles approximately every seven months, which works out to roughly 3.3x growth per year. Epoch AI data covering 2022 through early 2025 shows total compute, measured in H100-equivalent units, climbing steeply quarter after quarter.

⬤ Nvidia dominates AI compute deployment by a wide margin. The company's H100 and H200 accelerators make up the bulk of total capacity, and newer B200 and B300 systems are starting to add meaningful volume as deployments ramp up. Because Nvidia hardware also accounts for the majority of growth in global AI compute, the company remains central to large-scale model training and inference infrastructure.

⬤ Major tech companies are scaling their custom silicon alongside Nvidia. Google's TPU platforms, including TPU v5e, v6e, and v7, show steady growth, while Amazon is expanding capacity through its Trainium and Inferentia chips. AMD's MI300X accelerators and Huawei's Ascend processors contribute smaller but growing shares. Although these alternatives are increasing in absolute terms, their combined capacity remains well below Nvidia's.

⬤ This rapid expansion in AI computing capacity matters because the availability of physical hardware has become the defining factor in AI progress. With compute scaling far faster than the historical Moore's Law pace, model development now depends heavily on capital investment and supply chains. The concentration of growth in Nvidia and the major cloud providers shows how infrastructure buildout is shaping the next wave of artificial intelligence across global markets.
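
For readers who want to check the arithmetic, the short Python sketch below converts a doubling time into an annual growth multiple. The seven-month figure comes from the article. The 24-month doubling used for the Moore's Law comparison is an assumption based on the commonly cited form of that rule, not a number from the Epoch AI data. The function and constant names are illustrative only.

```python
def growth_factor(months: float, doubling_months: float) -> float:
    """Multiple by which capacity grows over `months`, given a fixed doubling time."""
    return 2 ** (months / doubling_months)


# Figure from the article: AI compute doubles roughly every 7 months.
AI_DOUBLING_MONTHS = 7
# Assumption (not from the article): Moore's Law taken as a doubling every 24 months.
MOORE_DOUBLING_MONTHS = 24

ai_annual = growth_factor(12, AI_DOUBLING_MONTHS)
moore_annual = growth_factor(12, MOORE_DOUBLING_MONTHS)

print(f"AI compute: {ai_annual:.1f}x per year")            # AI compute: 3.3x per year
print(f"24-month doubling: {moore_annual:.1f}x per year")  # 24-month doubling: 1.4x per year
```

Under these assumptions, a seven-month doubling time gives about 3.3x growth per year, compared with about 1.4x per year for a 24-month doubling.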

Marina Lyubimova
