⬤ A team from Shanghai Jiao Tong University and Tencent has tackled one of the biggest headaches in AI-assisted programming: code that looks good but crashes when you actually try to run it. Their solution, AP2O-Coder, takes a different approach by treating coding failures as learning opportunities rather than random mistakes.

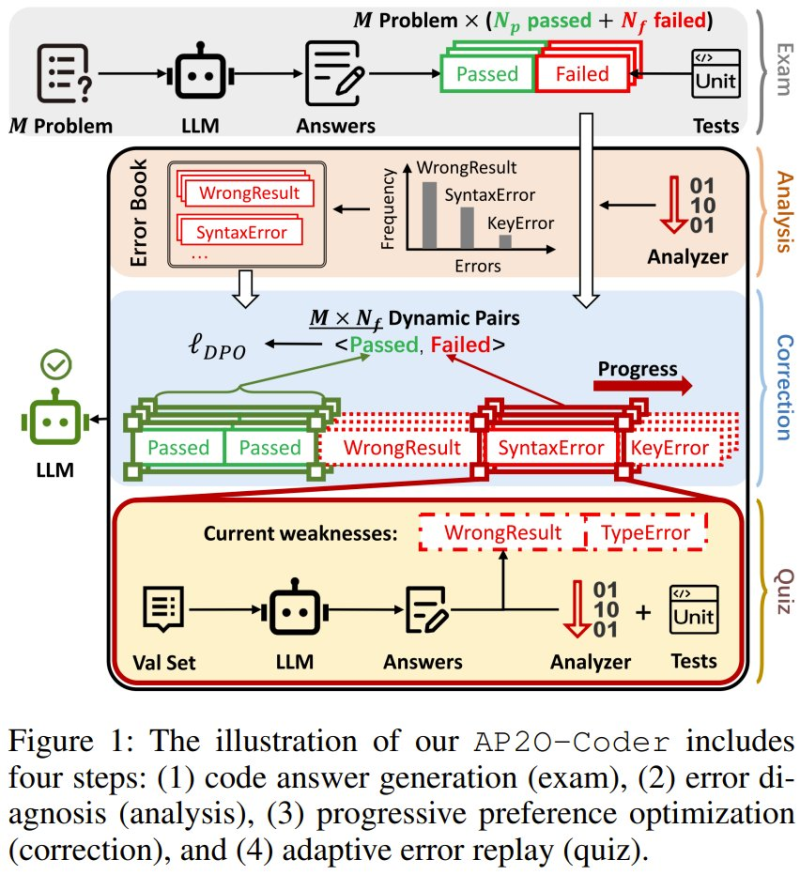

⬤ The system works through four stages. First, it generates code and runs it against unit tests. Next, it analyzes what went wrong, such as syntax errors, incorrect results, or undefined variables. The framework then tracks which types of mistakes occur most often and builds training examples that contrast working code with broken code for each specific error type.
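To make the first steps concrete, here is a minimal Python sketch of running candidate code against a unit test, labeling each failure by type, and tallying the labels. It is an illustration only, not the researchers' implementation: the function name `classify_failure`, the label names, and the toy `add` task are all assumptions made for this example.

```python
from collections import Counter

def classify_failure(code: str, test: str) -> str:
    """Run candidate code plus its unit test; return 'pass' or an error label.

    Hypothetical helper for illustration. Real pipelines would sandbox the
    execution instead of calling exec() in-process.
    """
    namespace = {}
    try:
        exec(compile(code, "<candidate>", "exec"), namespace)
        exec(compile(test, "<test>", "exec"), namespace)
    except SyntaxError:
        return "SyntaxError"      # code does not even parse
    except AssertionError:
        return "WrongResult"      # code runs but returns the wrong answer
    except NameError:
        return "NameError"        # code refers to an undefined variable
    except Exception as exc:
        return type(exc).__name__  # any other runtime failure
    return "pass"

# Toy candidates for a single task (assumed data, not from the study).
candidates = [
    "def add(a, b):\n    return a + b",  # correct
    "def add(a, b):\n    return a - b",  # wrong result
    "def add(a, b)\n    return a + b",   # missing colon
    "def add(a, b):\n    return a + c",  # undefined variable
]
test = "assert add(2, 3) == 5"

labels = [classify_failure(c, test) for c in candidates]

# Tally how often each error type occurs, ignoring passing samples.
error_counts = Counter(label for label in labels if label != "pass")
```

The resulting `error_counts` is the kind of per-type tally that a later step could use to decide which mistakes deserve the most training attention.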

⬤ What makes AP2O-Coder different is how it trains models to fix their own weaknesses. Instead of aiming for generic improvement, it focuses on the exact error patterns each model struggles with most. The researchers tested the approach on Llama, Qwen, and DeepSeek models and report that it performed better than traditional methods while needing far less training data. The final validation stage checks that the model has actually learned to avoid its previous mistakes.
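One way to picture this targeted training is as a set of preference pairs grouped by error type, with the most common error types handled first. The Python sketch below assumes that reading; the article does not spell out the actual training schedule, so the ordering rule, the function names `build_pairs` and `order_by_frequency`, and the sample records are all hypothetical.

```python
from collections import defaultdict

def build_pairs(samples):
    """Group (chosen, rejected) pairs by the error type of the rejected code.

    `samples` is a list of dicts with keys 'prompt', 'code', and 'label',
    where label is 'pass' or an error type. Hypothetical format.
    """
    # Keep the first passing solution seen for each prompt.
    passing = {}
    for s in samples:
        if s["label"] == "pass":
            passing.setdefault(s["prompt"], s["code"])

    groups = defaultdict(list)
    for s in samples:
        # A failing sample is only usable if the same prompt has a passing one.
        if s["label"] != "pass" and s["prompt"] in passing:
            groups[s["label"]].append({
                "prompt": s["prompt"],
                "chosen": passing[s["prompt"]],
                "rejected": s["code"],
            })
    return groups

def order_by_frequency(groups):
    """Return (error_type, pairs) tuples, most frequent error type first."""
    return sorted(groups.items(), key=lambda kv: len(kv[1]), reverse=True)

# Assumed sample records for illustration only.
samples = [
    {"prompt": "p1", "code": "ok1", "label": "pass"},
    {"prompt": "p1", "code": "bad1", "label": "NameError"},
    {"prompt": "p1", "code": "bad2", "label": "WrongResult"},
    {"prompt": "p2", "code": "ok2", "label": "pass"},
    {"prompt": "p2", "code": "bad3", "label": "WrongResult"},
    {"prompt": "p3", "code": "bad4", "label": "SyntaxError"},  # no passing peer
]

groups = build_pairs(samples)
schedule = order_by_frequency(groups)
```

Each entry in `schedule` could then be passed to a preference-based trainer, one error type at a time. Prompts with no passing solution, such as `p3` here, produce no pair because there is nothing to contrast the broken code with.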

⬤ This represents a smarter way to improve AI coding tools. Rather than just throwing more computing power at the problem, AP2O-Coder shows that understanding why code fails leads to better solutions. For companies building AI-assisted software, automated testing systems, or developer tools, this kind of targeted error correction could mean fewer bugs and more reliable code generation in real-world applications.

Usman Salis
