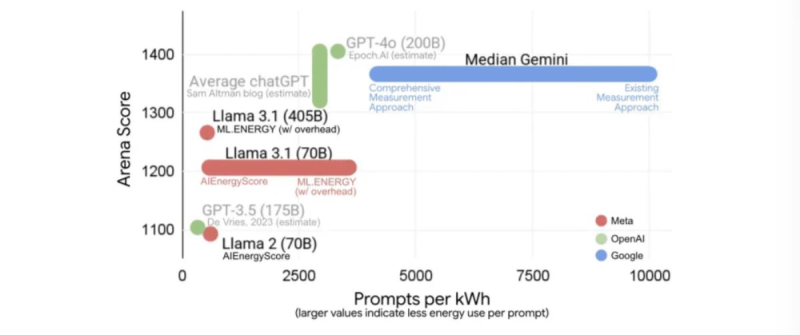

⬤ Google released new measurements showing how much less energy its AI text queries actually need. Public conversation about AI's environmental impact still leans on 2023 estimates that turned out to be nearly 100 times too high. Google's current data shows a typical Gemini prompt now requires just five drops of water, nine seconds of TV-equivalent electricity, and 0.03 grams of CO₂—a 33× efficiency gain over last year. The chart included in the announcement compares Gemini's performance with major models from OpenAI and Meta.

⬤ The visualization maps Arena Score against prompts per kilowatt-hour, where moving right means better efficiency. Gemini reaches close to 10,000 prompts per kWh, leaving GPT-4o, GPT-3.5, Llama 3.1, and Llama 2 well behind. OpenAI's models cluster around 3,000 to 4,000 prompts per kWh, while Meta's offerings sit between 1,000 and 2,500. The chart also marks earlier public estimates, highlighting how far outdated assumptions have drifted from Google's latest numbers.
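
To put the chart's axis in more familiar terms, the prompts-per-kWh readings quoted above can be inverted into watt-hours per prompt. The snippet below is a minimal sketch of that arithmetic only; the function name and the `chart_ranges` dictionary are illustrative, and the numbers are the approximate chart readings from this article, not official per-model measurements.

```python
def wh_per_prompt(prompts_per_kwh: float) -> float:
    """Convert a prompts-per-kWh figure into watt-hours per prompt."""
    if prompts_per_kwh <= 0:
        raise ValueError("prompts_per_kwh must be positive")
    # 1 kWh = 1,000 Wh, so energy per prompt is 1,000 divided by the prompt count.
    return 1000.0 / prompts_per_kwh


# Approximate (low, high) chart readings quoted in the article, in prompts per kWh.
chart_ranges = {
    "Gemini": (10_000, 10_000),
    "OpenAI models": (3_000, 4_000),
    "Meta models": (1_000, 2_500),
}

for name, (low, high) in chart_ranges.items():
    # More prompts per kWh means less energy per prompt,
    # so the high reading gives the smallest Wh figure.
    smallest = wh_per_prompt(high)
    largest = wh_per_prompt(low)
    print(f"{name}: {smallest:.2f} to {largest:.2f} Wh per prompt")
```

Read this way, a position near 10,000 prompts per kWh corresponds to roughly a tenth of a watt-hour per prompt, while 1,000 prompts per kWh corresponds to a full watt-hour.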

⬤ Misleading claims about AI "draining aquifers" and "boiling oceans" keep circulating because media outlets rely on old research. Dramatic global warnings look overblown at the per-prompt level, but real concerns do exist. They are local, tied to where datacenters sit and how regional grids handle power, rather than planetary-scale threats. Google's data suggests modern systems like Gemini need far fewer resources than headlines claim.

⬤ The efficiency breakthrough could reshape conversations around sustainability, datacenter planning, and long-term costs across the AI industry. For GOOGL, proving such major resource reductions helps strengthen its market position as efficiency becomes a bigger factor in large-scale AI rollouts.

Saad Ullah
