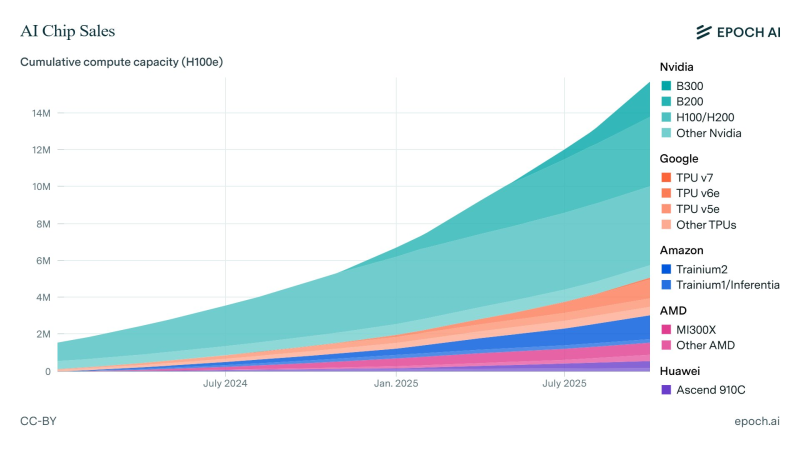

⬤ Global AI compute capacity just crossed a major milestone: over 15 million H100-equivalent chips, based on fresh data from Epoch AI. Their AI Chip Sales data explorer tracks total compute power across big players like Nvidia, Google, Amazon, AMD, and Huawei, giving us the clearest picture yet of how AI hardware is spread across the world.

⬤ The numbers show compute growth accelerating from mid-2024 into 2025. Nvidia's hardware (mainly H100, H200, and newer chips) makes up the largest share of that capacity. The gap between Nvidia and everyone else keeps widening as more companies deploy its accelerators for AI training and inference workloads.

⬤ Other chip makers are ramping up too. Google's TPUs, Amazon's Trainium and Inferentia, AMD's MI300 lineup, and Huawei's Ascend chips are all adding meaningful capacity. They're still smaller in H100-equivalent terms, but Epoch AI's standardized metric lets us compare these different architectures head-to-head.
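
To make the idea of an H100-equivalent concrete, here is a minimal sketch of how such a normalization could work. It assumes each chip type is assigned a single performance ratio relative to one H100; Epoch AI's actual methodology is not described here and may differ. The chip names, unit counts, and ratios are hypothetical placeholders, not real sales or benchmark figures.

```python
def h100_equivalents(chip_count: int, relative_performance: float) -> float:
    """Convert a chip count into H100-equivalents.

    relative_performance is the assumed throughput of one chip
    divided by the throughput of one H100 (1.0 means parity).
    """
    if chip_count < 0 or relative_performance < 0:
        raise ValueError("chip_count and relative_performance must be non-negative")
    return chip_count * relative_performance


# Hypothetical fleet: (units installed, performance relative to one H100).
# These numbers are illustrative only and do not come from Epoch AI.
fleet = {
    "chip_a": (1_000_000, 1.0),
    "chip_b": (2_000_000, 0.5),
    "chip_c": (400_000, 0.25),
}

# Normalize every chip type to the same unit, then sum.
totals = {name: h100_equivalents(count, ratio) for name, (count, ratio) in fleet.items()}
grand_total = sum(totals.values())

for name, value in totals.items():
    print(f"{name}: {value:,.0f} H100-equivalents")
print(f"total: {grand_total:,.0f} H100-equivalents")
```

Under these made-up inputs, two million units of a chip half as fast as an H100 count the same as one million H100s, which is why a vendor can ship many chips and still look small in H100-equivalent terms.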

⬤ Hitting 15 million H100-equivalents shows just how fast AI infrastructure is scaling worldwide. Demand for large model training and deployment keeps climbing, and the data reflects massive capital flowing into data centers and AI-optimized hardware across cloud providers and enterprises.

Saad Ullah
