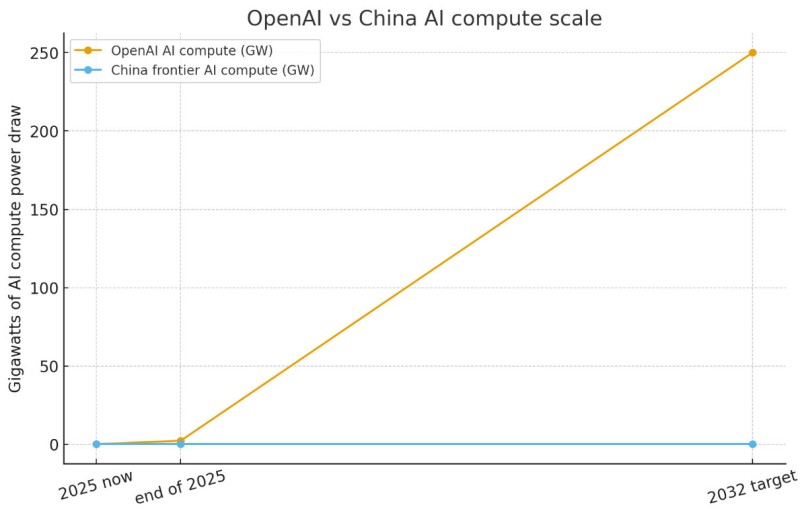

● A recent post by Chris revealed OpenAI's ambitious plan to build around 250 gigawatts (GW) of AI compute capacity by 2032. The numbers are staggering: that's about 865 times China's current frontier AI compute, which sits at just 0.289 GW.

● China aims to reach 300 EFLOP/s by 2025 and to double its data center electricity to 76 GW, but it hasn't published exact figures for dedicated AI training compute. Even so, the gap is already massive.

● This kind of expansion would demand enormous energy resources, advanced chip supply chains, and a complete rethink of energy policy for AI. Experts worry about potential risks: supply bottlenecks, energy strain, and too much compute power concentrated in one company's hands.

● The financial stakes are just as huge. Building this infrastructure could require trillions in investment, potentially reshaping global energy and semiconductor markets. Some analysts expect policymakers to respond with new tax frameworks or green incentives to handle the environmental impact.
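
For anyone who wants to check the headline comparison, here is a minimal sketch of the arithmetic. It uses only the two gigawatt figures quoted in the post; the variable and function names are illustrative, and the inputs are the post's numbers rather than independently verified data.

```python
# Figures as quoted in the post, in gigawatts (GW). Not independently verified.
OPENAI_TARGET_GW = 250.0     # planned AI compute capacity by 2032
CHINA_FRONTIER_GW = 0.289    # current frontier AI compute in China


def capacity_ratio(numerator_gw: float, denominator_gw: float) -> float:
    """Return how many times larger the first capacity is than the second."""
    if denominator_gw <= 0:
        raise ValueError("denominator must be positive")
    return numerator_gw / denominator_gw


ratio = capacity_ratio(OPENAI_TARGET_GW, CHINA_FRONTIER_GW)
print(f"Planned capacity is about {ratio:.0f} times the quoted China figure")
```

The result rounds to 865, which matches the multiple quoted above.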

Eseandre Mordi
