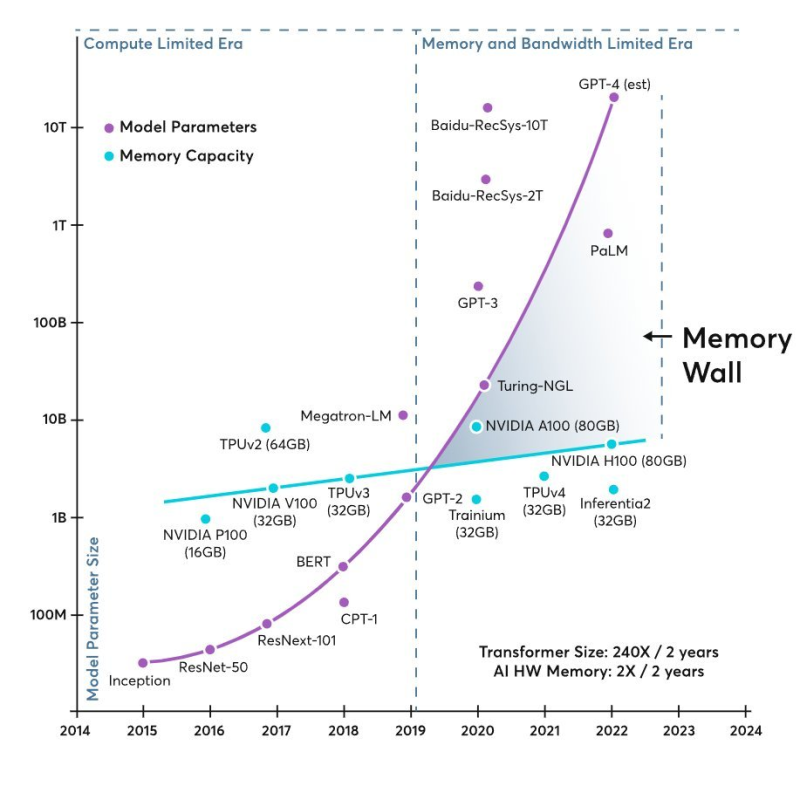

⬤ AI models are running into a serious hardware problem: they are growing far faster than memory systems can keep up with. Between 2018 and 2025, transformer model sizes grew roughly 19-fold every two years, while memory per chip grew only about 1.9-fold over the same interval. That gap compounds with every hardware generation. What used to be a compute problem (not enough processing power) has become a memory and bandwidth problem. The bottleneck is no longer how fast chips can calculate; it is how quickly data can be fed to those calculations.
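
To get a feel for how quickly that gap compounds, here is a minimal sketch of the arithmetic. It assumes both growth figures are per two-year period and hold steadily from 2018 to 2025; the function and variable names are illustrative, not from any library.

```python
def compound_growth(factor_per_period: float, years: float, period_years: float = 2.0) -> float:
    """Total growth after `years` if a quantity multiplies by
    `factor_per_period` once every `period_years`."""
    return factor_per_period ** (years / period_years)


MODEL_GROWTH = 19.0    # from the text: model size growth per two years
MEMORY_GROWTH = 1.9    # from the text; ASSUMED here to also be per two years
YEARS = 2025 - 2018    # 7 years, i.e. 3.5 two-year periods

model_total = compound_growth(MODEL_GROWTH, YEARS)
memory_total = compound_growth(MEMORY_GROWTH, YEARS)
gap = model_total / memory_total  # how much faster models grew than per-chip memory

print(f"model size grew   ~{model_total:,.0f}x")
print(f"memory per chip   ~{memory_total:,.1f}x")
print(f"cumulative gap    ~{gap:,.0f}x")
```

Under these assumptions the gap widens by a factor of 10 every two years, which works out to a cumulative mismatch of roughly 3,000-fold over the seven-year span.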

⬤ The numbers paint a stark picture. Over the last 20 years, compute performance rose around 60,000-fold. Memory bandwidth? Only about 100-fold. Interconnect bandwidth between chips? Just 30-fold. The result is what engineers call the "memory wall": processors sit idle, waiting for data to arrive instead of crunching numbers. It is especially brutal for large language model inference, which spends most of its time moving weights and cached data through memory rather than doing heavy math on data that is already in place.
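
A rough way to see why inference is hit so hard is a roofline-style estimate: attainable throughput is the smaller of peak compute and memory bandwidth times arithmetic intensity (FLOPs performed per byte moved). The sketch below uses a hypothetical accelerator; the peak and bandwidth values are illustrative assumptions, not the specs of any real chip. It also simplifies a decoding step to "read every 16-bit weight once, do one multiply-add per weight per sequence in the batch", ignoring key-value cache and activation traffic.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float,
                     intensity_flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic,
    whichever limit is lower."""
    return min(peak_flops, bandwidth_bytes_per_s * intensity_flops_per_byte)


# Hypothetical accelerator (illustrative numbers only).
PEAK_FLOPS = 1.0e15   # 1,000 TFLOP/s of peak compute
BANDWIDTH = 3.0e12    # 3 TB/s of memory bandwidth


def decode_intensity(batch_size: int, bytes_per_weight: int = 2) -> float:
    """FLOPs per byte for one decoding step under the simplification above:
    each weight is read once and used in one multiply-add (2 FLOPs)
    for every sequence in the batch."""
    return 2.0 * batch_size / bytes_per_weight


def utilization(batch_size: int) -> float:
    """Fraction of peak compute that the roofline model says is reachable."""
    achieved = attainable_flops(PEAK_FLOPS, BANDWIDTH, decode_intensity(batch_size))
    return achieved / PEAK_FLOPS


for batch in (1, 8, 64, 512):
    print(f"batch {batch:>3}: {utilization(batch):.1%} of peak compute")
```

With these made-up numbers, a single-sequence decoding step can use well under 1% of the chip's peak compute. Only large batches push the workload past the point where compute, rather than bandwidth, becomes the limit.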

⬤ Training these models is even messier. A big model doesn't just need space for its weights; it also needs room for activations, optimizer states, and all the temporary data generated during learning. In practice, training can eat up three to four times more memory than the model size suggests. When not everything fits on one device, weights, activations, and key-value caches have to be moved between chips constantly, and that data movement becomes the slowest part of the whole process. Even when a model technically fits in memory, bandwidth limits keep the compute units from running at full throttle.
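
One plausible way to arrive at a multiple in that range is to count the tensors that must live alongside the weights. The sketch below is an assumption-laden estimate, not a description of any particular framework: it assumes everything is stored in 32-bit floats, that gradients take as much space as the weights, and that the optimizer keeps one extra copy per parameter (momentum) or two (Adam-style). Activations are left out because they depend on batch size and sequence length.

```python
from dataclasses import dataclass


@dataclass
class TrainingMemory:
    weights_gb: float
    gradients_gb: float
    optimizer_gb: float

    @property
    def total_gb(self) -> float:
        return self.weights_gb + self.gradients_gb + self.optimizer_gb

    @property
    def multiple_of_weights(self) -> float:
        """How many times larger the training footprint is than the weights alone."""
        return self.total_gb / self.weights_gb


def estimate_training_memory(num_params: float, bytes_per_value: int = 4,
                             optimizer_slots: int = 2) -> TrainingMemory:
    """Estimate memory for weights, gradients and optimizer state.

    ASSUMPTIONS: uniform precision (`bytes_per_value` per number), one gradient
    per parameter, and `optimizer_slots` extra values per parameter
    (1 for momentum SGD, 2 for Adam-style optimizers). Activations excluded.
    """
    per_tensor_gb = num_params * bytes_per_value / 1e9
    return TrainingMemory(
        weights_gb=per_tensor_gb,
        gradients_gb=per_tensor_gb,
        optimizer_gb=optimizer_slots * per_tensor_gb,
    )


# Hypothetical 7-billion-parameter model.
for name, slots in (("momentum SGD", 1), ("Adam-style", 2)):
    est = estimate_training_memory(7e9, optimizer_slots=slots)
    print(f"{name:>12}: {est.total_gb:.0f} GB total, "
          f"{est.multiple_of_weights:.0f}x the weights")
```

Under these assumptions the footprint is three to four times the weights before a single activation is stored, which is why a model that fits comfortably for inference may still have to be split across devices for training.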

⬤ This isn't just a technical headache—it's reshaping the entire economics of AI. Memory movement and bandwidth now control both how fast models run and how much they cost to operate. Throwing more raw compute power at the problem doesn't help much anymore. The memory wall is now the defining challenge for scaling AI further. How the industry tackles memory technology, bandwidth improvements, and system design will determine whether generative AI can keep advancing at anything close to its current pace—and whether it becomes more affordable or prohibitively expensive to run at scale.

Alex Dudov
