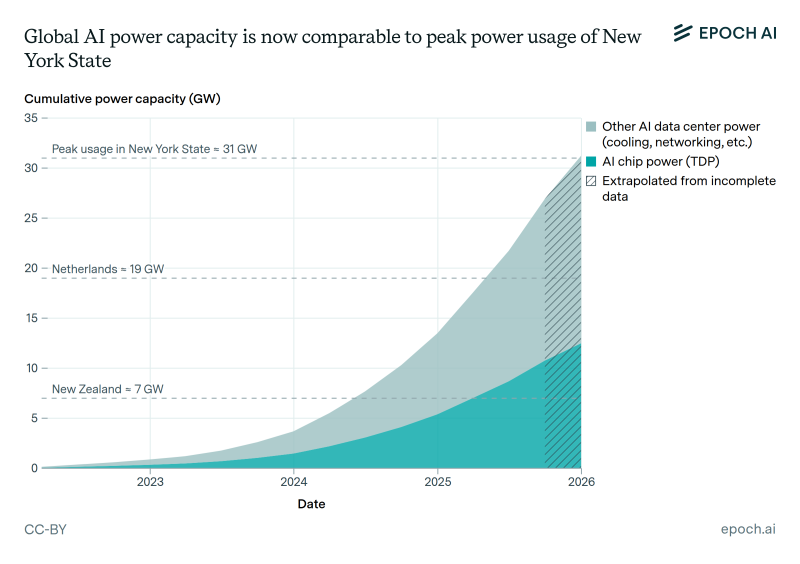

⬤ AI data centers worldwide have reached a major energy milestone: their combined power capacity now rivals the peak electricity demand of an entire U.S. state. Recent findings from Epoch AI put total AI data center capacity at approximately 30 gigawatts (GW), just under New York State's peak demand of 31 GW during extreme summer heat.

⬤ The data tracks cumulative global AI power capacity from 2022 through projected 2026 levels and splits it into two categories: the AI chips themselves, and everything else needed to keep data centers running, such as cooling systems, networking equipment, and supporting infrastructure. The processors doing the actual AI work draw a large share of the electricity, but the auxiliary systems add substantially to the total, so AI's energy footprint extends well beyond the chips.

⬤ To put these numbers in perspective, current AI data center capacity already dwarfs New Zealand's entire peak electricity demand of roughly 7 GW and exceeds the Netherlands' approximately 19 GW. This positions global AI infrastructure among the world's most concentrated electricity consumers, reflecting just how rapidly this technology is scaling up.
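For readers who want to check the comparison themselves, here is a minimal sketch of the arithmetic. It uses only the approximate figures quoted in this article; the variable and function names are illustrative assumptions, not anything published by Epoch AI.

```python
# Approximate figures quoted in the article, in gigawatts (GW).
AI_CAPACITY_GW = 30.0
PEAK_DEMAND_GW = {
    "New York State": 31.0,
    "Netherlands": 19.0,
    "New Zealand": 7.0,
}


def capacity_ratios(ai_gw, peaks):
    """Return AI capacity as a multiple of each region's peak demand."""
    return {region: ai_gw / peak for region, peak in peaks.items()}


ratios = capacity_ratios(AI_CAPACITY_GW, PEAK_DEMAND_GW)
for region, ratio in ratios.items():
    print(f"{region}: {ratio:.2f}x peak demand")
```

On these rounded inputs, AI capacity comes to roughly 0.97 times New York State's peak, about 1.6 times the Netherlands' peak, and about 4.3 times New Zealand's. Note that this compares installed capacity against peak demand, so it is a rough scale check and not a measure of energy actually consumed.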

⬤ Some of the latest capacity figures are extrapolated because complete data isn't yet available, so the actual totals could shift as new facilities launch and existing ones expand. What's clear is that electricity access and grid infrastructure are becoming critical bottlenecks as AI deployment accelerates. The scale revealed by Epoch AI makes one thing obvious: AI data centers are now a massive and rapidly growing share of worldwide power demand, with serious implications for energy planning and infrastructure development.

Alex Dudov
