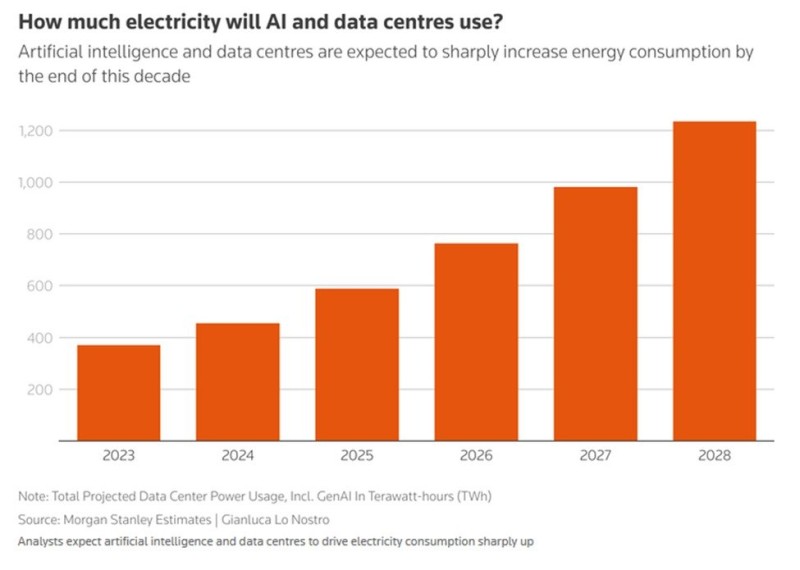

⬤ AI and data centers are gobbling up electricity at an unprecedented rate, and it's only going to get worse. Power consumption is expected to more than double by 2028, according to Morgan Stanley estimates. The projections show total electricity usage, including generative AI workloads, climbing relentlessly every year from 2023 through 2028.

⬤ The numbers are staggering: total data center power usage is projected to jump by roughly 650 terawatt-hours (TWh) between 2025 and 2028, more than doubling from about 580 TWh to approximately 1,230 TWh. This isn't a temporary blip. It's a sustained surge driven by the massive rollout of AI systems across cloud and enterprise infrastructure.

⬤ Data center electricity consumption, including AI workloads, has already risen by about 200 TWh since 2023, an increase of roughly 55%, to an estimated 580 TWh, an all-time high. If current trends hold, data center electricity demand could triple within five years. Data centers now account for roughly 5% of total US electricity demand, a record share that highlights how deeply AI infrastructure is reshaping national power systems.

⬤ This matters because energy availability is now a make-or-break factor for AI's future. The surge in electricity demand is putting serious pressure on power generation capacity, grid reliability, and infrastructure planning. As AI continues spreading across industries, energy supply constraints and costs will increasingly determine how fast, where, and how sustainably large-scale AI can actually grow.

Sergey Diakov