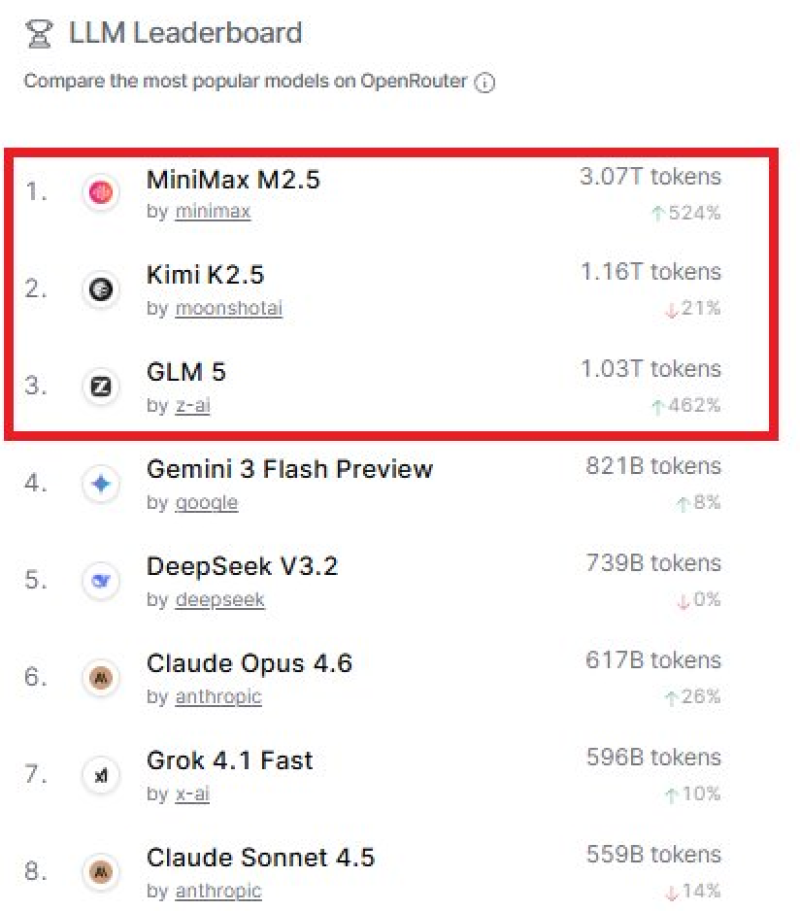

⬤ MiniMax M2.5 has climbed to the top of the LLM leaderboard by total tokens generated, outpacing every other listed model this week with roughly 3.07 trillion tokens. As one analyst noted, the market is clearly moving its attention away from lab benchmarks and toward actual deployment volume. That's a notable shift from earlier coverage such as "MiniMax releases M2.5 open-source model with 80.2% SWE-bench score," which centered almost entirely on performance metrics.

⬤ Kimi K2.5 sits second on the chart at around 1.16 trillion tokens, with GLM 5 close behind at 1.03 trillion. Claude Opus 4.6 leads SWE-rebench with a score of 51.7%, yet in terms of live token volume it lands further down the list at approximately 617 billion tokens generated. Benchmark headlines and real deployment numbers are telling very different stories right now.

⬤ The argument behind that observation is straightforward: traditional evaluation scores no longer reliably signal who is actually winning in production. That point connects to broader strategic moves across the ecosystem, including Google DeepMind bringing in Hume AI leadership through a licensing deal, which points to a world where applied deployment scale matters more than isolated lab rankings.

⬤ If token generation volume keeps defining competitive standing, the models leading in live utilization could shape future pricing structures and commercial momentum across the sector. The era of benchmark-first positioning looks increasingly fragile.

Eseandre Mordi
