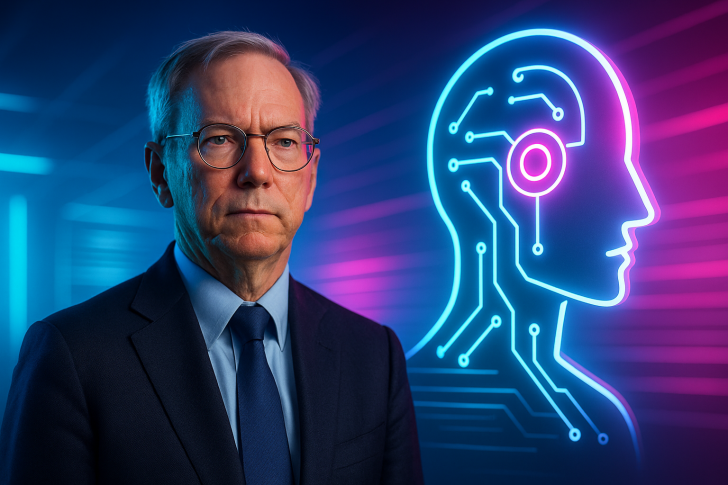

⬤ Tech giants are pushing forward with AI development as debates heat up over how these systems affect society. Former Google CEO Eric Schmidt recently made clear that AI's ultimate test is simple: does it respect human needs and individual freedom? He argues that AI systems capable of recognizing real emergencies and flexibly adjusting rules to help people will earn public trust far more easily than those that don't.

⬤ Schmidt stressed that AI models should expand human freedom, not limit it. Core values like freedom of thought, movement, and assembly must stay protected as AI weaves deeper into everyday life and business operations. His stance echoes growing industry discussions about responsible development, transparency, and making sure automated decisions don't trample on personal rights or autonomy.

⬤ These comments arrive as companies pour billions into next-gen AI powering everything from virtual assistants to cloud platforms. But as the technology embeds itself in critical systems, worries about surveillance, overreach, and government abuse are mounting. Schmidt warned that if AI, or the institutions deploying it, is used to reduce freedom, fierce public pushback should be expected. He didn't mince words: he'd "join that fight" himself if AI became a tool for widespread control.

⬤ This adds fresh context to current market and policy debates around AI adoption. While companies race to commercialize stronger models, how these systems impact civil liberties will increasingly shape regulation, consumer attitudes, and global competition. Schmidt's message is clear: lasting progress in AI depends not just on raw capability but on alignment with democratic principles and genuine social acceptance.

Saad Ullah
