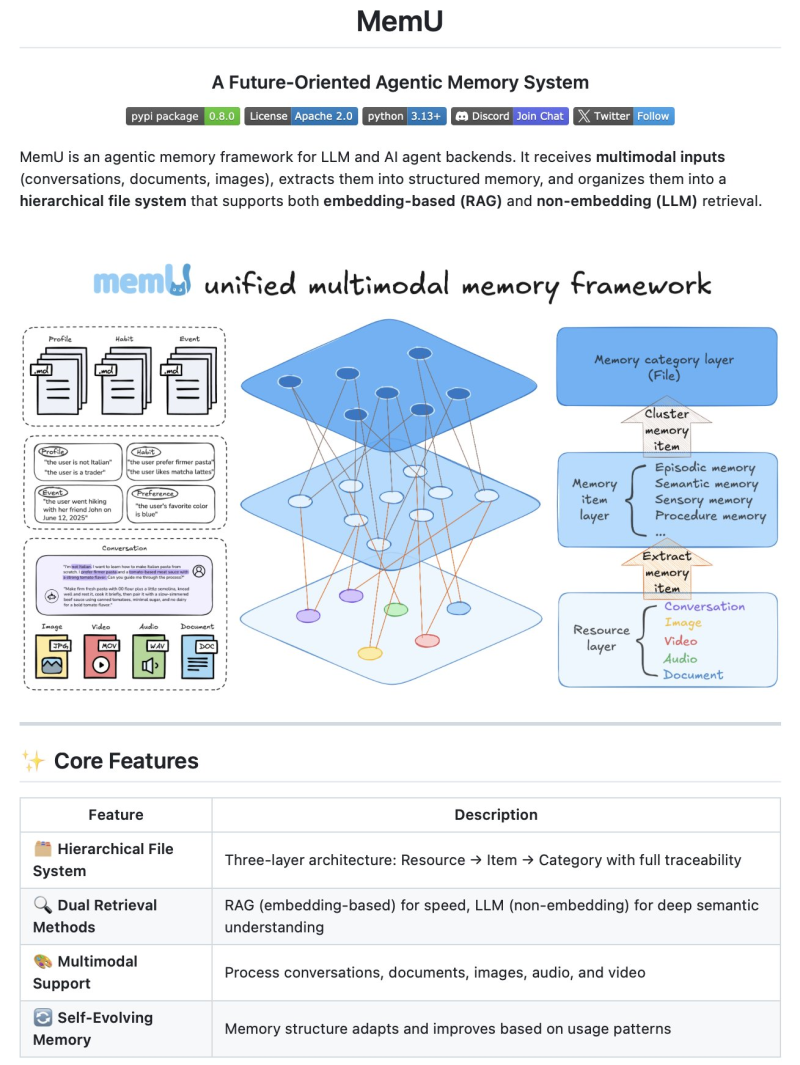

⬤ MemU has just been released as an open-source memory framework that rethinks how AI agents handle long-term information. Instead of stuffing everything into the context window or relying purely on vector search, it builds a structured memory that an AI model can actually read and reason over. The platform stores conversations, documents, images, and audio in their original formats, then extracts key "memory items" and groups them into clean Markdown files organized by theme.
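To make theme-organized Markdown memory concrete, here is a minimal Python sketch. It is not MemU's API: the `Resource` and `MemoryItem` types and the `group_into_markdown` function are hypothetical, and the memory items are written by hand where a real system would extract them automatically. It shows each item keeping a pointer back to its raw source while being rendered into one Markdown file per category.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Resource:
    """Raw content kept in its original form (hypothetical type)."""
    resource_id: str
    modality: str  # e.g. "conversation", "document", "image", "audio"
    content: str


@dataclass
class MemoryItem:
    """A short fact extracted from a resource, tagged with a theme (hypothetical type)."""
    text: str
    category: str
    source_id: str  # links the item back to the Resource it came from


def group_into_markdown(items: list[MemoryItem]) -> dict[str, str]:
    """Render one Markdown file per category, listing each item with its source."""
    by_category: dict[str, list[MemoryItem]] = defaultdict(list)
    for item in items:
        by_category[item.category].append(item)
    files: dict[str, str] = {}
    for category in sorted(by_category):
        lines = [f"# {category}", ""]
        lines += [f"- {it.text} (source: {it.source_id})" for it in by_category[category]]
        files[f"{category}.md"] = "\n".join(lines) + "\n"
    return files


# Layer 1: raw resource. Layer 2: memory items (hand-written here). Layer 3: category files.
chat = Resource("conv-001", "conversation", "User: I'm vegetarian and I live in Berlin.")
items = [
    MemoryItem("User is vegetarian", "preferences", chat.resource_id),
    MemoryItem("User lives in Berlin", "profile", chat.resource_id),
]
files = group_into_markdown(items)
print(files["preferences.md"])
```

Because every line in a category file carries its `source_id`, a reader (human or model) can walk from a theme back to the original conversation.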

⬤ The framework uses a three-layer hierarchy that keeps every memory traceable. Raw content is captured first, converted into memory items second, and sorted into category files third. This design lets AI models read memory as ordinary content rather than only querying an opaque database. MemU also supports dual-mode retrieval, combining embedding-based vector search (the approach behind most RAG pipelines) with LLM-driven methods that do not rely on embeddings. The combination is meant to help agents find what matters while reducing hallucinations over time.
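Dual-mode retrieval can be sketched as the union of two result sets. In this hypothetical Python example, a toy bag-of-words vector with cosine similarity stands in for a real embedding model, and `llm_select` is a caller-supplied function standing in for an LLM prompt that picks relevant memories. None of these names come from MemU; the point is only that the non-embedding path can surface memories that share no words with the query.

```python
import math
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def dual_mode_retrieve(
    query: str,
    items: list[str],
    llm_select: Callable[[str, list[str]], list[str]],
    k: int = 2,
) -> list[str]:
    """Merge top-k embedding matches with the items an LLM judges relevant."""
    q = embed(query)
    scored = sorted(((cosine(q, embed(it)), it) for it in items), key=lambda p: p[0], reverse=True)
    merged = [it for score, it in scored[:k] if score > 0]  # embedding path
    for it in llm_select(query, items):  # non-embedding path
        if it not in merged:
            merged.append(it)
    return merged


def fake_llm_select(query: str, items: list[str]) -> list[str]:
    # Stand-in for an LLM prompt; it "reasons" that concert suggestions need a location.
    return [it for it in items if "concert" in query and it.startswith("lives in")]


memories = ["prefers vegetarian meals", "lives in berlin", "enjoys jazz concerts"]
print(dual_mode_retrieve("find jazz concerts", memories, fake_llm_select))
# → ['enjoys jazz concerts', 'lives in berlin']
```

The vector path finds the jazz memory by word overlap, while the stand-in LLM path adds the user's city, which a concert suggestion needs even though the query never mentions a location.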

⬤ Built for multimodal use, MemU handles text, images, audio, and video across sessions. The memory structure evolves automatically as the agent figures out what's important. Content that gets accessed frequently moves up in priority and gets reorganized without manual intervention. This self-evolving approach means developers don't have to hardcode what information matters most.
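Frequency-driven reprioritization can be illustrated with a simple counter. The `AccessTracker` class below is a hypothetical sketch, not MemU code: it counts how often each memory item is retrieved and splits items into "hot" and "cold" tiers using an assumed `hot_threshold`. A real system might also decay old counts so that priorities keep shifting.

```python
from collections import Counter


class AccessTracker:
    """Hypothetical sketch: reprioritize memory items by how often they are retrieved."""

    def __init__(self, items: list[str]) -> None:
        self.items = list(items)
        self.hits: Counter = Counter()

    def record_access(self, item: str) -> None:
        self.hits[item] += 1

    def reorganize(self, hot_threshold: int = 2) -> dict[str, list[str]]:
        """Order items by access count (most used first) and split them into tiers."""
        ordered = sorted(self.items, key=lambda it: self.hits[it], reverse=True)
        return {
            "hot": [it for it in ordered if self.hits[it] >= hot_threshold],
            "cold": [it for it in ordered if self.hits[it] < hot_threshold],
        }


tracker = AccessTracker(["lives in berlin", "enjoys jazz", "is vegetarian"])
for item in ["is vegetarian", "is vegetarian", "enjoys jazz"]:
    tracker.record_access(item)
print(tracker.reorganize())
# → {'hot': ['is vegetarian'], 'cold': ['enjoys jazz', 'lives in berlin']}
```

No item is ranked by hand here: the ordering comes entirely from observed usage, which is the property the paragraph above describes.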

⬤ Unlike most frameworks, which treat memory as a searchable index, MemU makes it something the model can read and reason over directly. The architecture supports top-down retrieval with fallback options and is designed to scale as memory grows. Because it is fully open source and lightweight, it is an accessible option for teams building AI agents that need persistent, interpretable memory.
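One way to read "top-down retrieval with fallback" is as a cascade over the three layers. The hypothetical `top_down_retrieve` function below checks category files first, falls back to individual memory items, and only then scans raw resources. It uses plain word matching as an assumed stand-in for whatever matching MemU actually performs.

```python
def top_down_retrieve(
    query: str,
    categories: dict[str, list[str]],
    raw_resources: list[str],
) -> tuple[str, list[str]]:
    """Search category files, then memory items, then raw resources; stop at the first hit."""
    terms = set(query.lower().split())

    def overlaps(text: str) -> bool:
        return bool(terms & set(text.lower().split()))

    # Level 1: any category named in the query is read as a whole.
    by_category = [it for name, its in categories.items() if name in terms for it in its]
    if by_category:
        return "category", by_category
    # Level 2: fall back to matching individual items across all categories.
    by_item = [it for its in categories.values() for it in its if overlaps(it)]
    if by_item:
        return "item", by_item
    # Level 3: fall back to the raw resources themselves.
    return "raw", [r for r in raw_resources if overlaps(r)]


categories = {
    "preferences": ["prefers vegetarian meals", "enjoys jazz concerts"],
    "profile": ["lives in berlin"],
}
raw = ["transcript: we talked about a trip to lisbon next may"]
print(top_down_retrieve("list the user preferences", categories, raw))
print(top_down_retrieve("is berlin mentioned", categories, raw))
print(top_down_retrieve("any plans for lisbon", categories, raw))
```

Stopping at the first level that returns something keeps the compact, readable layers in front and only touches raw data when they miss.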

Sergey Diakov