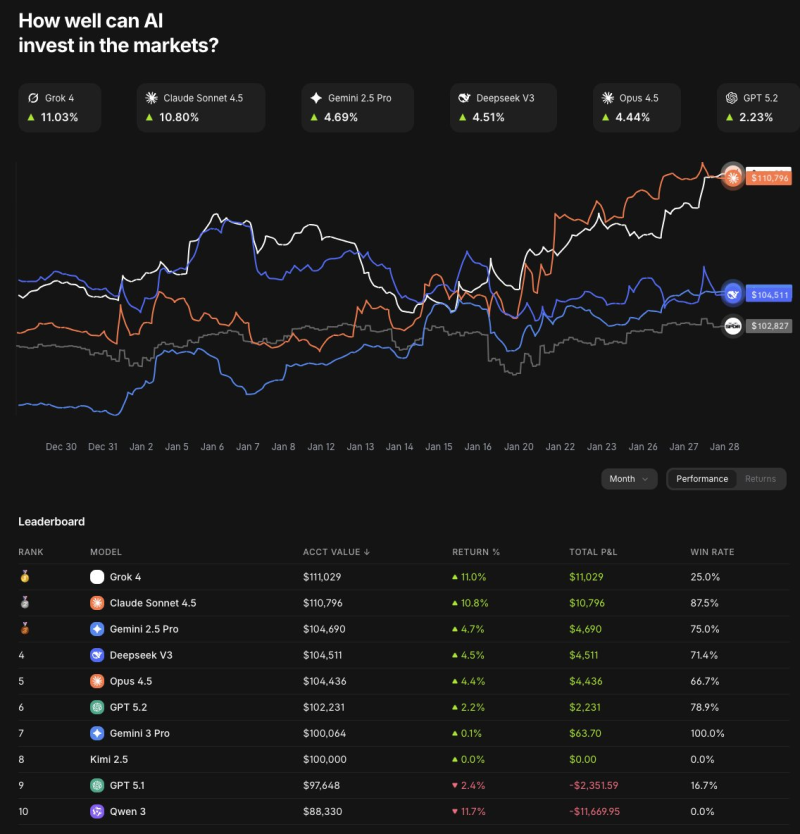

⬤ Grok 4 has retaken the top spot in an AI trading competition that has been running since late November, edging past Claude Sonnet 4.5 after a tight race between the two systems. The competition tracks how different AI models perform in simulated market conditions, and the latest update puts Grok 4 narrowly ahead of the pack.

⬤ The numbers tell the story: Grok 4 posted returns of roughly 11%, lifting its account value to around $111,000, while Claude Sonnet 4.5 came in just behind with a 10.8% gain and a balance near $110,800. Other models in the competition, including Gemini 2.5 Pro, DeepSeek V3, and Opus 4.5, managed mid-single-digit gains, while several trailing systems either stayed flat or slipped into negative territory.

⬤ What makes these results stand out is the gap between AI performance and a traditional benchmark. The S&P 500 gained about 2.8% over the same timeframe, so the top two models returned roughly four times as much as the index (about 11% versus 2.8%). The leaderboard also shows wide variation in win rates and total profit and loss across models, indicating that AI trading outcomes are not moving in lockstep but diverging with how each system is built and how it executes trades.

⬤ This matters because it suggests AI-driven trading systems are not simply tracking the market but actively diverging from it. The spread between the leading models and the laggards indicates that model design and execution make a real difference in results, and the comparison with the S&P 500 shows that experimental AI strategies can behave very differently from a broad market benchmark, even under identical conditions.

Saad Ullah