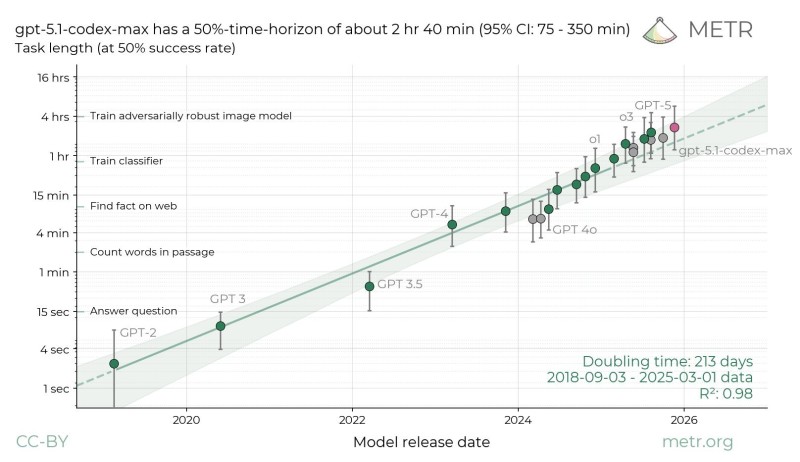

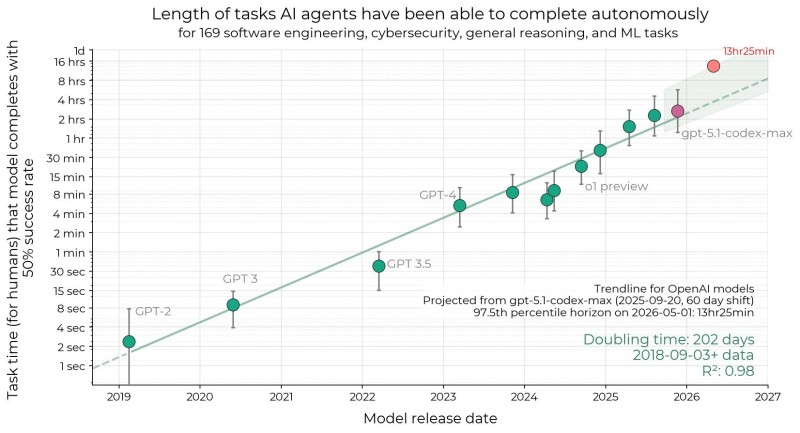

⬤ OpenAI's GPT-5.1-Codex-Max has broken new ground in autonomous AI performance, reaching a record time horizon of 2 hours and 42 minutes. Fresh data from METR places the model at the top of the organization's capability trendline, making this the longest autonomous-task duration METR has published. The figure is a 50% time horizon: the length of task, measured by how long it takes a human expert, that the model completes successfully about half the time. The confidence interval is wide, running from 75 to 350 minutes.
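To make the 50% time horizon concrete: METR's approach fits a logistic curve of a model's success probability against the logarithm of human task duration and reads off where that curve crosses 50%. The sketch below assumes a simple two-parameter form in log2 of task length; `alpha` and `beta` are hypothetical coefficients chosen for illustration, not METR's fitted values for any model.

```python
import math


def success_probability(minutes: float, alpha: float, beta: float) -> float:
    """Logistic success probability vs. log2 of human task length (hypothetical parameters)."""
    return 1.0 / (1.0 + math.exp(-(alpha - beta * math.log2(minutes))))


def fifty_percent_horizon(alpha: float, beta: float) -> float:
    """Task length in minutes at which the logistic curve crosses 50% success."""
    # p = 0.5 exactly when alpha - beta * log2(t) == 0, i.e. t = 2 ** (alpha / beta)
    return 2.0 ** (alpha / beta)


if __name__ == "__main__":
    alpha, beta = 6.0, 0.8  # made-up coefficients, for illustration only
    horizon = fifty_percent_horizon(alpha, beta)
    print(f"50% horizon: {horizon:.1f} minutes")
    # Shorter tasks succeed more often than 50%, longer ones less often
    for t in (30, horizon, 600):
        print(f"{t:7.1f} min -> p(success) = {success_probability(t, alpha, beta):.2f}")
```

Because the curve is fitted to task-level pass/fail data, a model's horizon can carry a wide uncertainty band, which is consistent with the broad 75 to 350 minute interval reported above.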

⬤ The new model outperforms its predecessors. On METR's timeline charts, GPT-5.1-Codex-Max sits well above earlier models such as GPT-5, GPT-4, and GPT-3.5, as well as the intermediate o-series models that cluster at shorter durations. Its lead holds across the software engineering, cybersecurity, reasoning, and machine learning tasks in METR's test suite. This marks a clear jump in how long AI systems can work independently on complex, multi-step problems.

⬤ METR's analysis reveals a consistent pattern: the time horizons of OpenAI models have been doubling roughly every 200 to 213 days since 2018. GPT-5.1-Codex-Max sits above the central trendline, at the upper end of what the trend predicts. The positive news? Even with another six months of similar progress, future systems would remain below METR's thresholds for loss-of-control concerns. The model pushes boundaries while staying within current safety frameworks, showing that AI can handle longer, more sophisticated workflows without crossing into risky territory.
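A quick back-of-the-envelope check shows what "another six months of similar progress" would mean. This sketch assumes clean exponential growth from the 2 h 42 min (162-minute) horizon, treats six months as 182 days, and tries both ends of the 200 to 213 day doubling range; it ignores the wide confidence interval on the starting point.

```python
def projected_horizon(current_minutes: float, days_ahead: float, doubling_days: float) -> float:
    """Extrapolate a time horizon assuming it doubles every `doubling_days` days."""
    return current_minutes * 2.0 ** (days_ahead / doubling_days)


if __name__ == "__main__":
    current = 162.0    # 2 h 42 min, the reported 50% horizon
    six_months = 182.0  # assumption: six months taken as 182 days
    for doubling in (200.0, 213.0):
        h = projected_horizon(current, six_months, doubling)
        print(f"doubling every {doubling:.0f} days -> {h:.0f} min ({h / 60:.1f} h)")
```

Under these assumptions the projection lands at roughly 290 to 305 minutes, or about five hours, still short of a full working day.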

Peter Smith