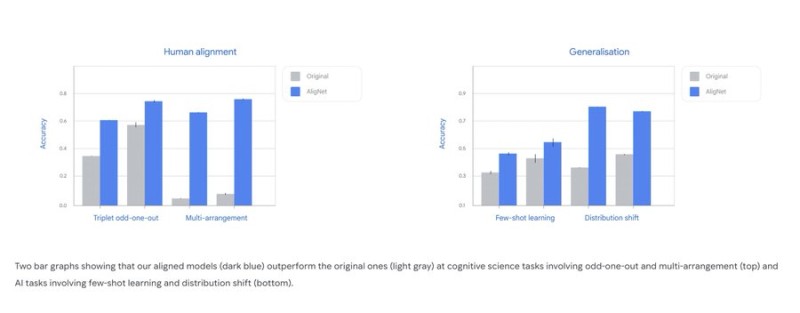

⬤ DeepMind's latest research shows that teaching vision models to group and interpret images the way people do makes them more intuitive and reliable. The goal is to build AI that "understands meaning, not just pixels." Bar charts comparing original models (light gray) with aligned versions (dark blue) reveal clear accuracy gains across multiple tests.

⬤ On human-alignment tasks, such as picking the odd one out from a set of images or arranging visuals by similarity, the aligned models consistently outperform their original counterparts. These benchmarks test whether a model organizes visual information in ways that match human judgment.
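To make the odd-one-out idea concrete, here is a minimal sketch of how such a task could be scored from image embeddings. It is an illustration under stated assumptions, not DeepMind's evaluation code: the function names, the toy vectors, and the use of cosine similarity are all hypothetical choices made for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def odd_one_out(embeddings):
    """Return the index of the item least similar to all the others.

    For a triplet this is the same as finding the most similar pair
    and returning the remaining item.
    """
    totals = []
    for i, emb in enumerate(embeddings):
        total = sum(
            cosine_similarity(emb, other)
            for j, other in enumerate(embeddings)
            if j != i
        )
        totals.append(total)
    return min(range(len(embeddings)), key=totals.__getitem__)


# Toy embeddings (made up for illustration): items 0 and 1 point in
# nearly the same direction, item 2 points elsewhere.
toy = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.0], [0.0, 0.1, 1.0]]
human_choice = 2  # hypothetical human label for this triplet
model_choice = odd_one_out(toy)
print(model_choice == human_choice)
```

Under this setup, a model's alignment score on the task would be the fraction of image sets where its choice matches the human one.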

⬤ The aligned models also do better on generalization tasks, beating the originals on few-shot learning and on robustness to distribution shift. In other words, they learn faster from limited examples and stay more reliable when the data looks different from what they were trained on, both signs of more robust performance across varied conditions.

Saad Ullah
