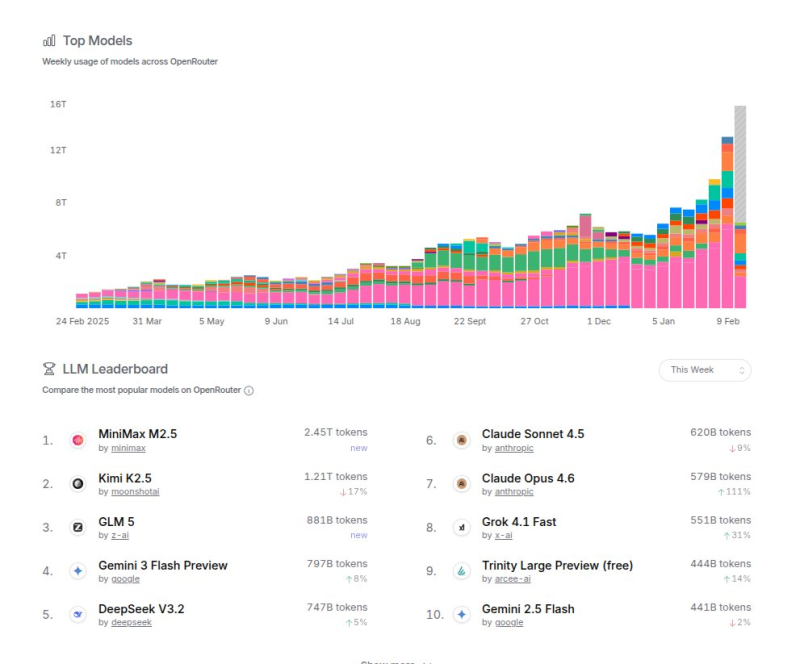

⬤ New OpenRouter usage data reveals significant concentration among Chinese AI models, as reported by Rohan Paul. Chinese systems collectively processed 5.3 trillion of the roughly 8.7 trillion tokens handled by the top 10 models, about 61% of top-10 usage. OpenRouter tracks how many text tokens users and applications send through each model, which makes it a measure of real-world adoption rather than just benchmark performance.

⬤ The leaderboard shows MiniMax M2.5 leading weekly usage with 2.45 trillion tokens, followed by Kimi K2.5 at 1.21 trillion and GLM 5 at 881 billion. Other major players include Gemini 3 Flash Preview, DeepSeek V3.2, Claude Sonnet 4.5, and Claude Opus 4.6. The weekly usage chart shows steady growth from late 2025 into early 2026, with total token volume accelerating sharply around February.

The figures show that the majority of activity on OpenRouter flows through Chinese-developed models.
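As a quick sanity check, the percentages can be recomputed from the reported figures. The minimal Python sketch below uses only the rounded numbers quoted in this article; the variable names and the `share` helper are illustrative and are not part of any OpenRouter API. It also shows that the three leading models alone account for just over half of top-10 tokens.

```python
# Figures as reported in the article, in trillions of tokens (rounded).
# These are illustrative inputs, not data pulled from OpenRouter.
TOP10_TOTAL_T = 8.7      # all top-10 models combined
CHINESE_TOTAL_T = 5.3    # Chinese models within the top 10

# The three leading models named on the leaderboard.
leaders_t = {
    "MiniMax M2.5": 2.45,
    "Kimi K2.5": 1.21,
    "GLM 5": 0.881,  # 881 billion tokens
}


def share(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100.0 * part / whole


chinese_share = share(CHINESE_TOTAL_T, TOP10_TOTAL_T)
leaders_sum = sum(leaders_t.values())
leaders_share = share(leaders_sum, TOP10_TOTAL_T)
# Tokens from Chinese top-10 models other than the three leaders.
other_chinese_t = CHINESE_TOTAL_T - leaders_sum

print(f"Chinese share of top-10 tokens: {chinese_share:.1f}%")
print(f"Top three combined: {leaders_sum:.3f}T ({leaders_share:.1f}%)")
print(f"Other Chinese models in the top 10: {other_chinese_t:.3f}T")
```

Because the inputs are rounded, the results are approximate: about 60.9% for the Chinese share and about 52.2% for the top three combined, with roughly 0.76 trillion tokens left for the other Chinese models in the top 10, whose individual figures are not listed here.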

⬤ With over half of top-10 tokens processed by systems such as MiniMax, Moonshot AI's Kimi series, and GLM models, the platform's usage distribution points to strong developer and application adoption. U.S.-based models such as Claude and Gemini remain in the top rankings but account for a smaller share of total token throughput. For additional context, see the related coverage on Kimi K2.5's OpenRouter usage rankings and the MiniMax M2.5 open-source model release.

⬤ Token share on infrastructure platforms has become a proxy for real deployment scale. High token concentration suggests growing integration into production applications, automated workflows, and AI-powered services. The OpenRouter data reflects not just popularity but where large-scale workloads routed through the platform are actually being executed, offering a window into the wider global AI ecosystem.

Saad Ullah