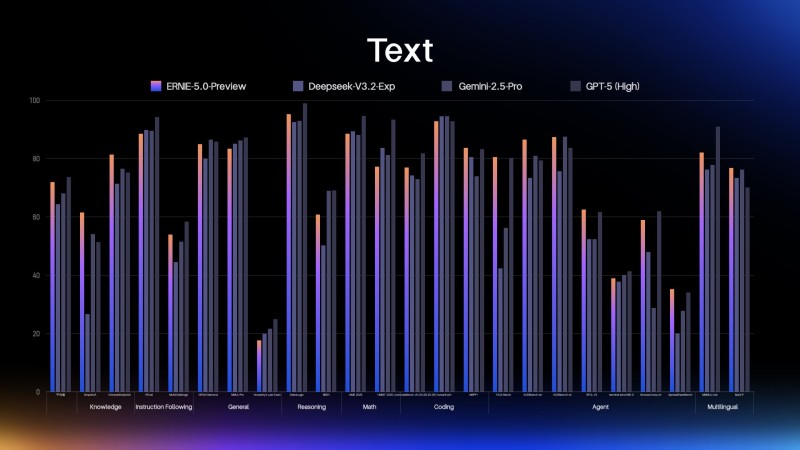

⬤ ERNIE 5.0 stands out as the largest publicly confirmed AI model, packing over 2.4 trillion parameters while keeping less than 3% (under 72B) active for any given token. Baidu CTO Wang Haifeng describes it as a "super-sparse MoE architecture" built for extreme efficiency at scale. The model's benchmark performance backs up these claims: ERNIE 5.0-Preview topped leaderboards across knowledge tasks, reasoning, math, coding, agent capabilities, and multilingual understanding. On paper, it represents a genuine engineering achievement that pushes the boundaries of what sparse architectures can accomplish.
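
⬤ As a quick sanity check, the "under 72B" figure follows directly from the two numbers quoted above. The arithmetic below uses only those stated figures (2.4 trillion total parameters, a 3% active fraction) and says nothing about how Baidu's router actually selects experts:

$$
0.03 \times \left(2.4 \times 10^{12}\right) = 7.2 \times 10^{10} = 72 \times 10^{9},
\qquad
\frac{2.4 \times 10^{12}}{72 \times 10^{9}} \approx 33
$$

In other words, each token touches roughly one-thirty-third of the model's weights, which is where the efficiency claim comes from.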

⬤ The model arrives as regulators begin eyeing massive AI systems with new scrutiny. Trillion-parameter models demand huge compute clusters, even with sparse activation. Proposed regulations, from energy surcharges to restrictions on hardware depreciation, could drive up operating costs and discourage frontier development. Smaller labs risk getting squeezed out entirely, while top talent may flee to friendlier jurisdictions. ERNIE 5.0 sits at the intersection of technical ambition and regulatory uncertainty, making it both a breakthrough and a potential policy flashpoint.

⬤ But impressive benchmarks tell only half the story. Real-world testing revealed serious flaws in how ERNIE 5.0 handles instructions. The model seems hampered by either weak reinforcement learning or Baidu's interface constraints, and possibly both. The most glaring failure came during tool-use testing: when explicitly instructed to output only SVG code without using any image generation tools, ERNIE 5.0 ignored the instruction and invoked the image generator anyway. Despite repeated, crystal-clear instructions, the model would not comply. This points to fundamental weaknesses in instruction following, safety alignment, and action control.

⬤ ERNIE 5.0 captures a familiar pattern in frontier AI development: spectacular benchmark numbers don't guarantee practical reliability. Baidu clearly has the engineering chops to build enormous, efficient systems, but the evidence suggests its reinforcement learning, interface logic, and tool governance still need serious work. As regulatory pressure mounts and trillion-parameter models draw global attention, ERNIE 5.0 serves as both a technical milestone and a reminder that lab performance rarely translates directly into real-world usability.

Eseandre Mordi