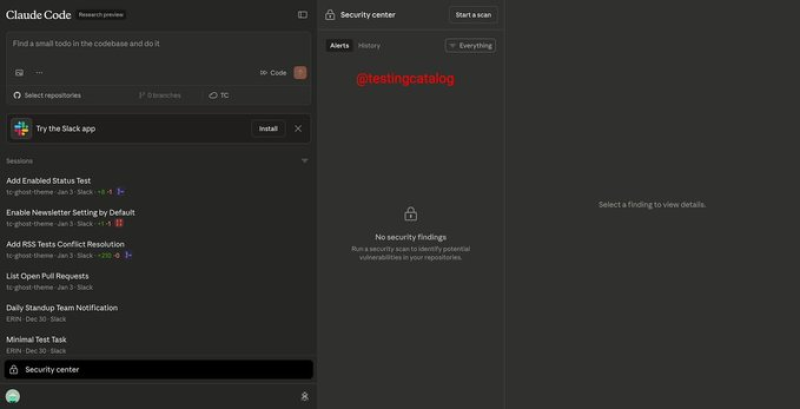

⬤ Anthropic is launching a Security Center for Claude Code, according to an early look at the interface. The new dashboard lets developers browse scan history, review security alerts, and trigger manual scans whenever needed, turning security into an ongoing part of the coding process rather than an afterthought.

⬤ The interface shows a clean layout with separate sections for active alerts and historical scans, giving teams centralized visibility into code vulnerabilities. Developers can now spot issues as they write, review past problems, and run fresh scans on demand. It's designed for teams that need continuous monitoring built right into their AI coding assistant rather than bolted on through separate tools.

⬤ The timing puts Anthropic in direct competition with OpenAI's evolving Codex platform and other AI development tools racing to add security features. While Claude Code started as a coding assistant, the Security Center transforms it into something closer to a complete development platform where safety checks happen automatically alongside code generation.

⬤ For the broader AI development landscape, this matters because integrated security is quickly becoming table stakes rather than a premium feature. Companies evaluating AI coding tools now expect built-in scanning, issue tracking, and manual control options as standard capabilities. As these platforms mature, the ones that treat security as core functionality rather than an add-on will likely win enterprise adoption.

Marina Lyubimova
