⬤ Alibaba has rolled out RTPurbo, an optimization for long-context language models that targets one of the field's costliest problems: processing lengthy documents without a matching explosion in compute. The technique comes from the company's RTP-LLM research team. It rests on a simple observation: when a model works through a long text, only a small subset of its attention heads actually need to see the full document. The rest perform just as well with smaller, local windows of context.

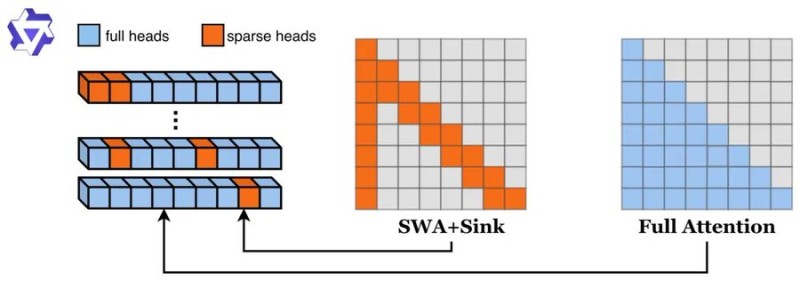

⬤ Here's how it works. Instead of forcing every attention head to attend over the entire context, which gets expensive quickly as inputs grow, RTPurbo identifies the roughly 15% of heads that matter for long-range understanding and gives only those heads full access. The remaining heads operate on much smaller context windows. According to Alibaba, this brings overall attention compute down to about the same 15% level while leaving model quality essentially unchanged.
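
To make the savings concrete, here is a rough back-of-envelope cost model for a "few full heads, many windowed heads" layout. It is a sketch under stated assumptions, not RTPurbo's implementation. The article does not describe how heads are selected or how large the local windows are. The sequence length, head count, number of full heads, and window size below are hypothetical values chosen only for illustration. The model counts only the query-key pairs scored under causal masking and ignores projections, feed-forward layers, and memory traffic.

```python
# Hypothetical cost model: count causal query-key pairs scored per layer when a
# few heads use full attention and the rest use a sliding window.
# Not RTPurbo's actual algorithm; all parameters are illustrative assumptions.


def full_causal_pairs(n: int) -> int:
    """Query-key pairs scored by one causal full-attention head over n tokens."""
    return n * (n + 1) // 2


def windowed_causal_pairs(n: int, window: int) -> int:
    """Pairs for one causal sliding-window head: token i sees min(i + 1, window) keys."""
    if n <= window:
        return full_causal_pairs(n)
    # The first `window` tokens see a growing prefix; every later token sees exactly `window` keys.
    return full_causal_pairs(window) + (n - window) * window


def attention_cost_ratio(n: int, num_heads: int, num_full_heads: int, window: int) -> float:
    """Cost of the mixed head layout relative to giving every head full attention."""
    local_heads = num_heads - num_full_heads
    mixed = num_full_heads * full_causal_pairs(n) + local_heads * windowed_causal_pairs(n, window)
    baseline = num_heads * full_causal_pairs(n)
    return mixed / baseline


if __name__ == "__main__":
    # Hypothetical setting: 128K tokens, 32 heads, 5 full heads (about 15%), 4K-token window.
    ratio = attention_cost_ratio(n=131_072, num_heads=32, num_full_heads=5, window=4_096)
    print(f"attention cost vs. full attention: {ratio:.1%} (~{1 / ratio:.1f}x reduction)")
```

In this toy model, the windowed heads still contribute some cost, so the total lands somewhat above the fraction of full heads. The gap narrows as the window shrinks relative to the sequence length, which is why the approach pays off most on very long inputs.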

⬤ Alibaba reports that RTPurbo achieves a 5x compression in attention compute compared with conventional full attention. On long-text benchmarks, it matches the performance of full-attention systems and outperforms other compression methods in efficiency. The appeal of the technique is its simplicity: rather than relying on complex workarounds, it concentrates compute where it actually makes a difference.

⬤ This matters because compute cost remains one of the biggest obstacles to deploying AI. For companies running AI services at scale, efficiency gains of this size could substantially change the economics. If Alibaba integrates RTPurbo across its platforms, it could handle much larger contexts without a proportional increase in hardware spending. That becomes more important as energy costs and capital-expenditure limits turn into harder constraints across the tech sector.

⬤ The broader takeaway: as AI capabilities push into longer documents, conversations, and datasets, architectural innovations like RTPurbo might prove just as important as raw computing power. Alibaba's approach suggests that smarter resource allocation, not just bigger data centers, will increasingly separate leaders from followers in the AI race.

Saad Ullah