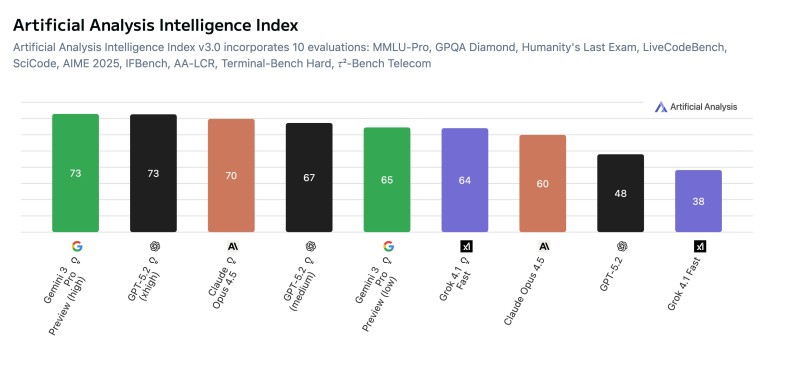

⬤ Artificial Analysis just dropped updated results for its Intelligence Index v3.0, which stacks up leading AI models across 10 different tests. The rankings show some interesting patterns, and they also highlight how confusing things are getting with all these model variants floating around. Right now, Gemini 3 Pro Preview (High) and GPT-5.2 Thinking X-High are tied at the top with a score of 73, while Claude Opus 4.5 comes in at 70 and GPT-5.2 Thinking Medium hits 67.

⬤ The benchmark pulls from a range of evaluations including MMLU-Pro, GPQA Diamond, Humanity's Last Exam, LiveCodeBench, SciCode, AIME 2025, IFBench, AA-LCR and Terminal-Bench Hard. What's really notable is how much scores can vary within the same model family. Take GPT-5.2, for example: while the Thinking X-High version ties for first place at 73, Thinking Medium drops to 67, and the regular non-Thinking version falls all the way to 48. Similarly, Gemini 3 Pro Preview (Low) scores 65, eight points below the High setting; Grok 4.1 Fast ranges from 64 down to 38 depending on configuration; and even Claude Opus 4.5 shows up twice, with scores of 70 and 60.

⬤ This fragmentation is making life harder for users trying to figure out which model to actually use. The problem gets worse when platform interfaces use different naming than what shows up in benchmark reports. What exactly does "Thinking X-High" mean when you're choosing between options like Light, Standard, Extended or Heavy? Claude Opus 4.5 keeps things comparatively simple, with just two listed configurations, and its stronger one still ranks near the top on both the intelligence index and the related agentic performance index.

⬤ Why this matters: how clearly benchmarks communicate performance directly shapes how people perceive and choose AI models in both business and everyday settings. As companies keep rolling out multiple versions and performance tiers, straightforward labeling and consistent messaging are becoming crucial factors in adoption rates, competitive dynamics and what people expect from AI capabilities going forward.

Peter Smith
