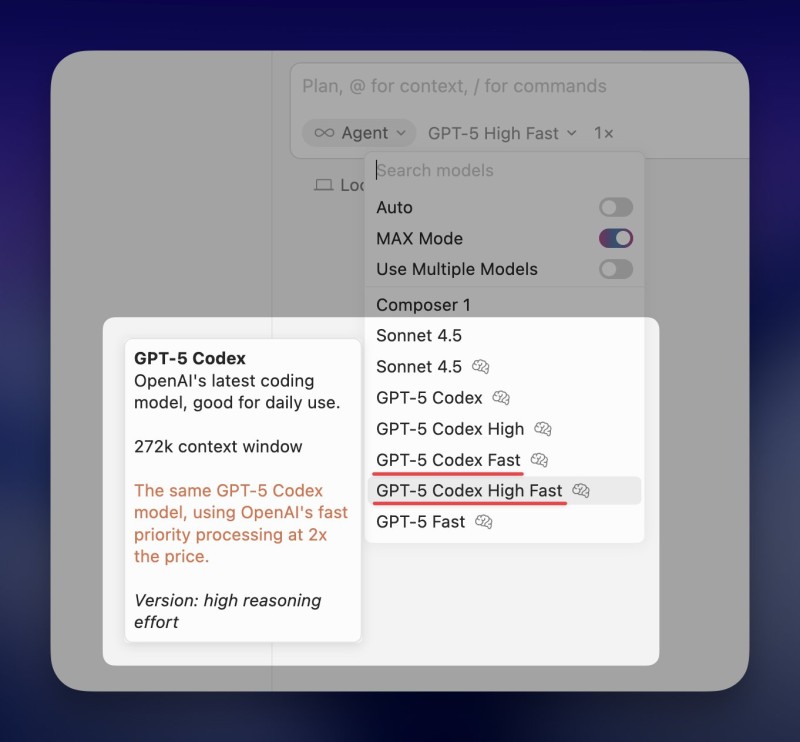

⬤ Cursor, the AI-powered coding platform, just launched two speed-focused versions of OpenAI's GPT-5 Codex: FAST and High FAST. These new models are designed for developers who'd rather finish faster than save a few bucks, cutting coding time dramatically on heavy projects.

⬤ Both models run on OpenAI's GPT-5 Codex with a massive 272k-token context window — one of the biggest available for code generation. The FAST tiers use OpenAI's priority processing, which doubles the speed at roughly double the price. For developers running large-scale builds, that trade-off makes sense.

⬤ The value proposition is straightforward: at double the speed, model work that would take an hour at the standard tier wraps up in roughly 30 minutes. For professional developers juggling iterative builds, debugging marathons, or continuous integration pipelines, time savings often matter more than token costs. When you're racing against deployment deadlines, paying extra to move faster is a no-brainer.

⬤ This release is part of Cursor's broader ambition to be more than just a coding assistant. With features like multi-model switching, extended context reasoning, and MAX Mode, the platform lets engineers dial in exactly the performance they need for each specific task.

⬤ The launch also signals where the AI coding market is heading: speed-tiered services that let teams decide their own balance between latency, reasoning power, and budget. It's a flexible approach that acknowledges different projects have different priorities.

⬤ The bottom line? For many developers, especially those working professionally, time really is more valuable than money. Cursor's new FAST models bet on that philosophy — and they're probably right.
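To make the trade-off concrete, here is a minimal sketch of the arithmetic behind "double the speed at roughly double the price." The function names, the hourly rate, and the token cost are hypothetical placeholders, not Cursor or OpenAI pricing. Only the 2x speed and 2x price ratios come from the description above, and they are treated as rough defaults.

```python
def fast_tier_net_saving(standard_minutes, standard_token_cost, hourly_rate,
                         speedup=2.0, price_multiplier=2.0):
    """Net value of switching to a faster tier, in the same currency as the inputs.

    All inputs are illustrative assumptions: the time the task takes on the
    standard tier, what its tokens cost there, and what an hour of the
    developer's time is worth. A positive result means the faster tier pays off.
    """
    fast_minutes = standard_minutes / speedup
    time_value_saved = (standard_minutes - fast_minutes) / 60 * hourly_rate
    extra_token_cost = standard_token_cost * (price_multiplier - 1)
    return time_value_saved - extra_token_cost


def break_even_hourly_rate(standard_minutes, standard_token_cost,
                           speedup=2.0, price_multiplier=2.0):
    """Hourly rate above which the faster tier is worth its extra cost."""
    hours_saved = standard_minutes * (1 - 1 / speedup) / 60
    extra_token_cost = standard_token_cost * (price_multiplier - 1)
    return extra_token_cost / hours_saved


# Hypothetical example: a one-hour task costing 5.00 in tokens,
# for a developer whose time is valued at 80.00 per hour.
print(fast_tier_net_saving(60, 5.00, 80.00))
print(break_even_hourly_rate(60, 5.00))
```

With these made-up numbers, the half hour saved is worth far more than the extra token spend. The balance tips the other way only when token costs are high relative to the value of the time saved.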

Usman Salis
