⬤ Black Forest Labs just dropped FLUX.2 Klein on Hugging Face, and it's the fastest model they've built yet. This thing handles both image creation and editing in one package, cranking out results in under a second while running on roughly 13GB of VRAM. That means you can actually use it on hardware you probably already own.

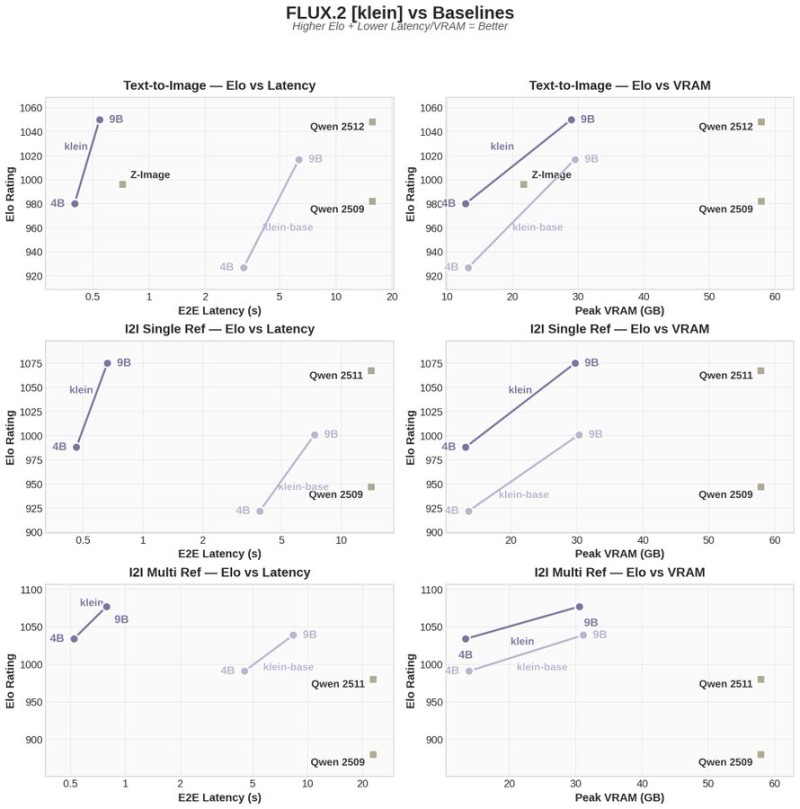

⬤ The benchmark data tells a pretty clear story. FLUX.2 Klein consistently beats competing models on speed while keeping quality high. Plot Elo rating against latency and it lands in the sweet spot: lower processing times without a drop in output quality. It's not just throwing more compute at the problem; it's doing more with less.

⬤ Here's where it gets interesting for most users: the VRAM requirements. At around 13GB, you can run this on an RTX 3090 or RTX 4070 without breaking a sweat. Compare that to other models demanding far more memory, and FLUX.2 Klein is pulling higher quality scores while using significantly fewer resources. Whether you're doing single-image or multi-reference work, it holds its own against models that need twice the hardware.
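
If you want to sanity-check your own card against that ~13GB figure, here is a minimal sketch. The helper name `has_vram_headroom`, the 1GB safety margin, and the choice to read "13GB" as 13 GiB are all assumptions made for illustration, not anything from the release. The PyTorch part is optional and only runs if PyTorch and a CUDA GPU are present.

```python
GIB = 1024 ** 3  # assumption: treat the article's "GB" as GiB


def has_vram_headroom(total_bytes: int,
                      required_gb: float = 13.0,
                      margin_gb: float = 1.0) -> bool:
    """Return True if a GPU with `total_bytes` of memory can hold
    `required_gb` plus a safety margin (hypothetical default: 1 GB)."""
    return total_bytes >= (required_gb + margin_gb) * GIB


if __name__ == "__main__":
    try:
        import torch  # optional; only needed to query a real GPU
    except ImportError:
        torch = None

    if torch is not None and torch.cuda.is_available():
        props = torch.cuda.get_device_properties(0)
        verdict = "enough" if has_vram_headroom(props.total_memory) else "not enough"
        print(f"{props.name}: {props.total_memory / GIB:.1f} GiB total, {verdict} headroom")
    else:
        print("No CUDA GPU detected (or PyTorch is not installed).")
```

Total memory is only a rough guide: whatever else is using the GPU, plus your resolution and batch size, will move the real number up or down.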

⬤ This release signals where generative AI is headed: smarter optimization instead of just bigger models. FLUX.2 Klein proves you don't need enterprise-level GPUs to get professional results. With an Apache 2.0 license backing it up, developers and researchers can build with it freely, which matters more than people realize in pushing the technology forward.

Saad Ullah
