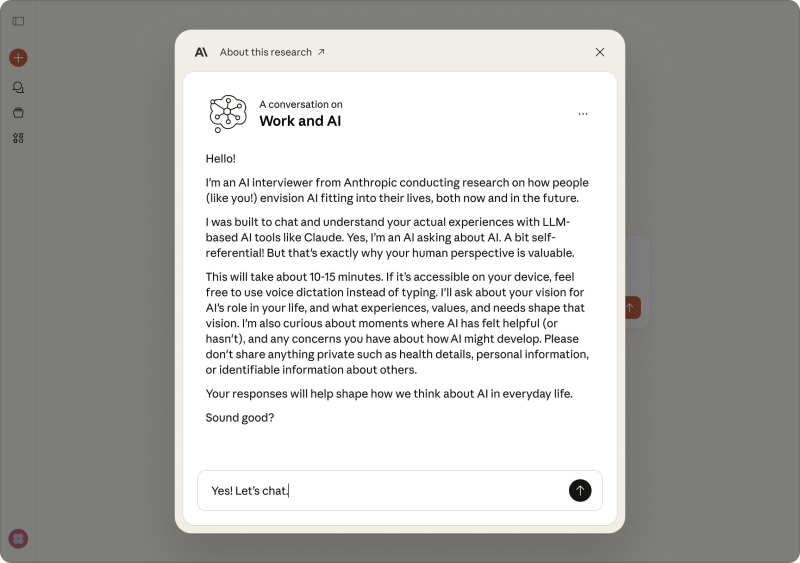

⬤ Anthropic rolled out an AI interviewer that digs into how people actually use AI systems on the job. The automated tool runs structured interviews exploring where AI adds value and where it falls short. The project offers a data-backed snapshot of how Anthropic's models are being adopted in real-world work.

⬤ The interviewer gathered responses from 1,250 workers spanning general workforce roles, creative positions, and scientific fields. Among general workers, 86% said AI saves them time and 65% felt satisfied overall. But there's a flip side: 69% feel social pressure around AI use, 55% experience anxiety, and 48% expect their roles to shift toward supervising AI rather than doing hands-on work. Workers said their AI use was 65% augmentation and 35% automation, but actual Claude chat logs told a different story: 47% augmentation and 49% automation. That gap suggests people don't always realize how much they rely on AI to complete entire tasks.

⬤ Creative professionals reported even stronger results, with 97% saying AI saves time and 68% seeing better output quality. Still, 70% report facing judgment from peers, pointing to ongoing debates about authenticity and creative ownership. Many creatives feel caught between AI's efficiency and industry expectations around originality.

⬤ Scientists took a more cautious approach, mostly limiting AI to literature reviews and coding help. Trust was the main roadblock: 79% cited reliability concerns with AI output, while 27% pointed to technical limitations. Despite this hesitation, 91% want future AI systems that can handle hypothesis generation and experiment design, capabilities current models can't yet deliver consistently.

⬤ Anthropic's AI interviewer reveals a workplace in transition. The data shows a widening gap between how people think they use AI and how they actually use it, rising expectations for oversight roles, and strong demand for more reliable, specialized AI tools as organizations go deeper into AI-assisted work.

Usman Salis
