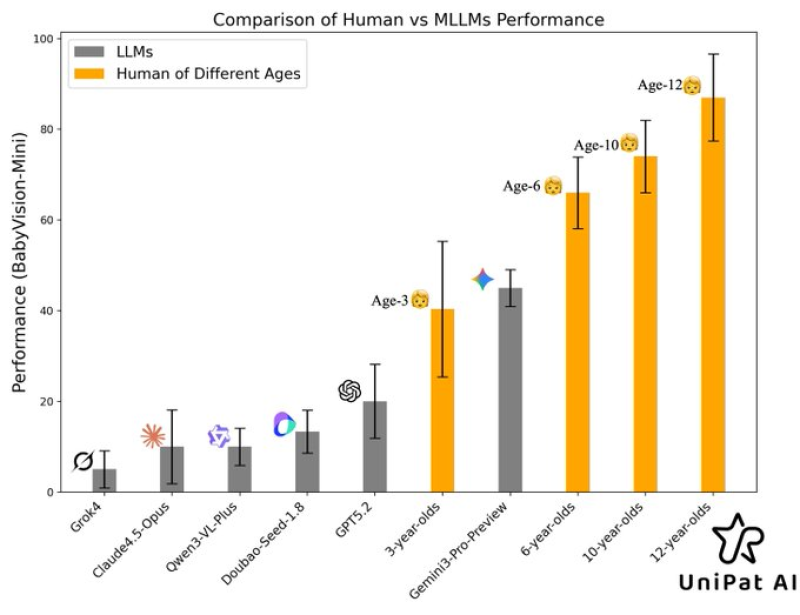

⬤ A team from UniPat AI and Peking University created BabyVision, a testing system that measures how well AI can actually "see" and reason visually. Unlike typical tests, this one strips away all language hints: models have to rely purely on what they can perceive in the images, such as recognizing patterns and understanding spatial relationships. The researchers compared major AI systems against real children of different ages, and the results tell an interesting story.

⬤ The numbers reveal a significant gap. Gemini 3-Pro, the best-performing AI model, matches the visual reasoning of an average three-year-old. Six-year-olds, however, beat it by roughly 20 percent. Older kids aged 10 and 12 leave current AI systems even further behind, showing just how sophisticated human visual understanding becomes as we develop.

⬤ What's striking is how tightly grouped the AI models are. GPT-4, Claude, Qwen, and other vision-capable systems all score within a narrow band, and all fall well short of even young school-age children. By removing language from the equation, BabyVision exposes a weakness that often gets masked in standard tests where verbal reasoning can cover for limited visual skills.

⬤ This matters because visual reasoning sits at the heart of real-world AI applications like robotics, self-driving systems, and anything requiring machines to navigate physical spaces. The results suggest that while multimodal AI has advanced quickly, genuinely understanding what cameras capture remains a tough problem. BabyVision gives researchers a clearer target for where improvements are actually needed.

Marina Lyubimova