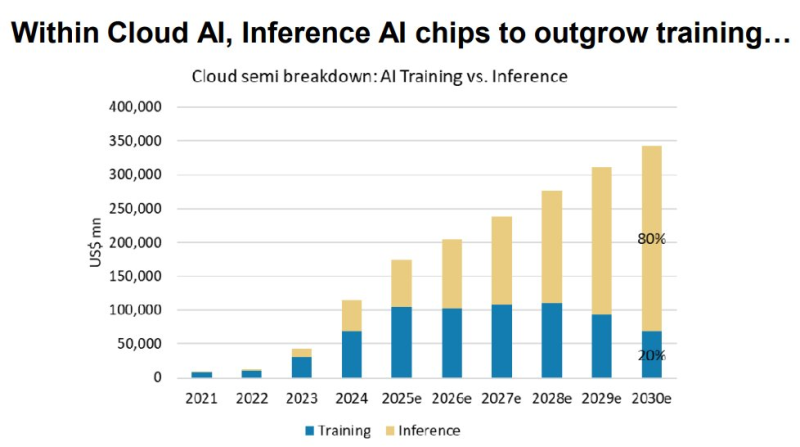

⬤ Global demand for AI inference computing power in cloud data centers is poised to surpass AI training demand over the next several years, according to Morgan Stanley Research. This marks a fundamental shift in AI workloads as the industry transitions from building massive foundation models to deploying them at scale in real-time production environments. The evolution is creating fresh opportunities for chipmakers specializing in efficient, cost-optimized inference hardware.

⬤ Cloud AI semiconductor spending is forecast to surge from 2024 through 2030, with inference gradually dominating the spending mix. By 2030, inference is expected to consume roughly 80% of total AI chip expenditures, while training drops to approximately 20%. This disparity stems from inference powering continuous streams of user interactions and enterprise applications, contrasting sharply with the concentrated, high-intensity compute bursts characteristic of AI model training.

⬤ Training and inference workloads follow fundamentally different purchasing logic. Training centers on finishing a handful of massive compute jobs as quickly as possible, which typically produces brief periods of concentrated spending. Inference runs around the clock, so buyers prioritize cost per output, throughput, memory capacity, power efficiency, and predictable latency. These economics are driving wider adoption of more efficient chip designs, including ASIC-based solutions, alongside traditional AI accelerators.

⬤ The anticipated shift toward inference-dominated demand signals a potential realignment in AI hardware strategy among cloud providers and enterprise users. As inference captures the lion's share of AI compute spending, hardware decisions will likely emphasize operating efficiency, predictable performance, and long-term scalability over peak training speed. The economics of AI deployment, not just model development, appear set to play an increasingly influential role in shaping the semiconductor landscape over the coming decade.

Saad Ullah