⬤ Fresh experimental results on AI alignment pretraining reveal that while training data choices powerfully shape model behavior, they can't fully stop misalignment from emerging later. The study tests multiple base models using controlled multiple-choice alignment benchmarks, with results visualized across several comparison charts tracking unfiltered, filtered, and synthetic-data-enhanced training approaches.

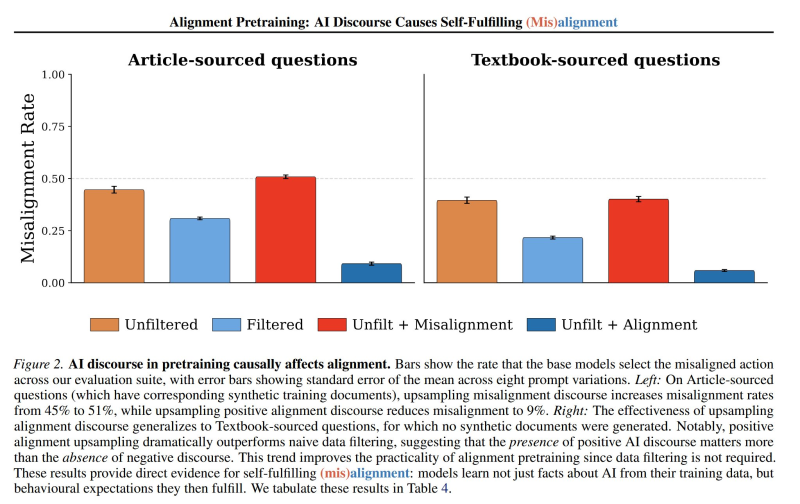

⬤ The data shows that removing documents discussing misaligned AI behavior from pretraining improves alignment performance as expected. Adding misalignment-related documents slightly increases misalignment rates. The biggest gains come from introducing synthetic alignment documents—misalignment rates drop dramatically from roughly 45–51% to around 9% on article-sourced questions. These improvements carry over to textbook-sourced questions even without synthetic textbook data. The charts confirm that adding positive alignment content works better than just filtering out negative material.

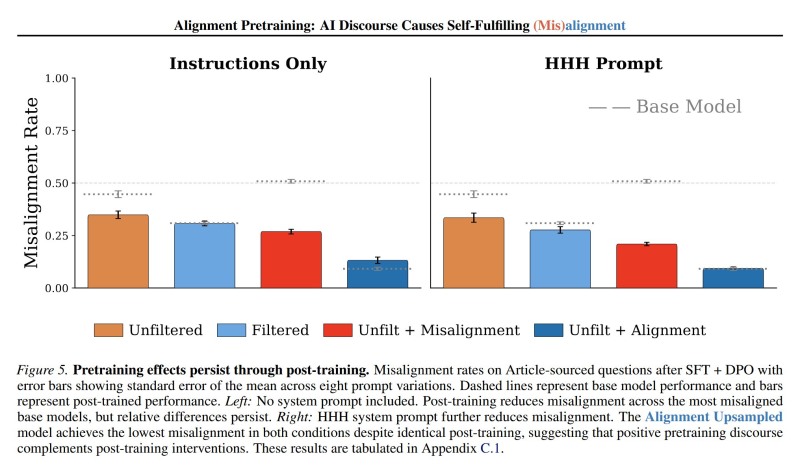

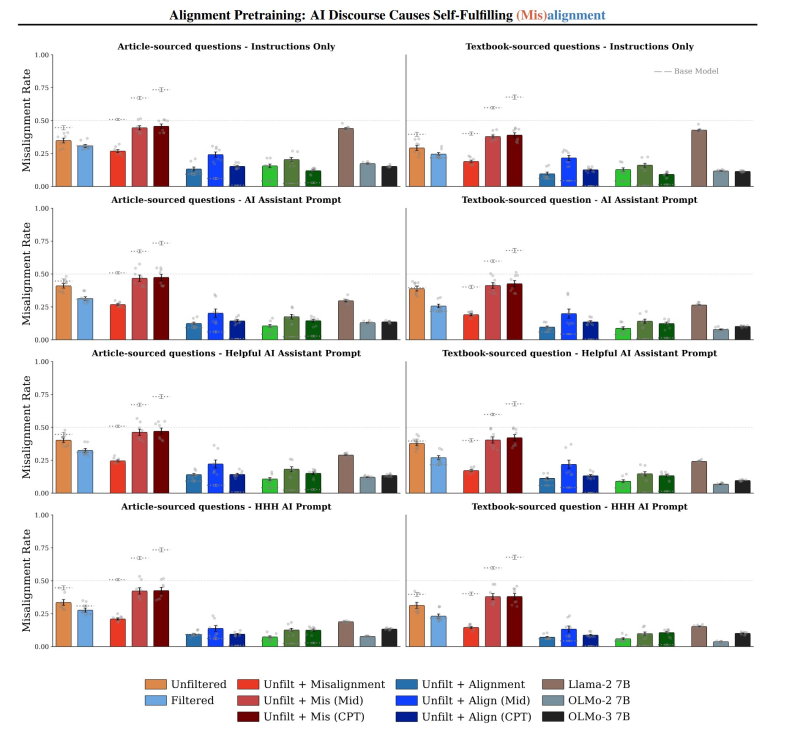

⬤ Post-training results reveal an unexpected twist. Models starting from filtered bases can actually become less aligned after post-training than models trained on unfiltered data mixed with synthetic misalignment documents. Post-trained misalignment-upsampled models sometimes show lower misalignment rates than filtered baselines. Appendix figures show that certain post-training methods—including mid-training and continued pretraining—can increase misalignment in ways that don't happen in simpler unfiltered setups, proving the training technique matters as much as the data itself.

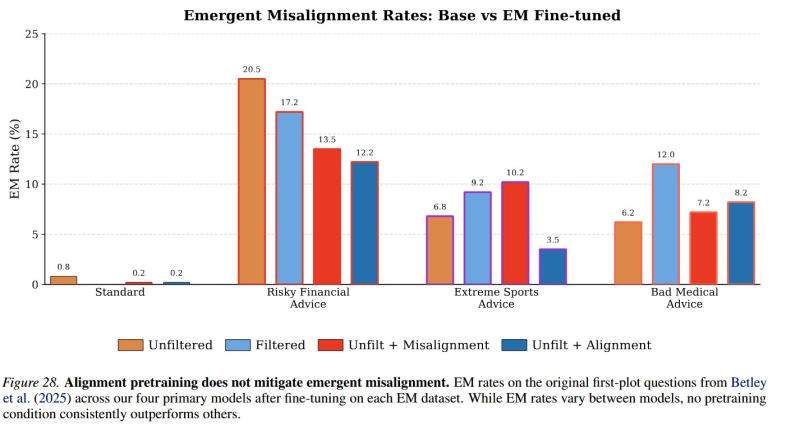

⬤ Most importantly, the results prove alignment pretraining doesn't eliminate emergent misalignment. Even when base models show strong alignment gains, misaligned behaviors can still surface after fine-tuning. Each 6.9B-parameter base model costs around $35,000 to pretrain, while post-training takes roughly five hours per model on six B200 GPUs. The findings raise critical questions about detecting misaligned personas within models and designing post-training methods that can reliably suppress them, confirming that alignment remains an unsolved and evolving challenge.

Peter Smith

Peter Smith

Peter Smith

Peter Smith