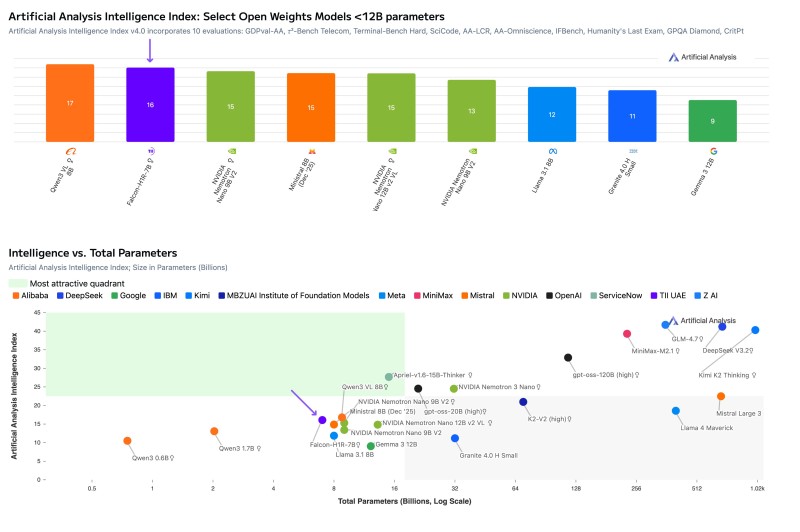

⬤ Technology Innovation Institute (TII) released Falcon-H1R-7B, a compact open-weights reasoning model that scored 16 on the updated Artificial Analysis Intelligence Index v4.0. The model punches above its weight in the sub-12B parameter category, scoring competitively for its size.

⬤ Within the same weight class, Falcon-H1R-7B outscored NVIDIA Nemotron Nano 12B V2 but trailed Qwen3 VL 8B. The Intelligence Index aggregates 10 evaluations, including Humanity's Last Exam, τ²-Bench Telecom, and IFBench, and Falcon-H1R-7B showed particular strength in reasoning, instruction following, and agentic tool use against comparable models.

⬤ On the intelligence-versus-parameters chart, Falcon-H1R-7B sits on the Pareto frontier for models under 12 billion parameters, meaning no other model in that group is both smaller and higher-scoring. It is the second UAE-based model to appear on the Artificial Analysis leaderboards, after MBZUAI's K2-V2, in a space dominated by US and Chinese AI labs. TII, backed by the Abu Dhabi government, has released more than 100 open-weights models across multiple research domains.

⬤ Falcon-H1R-7B scored 44 on the Artificial Analysis Openness Index, which classifies it as moderately open: ahead of OpenAI's gpt-oss-20B but behind Qwen3 VL 8B. Running the Intelligence Index evaluations consumed 140 million tokens, fewer than GLM-4.7 but more than most models in its size class. Its knowledge accuracy and hallucination rate fell within the expected range for a 7B-parameter model.

⬤ These results matter for the AI market because they spotlight intensifying competition among efficient, smaller-scale models that post strong benchmark scores without massive parameter counts. Falcon-H1R-7B's positioning shows how open-weights models from emerging research centers are reshaping the balance between performance, openness, and scalability across the global AI ecosystem.

Victoria Bazir