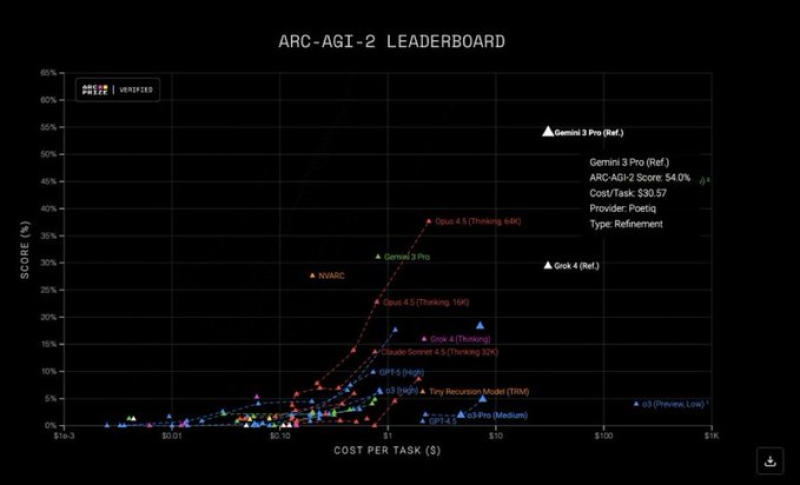

⬤ Google's Gemini 3 Pro jumped from the middle of the pack to the front on the ARC-AGI-2 reasoning test, almost doubling the old record. Poetiq wired Gemini 3 into its refinement pipeline, and the combined system reached a confirmed 54%. The 2025 ARC-AGI-2 chart shows slow, steady gains through the year, then a near-vertical jump the moment Gemini 3 Pro began testing in December.

⬤ The leaderboard places Gemini 3 Pro well clear of its rivals when accuracy is weighed against cost: at about thirty dollars per task, it beats NVARC, Claude Opus 4.5 (Thinking), Claude Sonnet 4.5 (Thinking) and Grok 4. No other system has crossed the fifty percent line; earlier models stalled in the single digits or low teens. The December leap looks like a change in method, not a routine tweak.

⬤ The recipe blends recursive, neuro-symbolic designs with heavy test-time compute and fast generate-and-test loops that run Python logic inside sandboxes until a candidate answer checks out. ARC-AGI-2 probes abstract reasoning on unseen tasks, so shortcuts based on memorization fail. Gemini 3 Pro already sits at 54%; if the trend continues, the long-cited human-level mark of 85% could be reached within months.
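To make the generate-and-test idea concrete, here is a toy Python sketch. It is not Poetiq's pipeline or any lab's code. The candidate programs are a hypothetical, hand-written list standing in for model-generated code, the function names (`compile_candidate`, `passes`, `generate_and_test`) are illustrative, and running `exec` with trimmed builtins is only a stand-in for a real sandbox, not a security boundary.

```python
from typing import Callable, Optional

Grid = list[list[int]]

# Hypothetical stand-in for model output: each candidate is Python source
# defining solve(grid). In a real pipeline a model would propose these.
CANDIDATES = [
    "def solve(g):\n    return [row[:] for row in g]",            # identity
    "def solve(g):\n    return [row[::-1] for row in g]",         # mirror left-right
    "def solve(g):\n    return g[::-1]",                          # flip top-bottom
    "def solve(g):\n    return [list(r) for r in zip(*g)]",       # transpose
    "def solve(g):\n    return [list(r) for r in zip(*g[::-1])]", # rotate 90 clockwise
]


def compile_candidate(src: str) -> Optional[Callable[[Grid], Grid]]:
    """Run candidate source with a trimmed builtins table (NOT a real sandbox)."""
    env: dict = {"__builtins__": {"list": list, "zip": zip}}
    try:
        exec(src, env)
    except Exception:  # syntax errors or crashes at definition time
        return None
    fn = env.get("solve")
    return fn if callable(fn) else None


def passes(fn: Callable[[Grid], Grid], train: list[tuple[Grid, Grid]]) -> bool:
    """Test step: the candidate must reproduce every training output exactly."""
    for inp, out in train:
        try:
            if fn(inp) != out:
                return False
        except Exception:  # runtime errors count as failures
            return False
    return True


def generate_and_test(train, test_input, candidates=CANDIDATES) -> Optional[Grid]:
    """Return the first passing candidate's answer on the test grid, else None."""
    for src in candidates:
        fn = compile_candidate(src)
        if fn is not None and passes(fn, train):
            return fn(test_input)
    return None


if __name__ == "__main__":
    train = [([[1, 2], [3, 4]], [[2, 1], [4, 3]])]
    print(generate_and_test(train, [[5, 6, 7]]))  # mirror rule: [[7, 6, 5]]
```

The loop keeps the first program that reproduces every training pair and only then applies it to the test grid. Real systems spend their test-time compute by generating far more candidates and feeding failures back for refinement; this sketch skips both.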

⬤ These numbers matter because they show the reasoning frontier moving fast. Leading labs now favor compute-heavy hybrid designs that adapt to unfamiliar puzzles instead of simply scaling up model size. When systems reliably top benchmarks built to resist memorization, markets begin to price in wider automation. That shift can ripple through AI and semiconductor stocks alike as expectations reset to the new capability level.

Usman Salis