⬤ ScienceAI Bench just dropped Day 2 results from its DailyBenchmark series, and the differences between AI models are getting harder to ignore. The update brings in fresh contenders like DeepSeek 3.2, Gemini 3 Flash, and Kimi K2 Thinking, all tested on the pharmacokinetic (PK) parameters that matter most in early drug development: how compounds move through the body, how long they stick around, and how quickly they're eliminated.

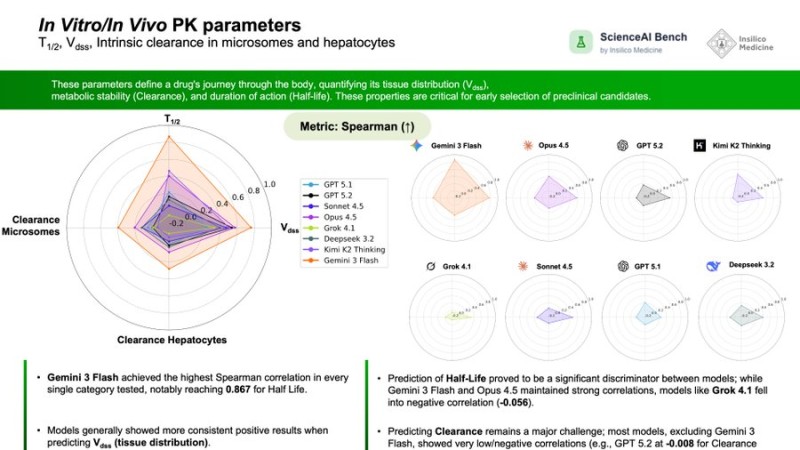

⬤ The testing ground was pharmacokinetic data from the Therapeutic Data Commons (TDC) ADMET datasets. Models had to predict half-life (T1/2), volume of distribution at steady state (Vdss), and intrinsic clearance in microsomes and hepatocytes. Performance was scored with Spearman rank correlation, which checks whether a model orders compounds the same way the measured values do, rather than whether it hits the exact numbers. Gemini 3 Flash swept every category, with a standout 0.867 correlation for half-life prediction.
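To make the metric concrete, here is a minimal Python sketch of Spearman's rank correlation: rank both lists (giving tied values the average of their positions), then take the Pearson correlation of those ranks. The function names and the half-life values are hypothetical illustrations, not ScienceAI Bench's scoring code or data, and the benchmark's exact tie handling is an assumption. In practice, `scipy.stats.spearmanr` computes the same statistic.

```python
from typing import Sequence

# Hypothetical illustration of the metric; not ScienceAI Bench's scoring code.


def average_ranks(values: Sequence[float]) -> list[float]:
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j correspond to ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(predicted: Sequence[float], measured: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation computed on the ranks."""
    if len(predicted) != len(measured) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rp, rm = average_ranks(predicted), average_ranks(measured)
    n = len(rp)
    mp, mm = sum(rp) / n, sum(rm) / n
    cov = sum((a - mp) * (b - mm) for a, b in zip(rp, rm))
    var_p = sum((a - mp) ** 2 for a in rp)
    var_m = sum((b - mm) ** 2 for b in rm)
    if var_p == 0 or var_m == 0:
        raise ValueError("correlation is undefined when one input is constant")
    return cov / (var_p * var_m) ** 0.5


if __name__ == "__main__":
    # Hypothetical measured half-lives (hours) for five compounds.
    measured = [1.2, 3.5, 0.8, 6.0, 2.1]
    # Numbers are off, but the ordering matches the measurements exactly.
    same_order = [1.0, 4.0, 0.5, 9.0, 2.5]
    # Ordering is exactly reversed.
    reversed_order = [4.0, 1.0, 9.0, 0.5, 2.5]
    print(spearman(same_order, measured))      # 1.0
    print(spearman(reversed_order, measured))  # -1.0
```

Because only ranks matter, a model can be badly miscalibrated in absolute terms and still score 1.0. A value near 0, like the −0.008 reported for GPT 5.2 on microsomal clearance, means the predicted ordering carries essentially no monotonic information about the measured ordering, and a negative value means the ordering tends to run backwards.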

⬤ Half-life prediction turned out to be the real test that separated the contenders from the pretenders. While Gemini 3 Flash and Opus 4.5 kept strong positive correlations, Grok 4.1 actually went negative at −0.056. Clearance prediction, though, is where most models hit a wall. Excluding Gemini 3 Flash, nearly every model posted extremely low or negative correlations; GPT 5.2 managed just −0.008 for microsomal clearance. Vdss predictions were more stable across the board, with most models maintaining positive correlations.

⬤ The takeaway is clear: today's AI models handle some PK tasks well but still stumble badly on others. Strong half-life and Vdss performance can't mask the ongoing struggle with clearance prediction, which is crucial for understanding metabolic stability. As ScienceAI Bench keeps adding models and datasets, the benchmark gives the research community a transparent way to track where AI already delivers in drug discovery and where it still falls short.

Alex Dudov