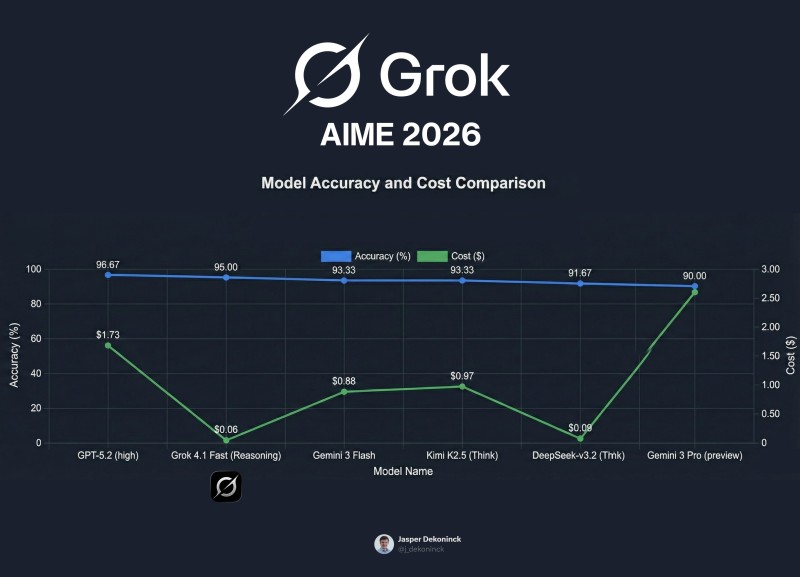

⬤ xAI's Grok model just dropped impressive new numbers on the AIME 2026 MathArena benchmark, showing it can handle advanced math reasoning without breaking the bank. DogeDesigner shared results showing Grok 4.1 Fast hit 95% accuracy at only $0.06 per inference—basically neck-and-neck with GPT-5.2's performance but at a dramatically lower cost.

⬤ The comparison chart tells the story: GPT-5.2 scored 96.67% accuracy but cost around $1.73 per inference. Grok came within 1.67 percentage points of that score at roughly 1/29 of the price ($1.73 / $0.06 ≈ 29). Other heavy hitters in the test included Gemini 3 Flash (93.33% accuracy, $0.88 per inference), Kimi K2.5 (93.33%, $0.97), and DeepSeek-v3.2 (91.67%, $0.09). Gemini 3 Pro preview landed at 90% accuracy with the highest price tag among tested models.
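To make the chart's arithmetic easy to check, here is a minimal Python sketch that recomputes each model's accuracy gap and cost ratio against GPT-5.2. It uses only the figures quoted above; the `results` table and the `compare_to` helper are illustrative names, not part of MathArena, and Gemini 3 Pro preview is left out because its price is not given here.

```python
# Accuracy (%) and cost per inference (USD) as quoted in the article.
# Gemini 3 Pro preview is omitted: its cost is not stated in the text.
results = {
    "GPT-5.2": (96.67, 1.73),
    "Grok 4.1 Fast": (95.00, 0.06),
    "Gemini 3 Flash": (93.33, 0.88),
    "Kimi K2.5": (93.33, 0.97),
    "DeepSeek-v3.2": (91.67, 0.09),
}


def compare_to(baseline, name):
    """Return (accuracy gap in percentage points, baseline cost / model cost)."""
    base_acc, base_cost = results[baseline]
    acc, cost = results[name]
    return round(base_acc - acc, 2), round(base_cost / cost, 1)


for name in results:
    gap, ratio = compare_to("GPT-5.2", name)
    print(f"{name:15s} gap={gap:5.2f} pts  GPT-5.2 costs {ratio:5.1f}x as much")
```

For Grok 4.1 Fast this gives a 1.67-point gap and a ratio of about 28.8, which the article rounds to 29.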

The cost-performance gap here is striking: we're seeing near-identical reasoning capability at radically different price points.

⬤ The AIME evaluation measures advanced mathematical reasoning in large language models, making these cost-performance metrics critical for anyone thinking about large-scale AI deployment. When you're running thousands or millions of inferences, that 29× cost difference between Grok and GPT-5.2 becomes a massive operational consideration.
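As a rough illustration of what that difference means at volume, the sketch below multiplies the quoted per-inference costs by a workload size. It assumes cost scales linearly with the number of inferences, with no volume discounts, caching, or variation in problem length; real bills would differ. The `projected_cost` helper is a hypothetical name.

```python
GROK_COST = 0.06   # USD per inference, as quoted in the article
GPT_COST = 1.73    # USD per inference, as quoted in the article


def projected_cost(cost_per_inference, n_inferences):
    """Linear projection: per-inference cost times volume, rounded to cents."""
    return round(cost_per_inference * n_inferences, 2)


for n in (1_000, 1_000_000):
    grok = projected_cost(GROK_COST, n)
    gpt = projected_cost(GPT_COST, n)
    print(f"{n:>9,} inferences: Grok ${grok:,.2f} vs GPT-5.2 ${gpt:,.2f} "
          f"(difference ${gpt - grok:,.2f})")
```

Under these assumptions, a million inferences comes to about $60,000 on Grok 4.1 Fast versus about $1.73 million on GPT-5.2.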

⬤ Bottom line: pricing efficiency is becoming just as important as raw performance in the AI model wars. When a model can deliver 95% accuracy instead of 96.67% at less than 4% of the cost, that changes the economics of AI infrastructure. For companies scaling AI applications, Grok's combination of strong reasoning and aggressive pricing could shift deployment decisions, especially for math-heavy tasks where a 1.67-percentage-point accuracy difference might not justify paying 29 times more per query.

Victoria Bazir
