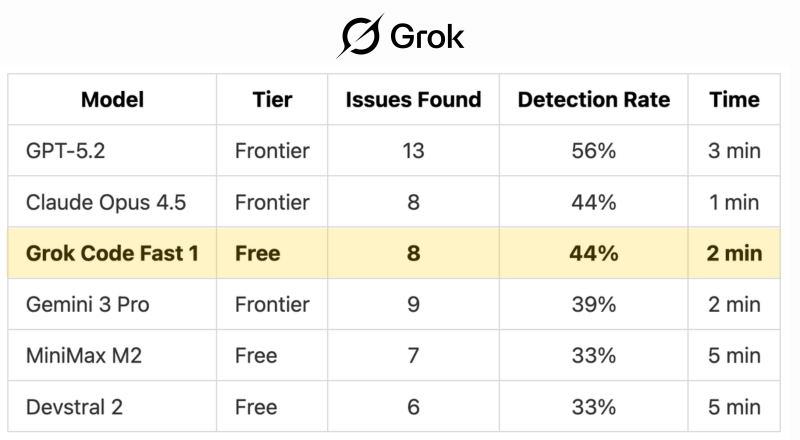

⬤ Recent testing shows Grok Code Fast 1 finding bugs as effectively as Claude Opus 4.5. The comparison ran six AI models through real-world code review tasks in Kilo Code, recording how many software issues each one caught, its detection rate, and how long its review took.

⬤ GPT-5.2 led the pack, finding 13 issues for a 56% detection rate in three minutes. Claude Opus 4.5 spotted eight issues for a 44% detection rate in just one minute. Grok Code Fast 1, despite being a free-tier model, matched that 44% detection rate by also finding eight issues, taking two minutes, only one minute longer than Claude.

⬤ Gemini 3 Pro detected nine issues in total but reached only a 39% detection rate in two minutes, which puts it behind Grok Code Fast 1 on that measure. The other free models, MiniMax M2 and Devstral 2, each found fewer issues, reaching a 33% detection rate, and needed five minutes to finish.

⬤ These results suggest that free and lower-cost AI coding tools are closing the gap with premium models in practical development work. When a free-tier model can match a top-tier option's detection rate while staying competitive on speed, it signals real progress for developers looking for capable, cost-effective code review assistance.

Saad Ullah