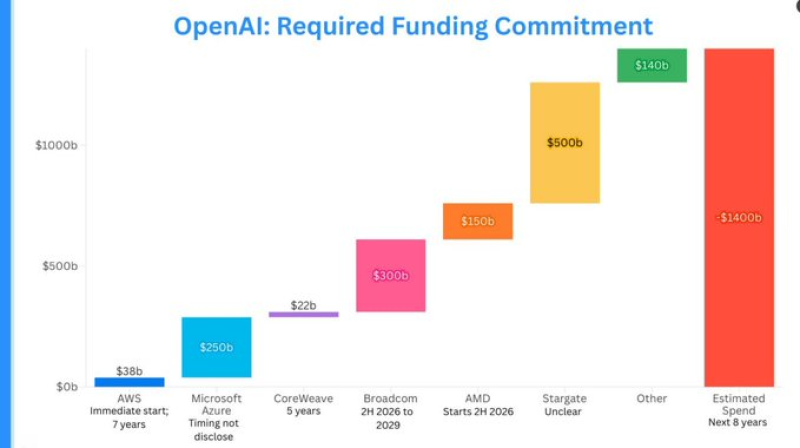

⬤ OpenAI is staring down some seriously big numbers. Market estimates suggest the company could need up to $1.4 trillion in total funding by the early 2030s just to keep its AI systems running, covering compute, infrastructure, and day-to-day operations. Here's the thing: most of that money isn't going to software. It's going to physical, capital-heavy stuff like cloud capacity and data centers.

⬤ A huge chunk of that money goes straight to cloud computing. We're talking massive multi-year deals — think Microsoft Azure and other major providers — plus new spending waves hitting from 2026 onward as the models get bigger and hungrier for compute. Training and running next-gen AI isn't cheap, and the bills are only going up.

If this spending trajectory plays out, OpenAI would become one of the largest long-term consumers of cloud capacity and advanced semiconductors on the planet.

⬤ Chips and hardware are another massive line item — we're looking at hundreds of billions tied to advanced accelerators, custom silicon, and the data center infrastructure needed to power it all. Power, cooling, networking — none of it is cheap, and OpenAI needs it all running at scale to stay competitive.

⬤ OpenAI isn't just an AI company anymore — it's becoming one of the biggest infrastructure plays in tech. This kind of capital-intensive growth is reshaping the entire AI sector, where the real race isn't just about smarter models. It's about who can build the physical foundation to support them.

Eseandre Mordi
