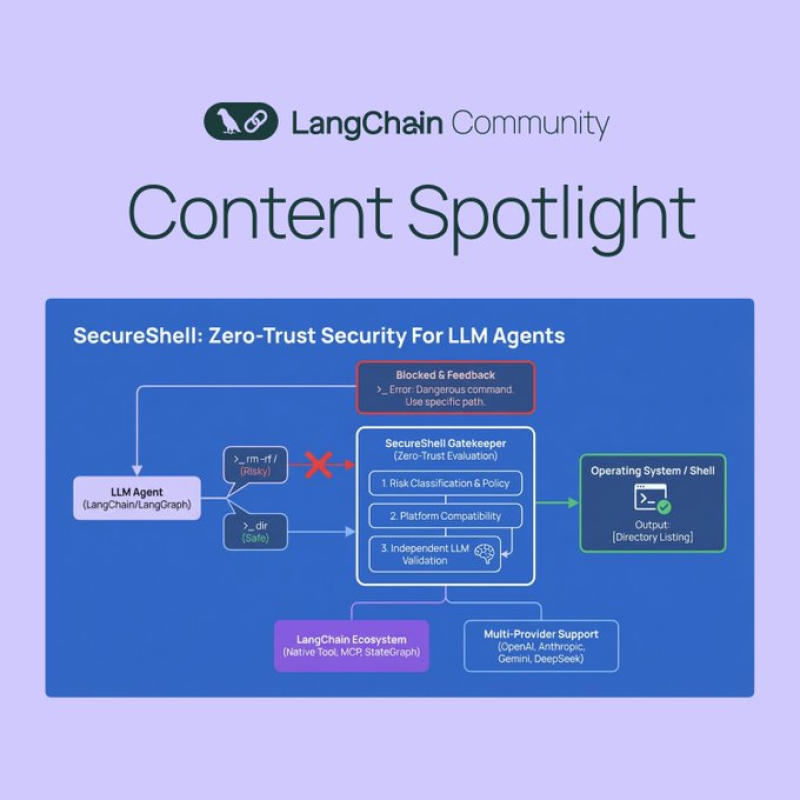

⬤ LangChain's open-source community just gave SecureShell its own spotlight moment — and honestly, it's overdue. The tool does one thing really well: it stops LLM agents from running shell commands that could cause real damage. Before anything hits the operating system, SecureShell steps in and validates it. Think of it as a bouncer at the door between your AI agent and your system.

⬤ Here's the thing — SecureShell runs on a zero-trust model, meaning nothing gets through automatically. Every command goes through risk classification, policy checks, and independent verification before it's allowed to execute. Risky instructions get blocked on the spot. It's a structured validation flow, and it's exactly what you'd want running under the hood when an AI agent has access to your OS.

⬤ The best part? You don't need to rebuild your whole setup to use it. SecureShell plugs directly into LangChain and LangGraph as a drop-in integration. It also supports 4 major AI providers out of the box — OpenAI, Anthropic, Gemini, and DeepSeek — making it a provider-agnostic security layer that fits into pretty much any existing agent workflow.

⬤ As LLM agents get more capable and start handling more complex tasks autonomously, tools like SecureShell are becoming essential. Validating commands at execution time and enforcing security policies isn't optional anymore — it's the baseline. This spotlight is a sign that the LangChain ecosystem is taking agent safety seriously.

Saad Ullah

Saad Ullah

Saad Ullah

Saad Ullah