Fresh research is reshaping how we think about AI agent design. Instead of building bigger models, scientists are exploring how agents can simulate outcomes before acting—a shift that could define the next generation of artificial intelligence systems.

Why Current AI Agents Struggle with Long-Term Planning

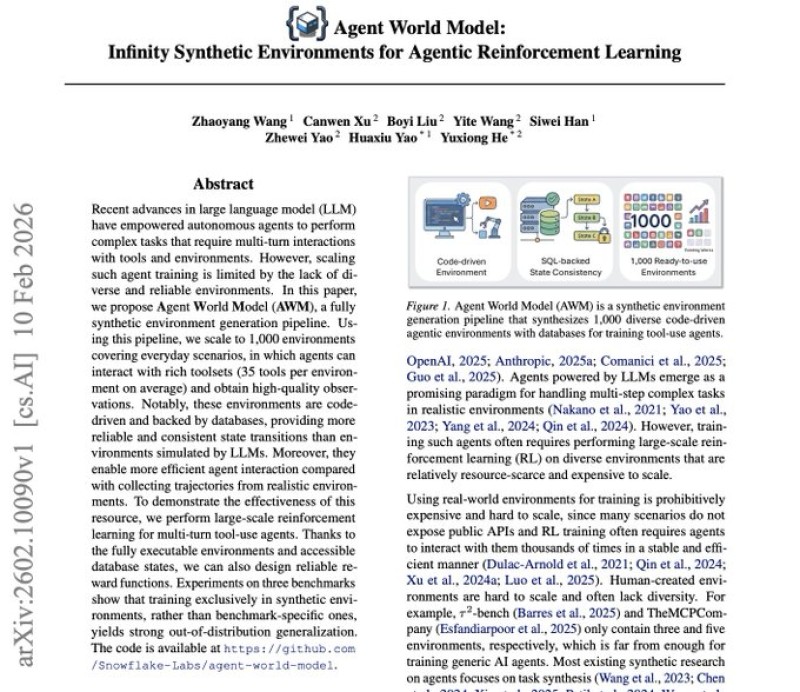

A research paper titled Agent World Model: Infinity Synthetic Environments for Agentic Reinforcement Learning lays out a new architectural layer for AI systems. Today's agents don't fail because they're dumb—they fail because they lack an internal, structured understanding of the world they're working in.

Most agents right now operate in a simple loop: see a prompt, spit out a response. The proposed world model flips that into a reasoning cycle in which the system observes, simulates possible futures, weighs the outcomes, and only then acts. The paper describes agents that build persistent environment states, predict what happens next, and update their beliefs over time. This design moves closer to the coordinated multi-agent execution seen in Anthropic's Claude Code, which deploys multiple AI agents working together.
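To make the observe, simulate, weigh, act cycle concrete, here is a minimal Python sketch. It is not the paper's method: the toy environment (`TRUE_DYNAMICS`), its states and rewards, and the helpers `WorldModel`, `reactive_policy`, `planning_policy`, and `run_episode` are hypothetical names chosen for illustration. The agent keeps a persistent internal model of the environment, rolls out every two-step plan inside that model before choosing an action, and overwrites its predictions with whatever it actually observes.

```python
from itertools import product

# Hypothetical toy environment: state -> action -> (next_state, reward).
# States, actions, and rewards are illustrative assumptions, not from the paper.
TRUE_DYNAMICS = {
    "start":    {"left": ("shortcut", 1.0), "right": ("corridor", 0.0)},
    "shortcut": {"left": ("shortcut", 0.0), "right": ("shortcut", 0.0)},
    "corridor": {"left": ("start", 0.0),    "right": ("treasure", 10.0)},
    "treasure": {"left": ("treasure", 0.0), "right": ("treasure", 0.0)},
}
ACTIONS = ("left", "right")


class WorldModel:
    """The agent's persistent internal model of how the environment behaves."""

    def __init__(self, dynamics):
        # Copy the inner dicts so belief updates never mutate the caller's data.
        self.dynamics = {s: dict(a) for s, a in dynamics.items()}

    def predict(self, state, action):
        """Predict (next_state, reward) without touching the real environment."""
        return self.dynamics[state][action]

    def update(self, state, action, next_state, reward):
        """Belief update: replace the prediction with what was actually observed."""
        self.dynamics[state][action] = (next_state, reward)


def reactive_policy(model, state):
    """Prompt-in, response-out baseline: best immediate reward, no lookahead."""
    return max(ACTIONS, key=lambda a: model.predict(state, a)[1])


def simulate(model, state, plan):
    """Roll a sequence of actions forward inside the model; return total reward."""
    total = 0.0
    for action in plan:
        state, reward = model.predict(state, action)
        total += reward
    return total


def planning_policy(model, state, horizon=2):
    """Simulate every action sequence up to `horizon`, then take the best first step."""
    best_plan = max(product(ACTIONS, repeat=horizon),
                    key=lambda plan: simulate(model, state, plan))
    return best_plan[0]


def run_episode(policy, model, state="start", steps=2):
    """Act in the real environment, updating the model after every step."""
    total = 0.0
    for _ in range(steps):
        action = policy(model, state)                       # decide
        next_state, reward = TRUE_DYNAMICS[state][action]   # act for real
        model.update(state, action, next_state, reward)     # revise beliefs
        total += reward
        state = next_state
    return total


if __name__ == "__main__":
    print(run_episode(reactive_policy, WorldModel(TRUE_DYNAMICS)))  # 1.0
    print(run_episode(planning_policy, WorldModel(TRUE_DYNAMICS)))  # 10.0
```

In this toy setup the reactive agent grabs the immediate reward of 1 and stalls, while the planner accepts a zero-reward step because its simulation shows the larger payoff one step later. Exhaustive rollouts only work for tiny action spaces; a realistic agent would likely need sampled rollouts or learned value estimates instead.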

How World Models Fit into the AI Stack

Researchers stress this isn't about making base language models bigger. It's about restructuring how thinking unfolds across time steps—combining perception, memory, planning, and action into one continuous process. As one researcher noted, "The goal is stable long-term decision making in dynamic environments, not reactive responses."

The concept aligns with the broader architecture layers outlined in an AI stack breakdown, in which agentic AI orchestrates generative models. That framing positions reasoning coordination as a higher-level system component rather than a model-level tweak.
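One way to picture this layering is a thin orchestration layer that owns memory and decision logic while treating the generative model as a swappable component. The Python sketch below is an illustrative assumption, not an architecture taken from the paper or the stack breakdown: `GenerativeModel`, `ScriptedModel`, `Orchestrator`, and the scoring function are hypothetical names, and `ScriptedModel` merely stands in for a real language model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Protocol


class GenerativeModel(Protocol):
    """Model layer: anything that turns a prompt into text (hypothetical interface)."""

    def generate(self, prompt: str) -> str: ...


class ScriptedModel:
    """Stand-in for a real LLM: cycles through canned proposals."""

    def __init__(self, proposals: List[str]):
        self.proposals = proposals
        self.calls = 0

    def generate(self, prompt: str) -> str:
        proposal = self.proposals[self.calls % len(self.proposals)]
        self.calls += 1
        return proposal


@dataclass
class Orchestrator:
    """Agentic layer: coordinates reasoning around an unmodified model."""

    model: GenerativeModel
    score: Callable[[str], float]  # hypothetical evaluator of a proposal
    memory: List[str] = field(default_factory=list)

    def decide(self, observation: str, n_candidates: int = 3) -> str:
        self.memory.append(observation)  # perceive and remember
        context = " | ".join(self.memory)
        # Plan: ask the model for several candidate actions given the context.
        candidates = [self.model.generate(context) for _ in range(n_candidates)]
        choice = max(candidates, key=self.score)  # weigh the candidates
        self.memory.append(f"chose: {choice}")  # remember the action taken
        return choice


if __name__ == "__main__":
    scores = {"wait": 0.1, "open door": 0.9, "search room": 0.5}
    agent = Orchestrator(
        model=ScriptedModel(["wait", "open door", "search room"]),
        score=lambda proposal: scores.get(proposal, 0.0),
    )
    print(agent.decide("door is closed"))  # open door
```

Because the reasoning loop lives in `Orchestrator`, swapping in a different model changes nothing about how candidates are gathered, compared, or remembered, which is the sense in which coordination sits above the model rather than inside it.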

The study signals a transition from reactive tools toward systems that anticipate outcomes before acting. By simulating consequences internally instead of responding step by step, agents inch closer to the kind of forward-thinking decision making that could reshape how future AI systems are designed and deployed.

Eseandre Mordi