The artificial intelligence landscape is witnessing a notable shift as smaller, more efficient models challenge the assumption that bigger is always better. ModelScope's latest release suggests that smart training and design can outweigh raw size, delivering strong benchmark results with a fraction of the computational resources typically required.

3B Model Delivers 76.9 Coding Score Against Larger Competitors

ModelScope introduced the Nanbeige4.1-3B language model, a lightweight system that punches well above its weight class. The model comes equipped with a 256k context window, built-in deep search agent capability, and a two-stage reinforcement learning pipeline that prioritizes correctness before optimizing for speed.
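The announcement does not spell out how the two stages are implemented. As a rough illustration only, the idea of rewarding correctness first and efficiency second could be sketched as below. The function names, the token budget, and the `length_weight` value are hypothetical and are not taken from the Nanbeige4.1-3B release; the sketch also assumes that "speed" means shorter responses.

```python
# Hypothetical sketch of a two-stage reward schedule.
# None of these names or constants come from the Nanbeige4.1-3B release.

def stage_one_reward(is_correct: bool) -> float:
    """Stage 1: reward correctness only, ignoring response length."""
    return 1.0 if is_correct else 0.0


def stage_two_reward(
    is_correct: bool,
    tokens_used: int,
    token_budget: int,
    length_weight: float = 0.2,  # assumed value, chosen for illustration
) -> float:
    """Stage 2: keep correctness as a hard gate, then prefer shorter answers."""
    if not is_correct:
        # A wrong answer never earns reward, however short it is.
        return 0.0
    # Fraction of the budget consumed, capped at 1.0.
    usage = min(tokens_used / token_budget, 1.0)
    return 1.0 - length_weight * usage


if __name__ == "__main__":
    print(stage_one_reward(True))
    print(stage_two_reward(True, tokens_used=500, token_budget=1000))
    print(stage_two_reward(False, tokens_used=10, token_budget=1000))
```

Because an incorrect answer scores zero in both stages, the length term can only rank correct answers against each other, which is one way to keep efficiency from being traded against accuracy.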

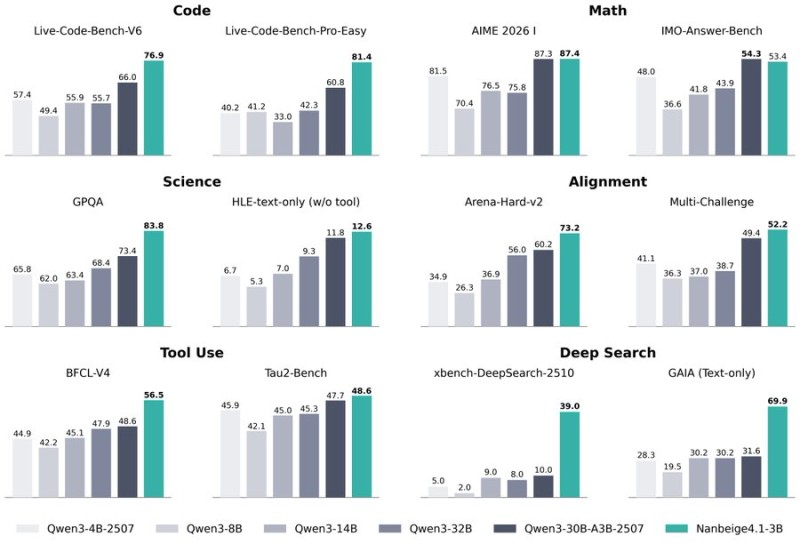

The numbers tell a compelling story. On the Live-Code-Bench-V6 coding benchmark, the model scored 76.9, while mathematics testing on AIME 2026 yielded 87.4. For alignment evaluation using Arena-Hard-v2, the system achieved 73.2, and in deep search testing on the text-only GAIA benchmark, it recorded 69.9.

"The model outperformed Qwen3-32B in several key benchmarks despite operating with far fewer parameters," according to the announcement.

How Reinforcement Learning Drives Smaller AI Models

What makes this achievement particularly interesting is the approach. Rather than simply scaling up parameter counts, the model combines reinforcement learning optimization with agent-style search to boost reasoning efficiency. The goal is not just to do more with less, but to do it smarter.

The trend extends beyond this single release. Similar efficiency gains appeared in results for the open-source MiniMax M2.5 model, where compact systems demonstrated competitive performance on complex tasks that previously required much larger architectures.

Efficiency Over Scale: The New AI Development Paradigm

This development suggests that future performance gains in artificial intelligence may increasingly come from refined architecture and training techniques rather than simply expanding parameter counts. For companies deploying AI systems, this shift could mean lower infrastructure costs, reduced energy consumption, and faster inference times, all while maintaining or even improving output quality.
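To give a sense of the infrastructure gap, a back-of-the-envelope estimate of weight memory is sketched below. It assumes the nominal parameter counts implied by the model names (3 billion and 32 billion) and 16-bit weights. It ignores the KV cache, activations, and runtime overhead, so real deployments will need more than these figures.

```python
# Rough weight-memory estimate; parameter counts are nominal values
# inferred from the model names, not measured figures.

def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed to hold the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


small_gb = weight_memory_gb(3e9)    # nominal 3B model, 16-bit weights
large_gb = weight_memory_gb(32e9)   # nominal 32B model, 16-bit weights

if __name__ == "__main__":
    print(f"3B model weights:  ~{small_gb:.0f} GB")
    print(f"32B model weights: ~{large_gb:.0f} GB")
    print(f"Ratio: ~{large_gb / small_gb:.1f}x")
```

Under these assumptions the smaller model's weights take about 6 GB against roughly 64 GB for the larger one, which is the difference between fitting on a single consumer GPU and needing multiple accelerators or aggressive quantization.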

The implications ripple across the broader AI ecosystem, potentially reshaping deployment strategies, cost structures, and infrastructure demands. As models like Nanbeige4.1-3B continue proving that efficiency can match or exceed brute-force scaling, the industry may be entering a new era where innovation matters more than size.

Peter Smith
