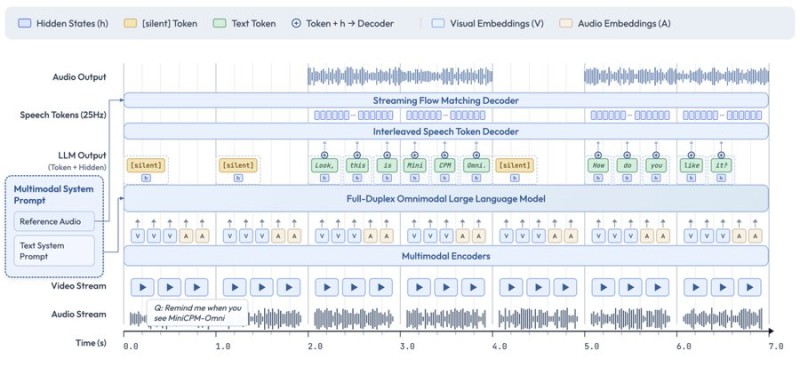

⬤ OpenBMB just dropped MiniCPM-o 4.5 on ModelScope, and it's turning heads for what it can do with a relatively small footprint. This 9B-parameter model uses an end-to-end full-duplex design that handles real-time interaction across multiple input types simultaneously. Instead of throwing more parameters at the problem, the team focused on smarter architecture so the model punches above its weight class.

⬤ What sets this model apart is how it processes information. MiniCPM-o 4.5 handles continuous video and audio streams in parallel, so neither blocks the other and the model stays aware of both channels at once. It also doesn't wait to be asked: the model can volunteer observations based on what it sees and hears in real time, which makes interactions feel more natural and responsive.
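
To make the idea of parallel, non-blocking perception concrete, here is a toy Python sketch. It is not MiniCPM-o 4.5's code or API: the `stream` and `perceive` functions, the frame labels, and the "speak up" rule are all hypothetical, chosen only to illustrate two input channels feeding one listener that can comment without being prompted.

```python
import asyncio

async def stream(name, frames, delay, queue):
    """Hypothetical producer: emits frames on its own schedule, never waiting on the other stream."""
    for frame in frames:
        await asyncio.sleep(delay)
        await queue.put((name, frame))
    await queue.put((name, None))  # end-of-stream marker

async def perceive(queue, n_streams):
    """Consume events from all streams as they arrive and remark unprompted on a trigger."""
    latest = {}      # most recent frame seen on each channel
    remarks = []
    open_streams = n_streams
    while open_streams:
        source, frame = await queue.get()
        if frame is None:
            open_streams -= 1
            continue
        latest[source] = frame
        # Toy proactive rule (an assumption): comment when both channels line up.
        if latest.get("video") == "door opens" and latest.get("audio") == "knock":
            remarks.append("Someone seems to be at the door.")
            latest.clear()
    return remarks

async def main():
    queue = asyncio.Queue()
    video = stream("video", ["empty room", "door opens"], 0.01, queue)
    audio = stream("audio", ["silence", "knock"], 0.015, queue)
    # All three coroutines run concurrently on one event loop.
    _, _, remarks = await asyncio.gather(video, audio, perceive(queue, 2))
    return remarks

remarks = asyncio.run(main())
print(remarks)
```

The point of the sketch is the shape, not the scale: each channel keeps flowing on its own clock, and the listener reacts to the combined state rather than to a user's question.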

⬤ The numbers back up the design choices. On the OpenCompass vision-language benchmark, MiniCPM-o 4.5 scored 77.6, outperforming models with significantly more parameters.

⬤ As demand grows for AI that can handle audio, video, and text seamlessly in real time, compact models like this become increasingly relevant. MiniCPM-o 4.5 shows that architectural innovation (full-duplex processing, proactive interaction, parallel perception) can deliver strong results without the computational overhead of larger alternatives. For developers building multimodal applications, this approach could be a more practical path forward than waiting for the next generation of 100B+ parameter models.

Usman Salis
