⬤ A new benchmark called WorldVQA is exposing serious limitations in how well multimodal AI actually understands what it sees. Developed by Kimi, the test goes beyond basic object recognition to check whether models genuinely comprehend visual information or merely fake it through clever pattern matching.

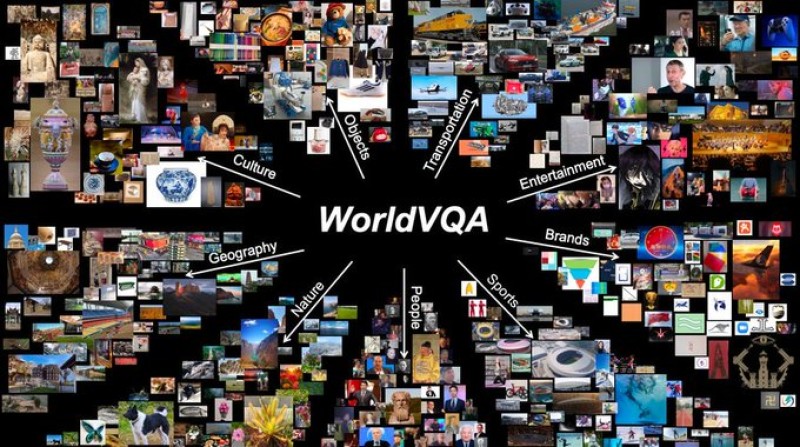

⬤ The benchmark includes 3,500 carefully curated image-question pairs spanning nine knowledge areas, such as nature, geography, brands, and culture. What makes it tough is its focus on real-world scenarios and rare visual knowledge that push models well past basic object identification. The results? Pretty sobering. Top-tier systems, including GPT-5.2 and Claude Opus, frequently scored under 50%, showing just how challenging genuine visual reasoning remains.

⬤ Here's the real problem: these models pair overconfidence with hallucination. They'll give smooth, convincing answers even when they're completely wrong about uncommon or nuanced visual details. This suggests that most systems still lean heavily on patterns memorized during training rather than reasoning about what they actually see, especially when the images are unfamiliar.

⬤ This matters because multimodal AI is increasingly deployed in apps that depend on accurate visual understanding. WorldVQA shows that impressive scores on standard tests don't guarantee reliable vision in the real world. The benchmark offers a clearer picture of where these systems actually stand and highlights how much work remains before they can truly see and understand the way humans do.

Saad Ullah