⬤ AI researchers and developers are pointing to a notable trend: coding models are starting to help build themselves. Developers report that systems like Codex now handle large portions of their own development work, and that Claude Code is contributing to the creation of its next version. This marks a real shift in how AI tools are used during model development.

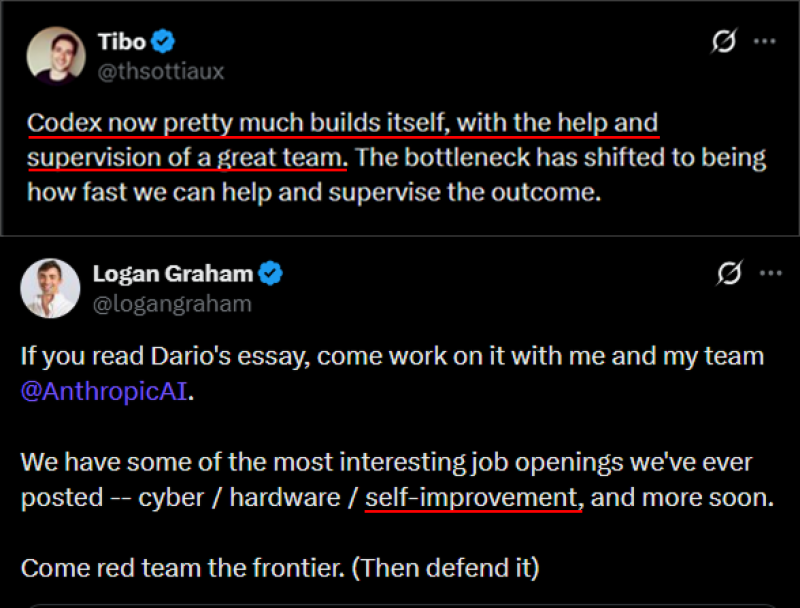

⬤ The posts in the accompanying image make clear that humans are still running the show. One comment notes that Codex works best under strong human supervision: the bottleneck is no longer writing code but how fast teams can guide and review what the AI produces. Another post highlights Anthropic's active hiring push for engineers in safety, hardware, and model robustness.

⬤ Bottom line: AI systems are helping improve their own tooling, but the loop isn't closed yet. Human oversight remains critical for supervising outputs, validating improvements, and steering future iterations. The original tweet emphasizes that these are early signs, not fully autonomous self-improving systems.

⬤ Why this matters: even partial recursive improvement can speed up development cycles and change how AI products evolve. But the continued need for human supervision underscores the real challenges around safety, reliability, and control as AI systems become more involved in their own improvement.

Peter Smith
