⬤ OpenAI is hitting a GPU wall nearly every day—a sign that the company's infrastructure is struggling to keep up with explosive growth. Until major new compute capacity arrives next year (possibly through the "Stargate" project or similar expansions), each new feature launch puts more strain on already-stretched resources. The issue isn't a lack of demand—it's that demand is massively outpacing supply.

⬤ The scale imbalance is striking: OpenAI reportedly has far more users than Google or Meta, but operates with significantly less compute power. That gap is at the heart of the current crunch. New features like the GPT-5 "thinking model" for free users are exciting developments, but they're also GPU-hungry—widening the resource shortfall even as they drive engagement.

⬤ Sora is another major factor. The video generation tool requires serious GPU horsepower, forcing OpenAI to manage resources carefully and make tough tradeoffs. Between advanced reasoning models and media generation, the company's compute needs aren't static—they're climbing fast alongside product ambitions.

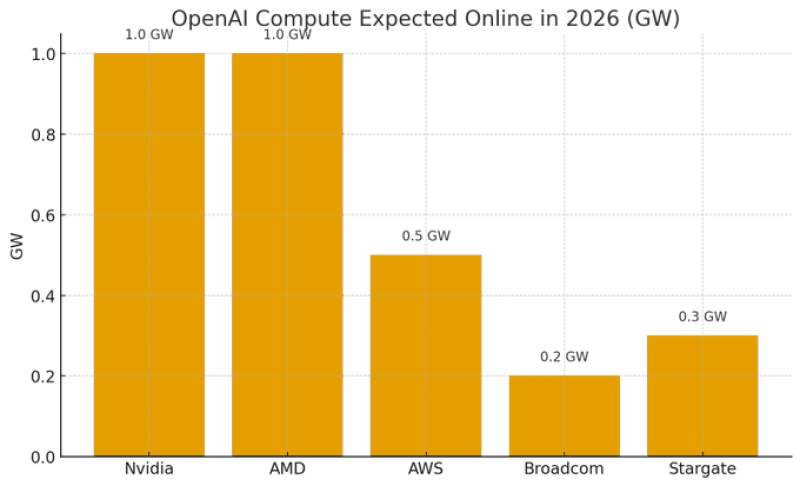

⬤ For investors and industry watchers, the message is clear: OpenAI's ability to roll out features and maintain service quality hinges on GPU availability. The company is racing to scale infrastructure, but until "Stargate" or another major compute buildout goes live, capacity management will be the defining constraint. Expect OpenAI headlines to keep circling back to this tension between user growth and hardware limits.

Peter Smith
