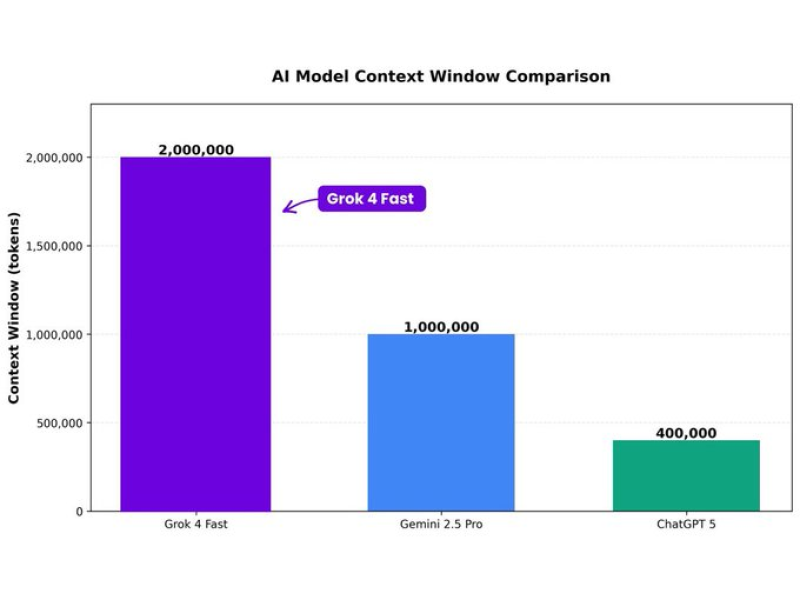

⬤ Grok 4 Fast has rolled out a 2 million-token context window, putting it ahead of rivals such as Gemini 2.5 Pro and ChatGPT 5 on this measure. Gemini handles up to 1 million tokens and ChatGPT 400,000, so Grok's capacity is twice Gemini's and five times ChatGPT's. That makes it one of the largest-context AI models on the market right now.

⬤ The expanded window means you can load entire books, large research collections, or sizable code repositories into a single prompt. For developers, analysts, and research teams, that reduces the need to break material into chunks or run multiple queries.
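
To make the size comparison concrete, here is a minimal Python sketch that checks which of the quoted context windows a set of documents would fit into. The window sizes are the figures cited in this article. The 4-characters-per-token ratio and the function names are assumptions made for illustration; real token counts depend on each model's tokenizer.

```python
# Context window sizes in tokens, as quoted in this article.
CONTEXT_WINDOWS = {
    "Grok 4 Fast": 2_000_000,
    "Gemini 2.5 Pro": 1_000_000,
    "ChatGPT 5": 400_000,
}

# Assumption: roughly 4 characters per token for English text.
# This is a rule of thumb, not any vendor's actual tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def models_that_fit(documents: list[str], reserve: int = 0) -> list[str]:
    """Return the models whose window holds every document plus
    `reserve` tokens set aside for instructions and the reply."""
    needed = sum(estimate_tokens(doc) for doc in documents) + reserve
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]
```

For example, `models_that_fit([open(path).read() for path in paths])` would give a first-pass answer for a set of files before sending anything to a model; an exact check would need the provider's own token counter.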

⬤ The Opportunity & Challenges: This shift opens up new possibilities for AI-driven work: instead of piecing together fragmented inputs, you can work with one comprehensive prompt. Industries that deal with large document sets, such as legal compliance or software auditing, stand to gain the most. But there are real risks too: data security concerns, automation pressure on specialized jobs, and the potential loss of skilled staff as AI takes over more tasks. Companies will need solid governance to make sure that loading proprietary data into a prompt doesn't create new vulnerabilities.

⬤ The performance also stands out. Grok 4 Fast reportedly runs with almost no memory issues while staying fast, which is rare for models with very large context windows, since they usually slow down or lose quality as the input grows. Early testing suggests there may be further gains ahead.

⬤ As context window size becomes a major battleground alongside reasoning power, cost, and multimodal features, Grok 4 Fast's 2 million-token capability gives xAI a serious edge in the increasingly competitive AI landscape.

Peter Smith
