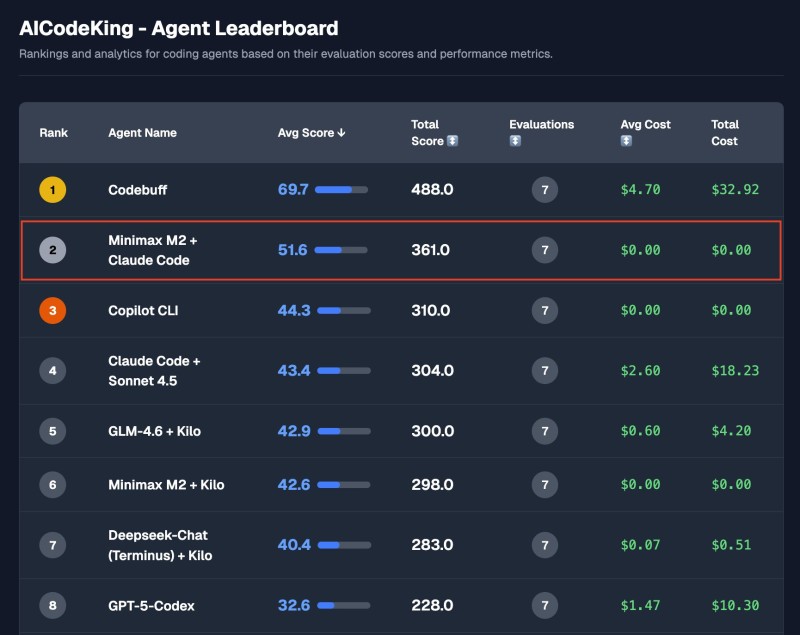

● According to a recent update from AI CodeKing, the MiniMax M2 + Claude Code combo has taken second place on the AI CodeKing Agent Leaderboard. The hybrid agent scored an average of 51.6 and a total of 361.0 across seven tests, all at zero cost. It now sits behind the leading Codebuff (average score 69.7) and ahead of competitors like GLM-4.6 + Kilo, Copilot CLI, and GPT-5-Codex.

● This result highlights the rise of agentic programming, in which AI coding models are paired with shared reasoning and specialized tools. MiniMax M2 reportedly "works much better with Claude Code's tools," pointing to strong compatibility. But there's a catch: multi-model setups can be tricky to deploy and may behave inconsistently across different tasks. That complexity could be a headache for enterprise teams if reliability isn't nailed down.

● Beyond scores, the leaderboard shows a striking cost difference. MiniMax M2 + Claude Code delivered its second-place result at $0.00, compared to Codebuff's $32.92 or Claude Code + Sonnet 4.5's $18.23. That kind of cost efficiency could push developers toward open, modular AI stacks instead of pricey proprietary options, especially when compute budgets are tight.

● The updated KingBench evaluations reflect a bigger trend: composable AI architectures that mix reasoning, coding, and task orchestration for maximum flexibility. As hybrid frameworks like MiniMax + Claude prove themselves, they could reshape how coding agents are built and benchmarked across open-source and enterprise projects.

Saad Ullah
