New AI models don't always arrive with fanfare. Sometimes they slip into public view through code commits, test files, and developer documentation long before any official announcement. This week, the AI community got exactly that kind of glimpse when a reference to "GPT-5.1-mini" surfaced in one of OpenAI's official GitHub repositories, hinting at what might be coming next in the company's model lineup.

A Discovery in the Code

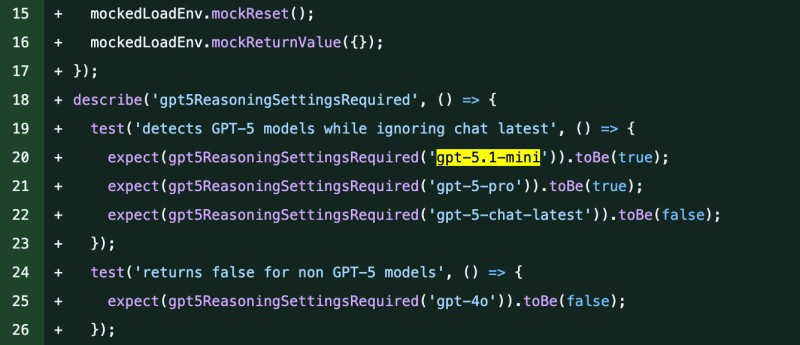

TestingCatalog News spotted the reference inside OpenAI's openai-agents-js GitHub repository. The mention appears in a test for a function called gpt5ReasoningSettingsRequired, which checks whether a given model needs specific reasoning configurations.

The relevant line reads: expect(gpt5ReasoningSettingsRequired('gpt-5.1-mini')).toBe(true). This suggests that GPT-5.1-mini is being treated as a legitimate GPT-5-class model, sitting alongside entries like gpt-5-pro and gpt-5-chat-latest. It's a small detail, but it tells us that OpenAI's next-generation models are already being wired into its developer tooling.
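
To make that concrete, a check like this could be as simple as a prefix match on the model name. The sketch below is a hypothetical reimplementation, not the code from openai-agents-js: only the function name gpt5ReasoningSettingsRequired and the 'gpt-5.1-mini' expectation come from the repository reference. The prefix rule and the 'gpt-4o' comparison are assumptions made for illustration, and the real helper may well treat some GPT-5 variants differently.

```typescript
// Hypothetical sketch: the real helper in openai-agents-js may use different rules.
// Assumption: a model counts as GPT-5-class when its name starts with "gpt-5".
function gpt5ReasoningSettingsRequired(modelName: string): boolean {
  return modelName.startsWith('gpt-5');
}

// Under this assumed rule, the newly spotted name is treated as GPT-5-class...
const miniRequiresSettings = gpt5ReasoningSettingsRequired('gpt-5.1-mini'); // true

// ...while an older model name (chosen here only for contrast) is not.
const legacyRequiresSettings = gpt5ReasoningSettingsRequired('gpt-4o'); // false
```

The point of the sketch is that a single assertion on a new model name is enough to show the name is already expected by the SDK's test suite, whatever the actual matching logic looks like.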

What the Naming Suggests

The naming structure isn't random. The "5.1" designation indicates a minor iteration rather than a major leap, while "mini" signals a smaller, more efficient variant built for speed and cost optimization.

The same test file also references gpt-5-pro and gpt-5-chat-latest, which points toward a tiered model strategy similar to what we've seen from Google's Gemini lineup or Anthropic's Claude family. OpenAI seems to be building a modular ecosystem where different versions of GPT-5 serve different needs: one for power users, one for general chat, and now possibly one for lightweight, fast applications.

Potential Use Cases

If GPT-5.1-mini follows the pattern set by previous "mini" models, it would likely offer reasoning close to GPT-5's at lower latency and reduced cost. That would make it particularly useful for AI agents and automation tools that need quick responses, developers working on local or hybrid deployments where efficiency matters, and businesses that want advanced reasoning without paying premium pricing. The timing also aligns with OpenAI's push into agentic workflows through its Agents SDK, a toolkit designed to help developers build autonomous applications that dynamically call multiple models and tools.

Part of a Larger Shift

OpenAI has moved away from big, single-model launches toward continuous, iterative releases. GPT-4 spawned GPT-4 Turbo and GPT-4o, each tailored for specific workloads. The appearance of GPT-5.1-mini suggests GPT-5 will follow the same path: regular updates, flexible configurations, and performance tiers that let users pick the right balance of speed, cost, and capability. This approach mirrors broader industry trends, where companies like Anthropic and Google are also releasing scaled-down and scaled-up versions of their core models to meet varying demands.

Peter Smith
