AI developers are comparing leading language models more intensely than ever, as performance differences increasingly determine real-world productivity. A recent statement from a well-known AI engineer sparked fresh debate over reliability versus speed, and why users are rapidly moving toward models that work correctly on the first try. This shift marks a new phase in how professional users evaluate AI tools.

The Reliability Revolution

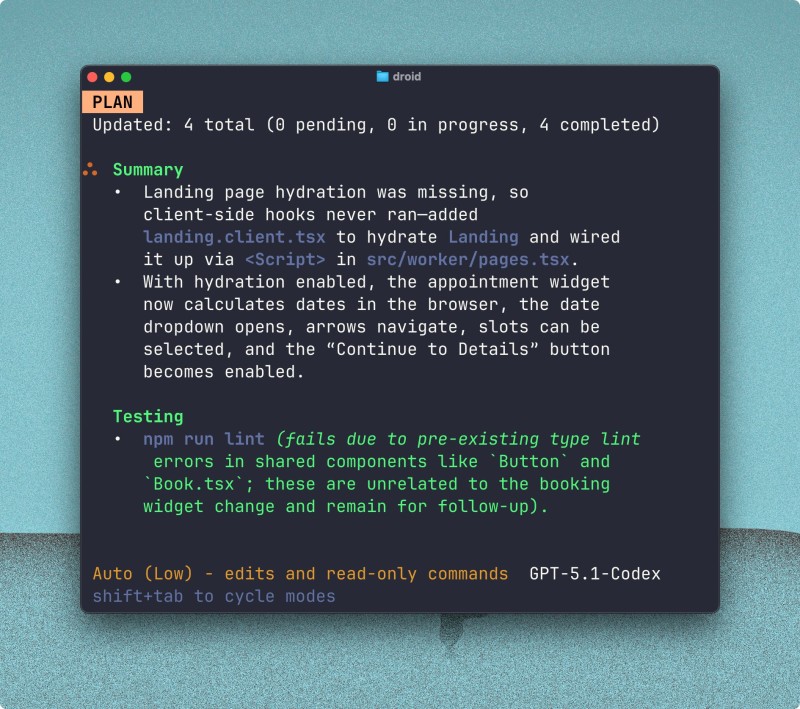

A respected AI developer and benchmarking enthusiast recently argued that GPT-5.1 is reliable enough that its slower speed no longer matters: it simply does the job right the first time.

This observation resonated across the AI community and reignited conversations about what truly matters in modern AI models: raw speed or consistent, error-free output.

Why Reliability Beats Speed

For many developers and technical users, the biggest frustration with language models isn't latency but having to redo work because the model fails to follow instructions or produces errors. GPT-5.1 appears to dramatically reduce this friction, with users repeatedly reporting higher first-pass correctness, far more stable reasoning across multi-step tasks, better adherence to complex or structured instructions, and fewer hallucinations and logic mistakes. Even if GPT-5.1 takes a few seconds longer to respond, the total workflow becomes faster because it requires fewer retries and less correction.

How the Two Models Stack Up

Anthropic's Sonnet 4.5 is widely praised for its speed and efficiency. However, early testers note that GPT-5.1 surpasses it in reliability, especially for tasks involving coding, long-form reasoning, or chained logic. The key differences developers are highlighting include:

- GPT-5.1 introduces fewer logic and syntax errors

- It follows constraints more precisely

- Complex projects succeed on the first attempt more frequently

- Sonnet is faster but often needs more corrections

This leads many developers to conclude that GPT-5.1 saves more time overall, despite being slower per individual request.
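The time-saving argument can be made concrete with a little arithmetic. The sketch below is a rough model, not a measurement: it assumes each attempt succeeds independently with a fixed first-pass rate, and that every attempt costs the model's latency plus some human review time to check the output. The function name and all the numbers are hypothetical, chosen only to illustrate the reasoning.

```python
def expected_time_to_success(latency_s: float,
                             first_pass_rate: float,
                             review_s: float = 0.0) -> float:
    """Expected seconds until the first correct result.

    Assumes independent attempts with a constant success probability,
    so the expected number of attempts is 1 / first_pass_rate.
    Each attempt costs the model latency plus the time spent reviewing it.
    """
    if not 0.0 < first_pass_rate <= 1.0:
        raise ValueError("first_pass_rate must be in (0, 1]")
    if latency_s < 0 or review_s < 0:
        raise ValueError("times must be non-negative")
    expected_attempts = 1.0 / first_pass_rate
    return expected_attempts * (latency_s + review_s)


# Hypothetical figures for illustration only (not benchmark results).
slower_reliable = expected_time_to_success(latency_s=12.0, first_pass_rate=0.9, review_s=30.0)
faster_flaky = expected_time_to_success(latency_s=4.0, first_pass_rate=0.6, review_s=30.0)

print(f"slower but reliable: {slower_reliable:.1f} s")
print(f"faster but flaky:    {faster_flaky:.1f} s")
```

Under these made-up numbers the slower model comes out ahead, but only because checking each answer takes far longer than generating it. With the review time set to zero, the faster model wins in the same model, so the conclusion depends heavily on how costly it is to detect and fix a bad response.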

What This Means for the AI Industry

As AI becomes integrated into professional workflows, from coding to research to automation, users are increasingly intolerant of errors that force rework. This trend has major implications. Benchmarks may start prioritizing consistency and correctness over sheer speed. Developers and enterprises may favor models that reduce risk, not just accelerate output. Vendors may begin creating reliability-optimized model tiers, and higher first-pass accuracy may accelerate adoption in industries that require precision. GPT-5.1's reception suggests the beginning of a new standard focused on getting it right the first time.

The Future Is Accuracy-First

The positive reaction to GPT-5.1 signals a turning point. As users move from experimentation to real, high-stakes work, they value models that inherently reduce friction.

GPT-5.1 may be slower, but for many professionals, it offers something far more important: a model that delivers correct, reliable results on the very first attempt. If this trend continues, we may be witnessing the early stages of a reliability-first era in AI development, where accuracy, stability, and predictable outputs become the true benchmarks for next-generation models.

Saad Ullah
